Post Syndicated from Geographics original https://www.youtube.com/watch?v=DN25V1Q05Os

Organizing 1 million+ photos 🤯

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=0aLbnV6_SS0

Introducing logs from the dashboard for Cloudflare Workers

Post Syndicated from Ashcon Partovi original https://blog.cloudflare.com/workers-dashboard-logs/

If you’re writing code: what can go wrong, will go wrong.

Many developers know the feeling: “It worked in the local testing suite, it worked in our staging environment, but… it’s broken in production?” Testing can reduce mistakes and debugging can help find them, but logs give us the tools to understand and improve what we are creating.

if (this === undefined) {
  console.log("there’s no way… right?") // Narrator: there was.
}

While logging can help you understand when the seemingly impossible is actually possible, it’s something that no developer really wants to set up or maintain on their own. That’s why we’re excited to launch a new addition to the Cloudflare Workers platform: logs and exceptions from the dashboard.

Starting today, you can view and filter the console.log output and exceptions from a Worker… at no additional cost with no configuration needed!

View logs, just a click away

When you view a Worker in the dashboard, you’ll now see a “Logs” tab which you can click on to view a detailed stream of logs and exceptions. Here’s what it looks like in action:

Each log entry contains an event with a list of logs, exceptions, and request headers if it was triggered by an HTTP request. We also automatically redact sensitive URLs and headers such as Authorization, Cookie, or anything else that appears to have a sensitive name.
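To make the redaction idea concrete, here is a minimal sketch of name-based header redaction. It is not Cloudflare's actual implementation: the `redactHeaders` function, the `SENSITIVE_NAME` pattern, and the `REDACTED` placeholder are all assumptions chosen for illustration.

```javascript
// Hypothetical sketch only: the real redaction rules are not described in this post.
// Any header whose name matches this assumed pattern is treated as sensitive.
const SENSITIVE_NAME = /authorization|cookie|token|secret|key|password/i

function redactHeaders (headers) {
  const redacted = {}
  for (const [name, value] of Object.entries(headers)) {
    // Replace the value, but keep the header name so the log stays readable.
    redacted[name] = SENSITIVE_NAME.test(name) ? "REDACTED" : value
  }
  return redacted
}

console.log(redactHeaders({
  "authorization": "Bearer abc123",
  "cookie": "session=xyz",
  "user-agent": "curl/7.79.1"
}))
```

Matching on the header name rather than the value keeps the check cheap, at the cost of missing secrets carried in headers with innocuous names.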

If you are in the Durable Objects open beta, you will also be able to view the logs and requests sent to each Durable Object. This is a great tool to help you understand and debug the interactions between your Worker and a Durable Object.

For now, we support filtering by event status and type, though you can expect more filters to be added to the dashboard very soon. Advanced filtering is already available today with the wrangler CLI, which is discussed later in this post.

console.log(), and you’re all set

It’s really simple to get started with logging for Workers. Simply invoke one of the standard console APIs, such as console.log(), and we handle the rest. That’s it! There’s no extra setup, no configuration needed, and no hidden logging fees.

function logRequest (request) {
  const { cf, headers } = request
  const { city, region, country, colo, clientTcpRtt } = cf

  console.log("Detected location:", [city, region, country].filter(Boolean).join(", "))

  if (clientTcpRtt) {
    console.debug("Round-trip time from client to", colo, "is", clientTcpRtt, "ms")
  }

  // You can also pass an object, which will be interpreted as JSON.
  // This is great if you want to define your own structured log schema.
  console.log({ headers })
}

In fact, you don’t even need to use console.log to view an event from the dashboard. If your Worker doesn’t generate any logs or exceptions, you will still be able to see the request headers from the event.

Advanced filters, from your terminal

If you need more advanced filters you can use wrangler, our command-line tool for deploying Workers. We’ve updated the wrangler tail command to support sampling and a new set of advanced filters. You also no longer need to install or configure cloudflared to use the command. Not to mention it’s much faster, no more waiting around for logs to appear. Here are a few examples:

# Filter by your own IP address, and if there was an uncaught exception.
wrangler tail --format=pretty --ip-address=self --status=error

# Filter by HTTP method, then apply a 10% sampling rate.
wrangler tail --format=pretty --method=GET --sampling-rate=0.1

# Filter using a generic search query.
wrangler tail --format=pretty --search="TypeError"

We recommend using the “pretty” format, since wrangler will output your logs in a colored, human-readable format. (We’re also working on a similar display for the dashboard.)

However, if you want to access structured logs, you can use the “json” format. This is great if you want to pipe your logs to another tool, such as jq, or save them to a file. Here are a few more examples:

# Parses each log event, but only outputs the url.
# The jq filter is quoted so the shell does not treat "?" as a glob character.
wrangler tail --format=json | jq '.event.request?.url'

# You can also specify --once to disconnect the tail after receiving the first log.
# This is useful if you want to run tests in a CI/CD environment.
wrangler tail --format=json --once > event.json

Try it out!

Both logs from the dashboard and wrangler tail are available and free for existing Workers customers. If you would like more information or a step-by-step guide, check out any of the resources below.

- Go to the dashboard and look at some logs!

- Read the getting started guide for logging.

- Look at the tail logs API reference.

Monitor, Evaluate, and Demonstrate Backup Compliance with AWS Backup Audit Manager

Post Syndicated from Steve Roberts original https://aws.amazon.com/blogs/aws/monitor-evaluate-and-demonstrate-backup-compliance-with-aws-backup-audit-manager/

Today, I’m happy to announce the availability of AWS Backup Audit Manager, a new feature of AWS Backup that helps you monitor and evaluate the compliance status of your backups to meet business and regulatory requirements, and enables you to generate reports that help demonstrate compliance to auditors and regulators.

AWS Backup is a fully managed service that provides the ability to initiate policy-driven backups and restores of AWS applications, simplifying the process of protecting data at scale by removing the need for custom scripts and manual processes. However, customers still needed to use their own tooling for verifying that backup policies were being enforced and, as part of proving adherence to auditors, parsing backup transcripts to convert them into auditable reports.

With AWS Backup Audit Manager, you can now continuously and automatically track your backup activity, such as changes to a backup plan or backup vault, and generate automatic daily reports. AWS Backup Audit Manager provides built-in, customizable, compliance controls. Simply put, controls are procedures with backup policy parameters, for example the backup frequency or the retention period, that align with your business compliance and regulatory requirements.

You create a framework, scoped to an account and Region, and add the controls you need to it. Backup activities are tracked against the controls, automatically detecting violations of your defined data protection policies, enabling you to take quick corrective actions. To enable tracking of backup activities, AWS Backup Audit Manager requires you to enable monitoring through AWS Config for your backup plans (AWS::Backup::BackupPlan resource type), backup selection (AWS::Backup::BackupSelection), vaults (AWS::Backup::BackupVault), recovery points (AWS::Backup::RecoveryPoint), and AWS Config resource compliance (AWS::Config::ResourceCompliance). You can check the recording status of these resources in the AWS Backup console, using the Resource Tracking section of the Frameworks page.

Once you’ve added the controls you need to your framework, you deploy it. If you have different internal or regulatory standards to meet, you can create and deploy additional frameworks of controls. Once the framework is deployed, you can set up automatic daily reports of your backup activity. These are displayed in a dashboard, and you can also request on-demand reports at any time. You can also import your findings into AWS Audit Manager, a service I wrote about during AWS re:Invent 2020 in this news blog post.

This short video gives a brief overview of the new AWS Backup Audit Manager feature.

Available Controls and Backup Reports

AWS Backup Audit Manager provides five backup governance control templates and backup activity reporting on your backup jobs, copy jobs, and restore jobs. These reports improve visibility into backup activities for a single account and Region, helping you monitor your operational posture and identify failures that may need further action.

When creating a framework, you provide a name, an optional description, and you select whether to use the provided AWS Backup framework type, which includes five pre-defined controls, or you can opt to customize your framework.

Choosing Custom framework expands the panel to show the available controls, their parameters, and the option to include or exclude them from your framework. The five available controls are titled Backup resources protected by backup plan, Backup plan minimum frequency and minimum retention, Backup prevent recovery point manual deletion, Backup recovery point encrypted, and Backup recovery point minimum retention. To the right of each control’s title you’ll find an info link that describes what the control evaluates, how frequently, and what it means for a resource to be compliant with the control.

Let’s examine a couple of controls. The Backup resources protected by backup plan control enables you to select all supported resources, or those identified by a tag, by type, or a particular resource. This control helps identify gaps in your backup coverage.

The Backup plan minimum frequency and minimum retention control has parameters governing how frequently the backup plan should be taking backups, and for how long recovery points should be maintained. The default settings require backups to occur every hour, and recovery points should be retained for a month, but you can customize the settings to meet your business compliance requirements.
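As a rough illustration of what this control checks, the sketch below compares a backup plan against a frequency and retention requirement. It is not how AWS Backup Audit Manager evaluates compliance internally. The `evaluatePlan` function and every field name are hypothetical, and "a month" is approximated as 30 days.

```javascript
// Hypothetical control parameters mirroring the defaults described above:
// a backup at least every hour, and recovery points kept for about a month.
const defaultControl = { maxIntervalHours: 1, minRetentionDays: 30 }

// Returns which requirements a plan meets. Field names are illustrative only.
function evaluatePlan (plan, control = defaultControl) {
  const frequencyOk = plan.backupIntervalHours <= control.maxIntervalHours
  const retentionOk = plan.retentionDays >= control.minRetentionDays
  return { compliant: frequencyOk && retentionOk, frequencyOk, retentionOk }
}

// A plan that backs up daily and keeps recovery points for 35 days
// satisfies the retention requirement but not the frequency requirement.
console.log(evaluatePlan({ backupIntervalHours: 24, retentionDays: 35 }))
```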

You complete your selections for the remaining controls, including them and setting appropriate parameter values for your needs, or excluding them from the framework, and then click Create framework to complete the process. The new framework will be created and deployed, which will take a few minutes. If needed, you can go back and edit the controls and parameters in a framework at any time.

Once deployed, the controls in the framework will start to evaluate compliance and you can inspect compliance status in the console by selecting the framework. The summary section reports the overall compliance status of the framework and the number of controls in the framework that are compliant or non-compliant, based on your deployed control definitions.

Below the summary, you’ll find a list containing compliance details for each of the controls in the framework, which can be filtered by status. Each control details whether it’s compliant or non-compliant, and how many resources monitored by the control are non-compliant. Clicking a control title will take you directly to the AWS Config dashboard, where you can view more details on the resources identified by the control.

Automated reports on backup activity can be used to demonstrate compliance to auditors and regulators. To set up reports, first click the Reports entry in the navigation toolbar, and then click Create report plan. You’ll be asked to select a report template.

With the template selected (I chose Backup jobs report), you fill in a name and optional description, choose where in your Amazon Simple Storage Service (Amazon S3) buckets you want the report to be delivered, and the report file formats, and then click Create report plan. Your report will update every 24 hours, and you can run an on-demand report at any time.

Once a report has been run, either automatically or on-demand, you can view the report data by first selecting the report in your Report plans list, followed by clicking View report. You’ll be taken directly to the chosen S3 location of the report files, where you’ll see one object (report) per chosen file type.

Downloading the file shows you the time period in which the resources were evaluated, the backup job details, failure or completion status, status messages, the resource type and backup plan, and more. Here I’ve opened the CSV format file in a spreadsheet.

Open Raven Launch Partnership

With this launch, we’re excited to have Open Raven join us as an AWS Backup partner. Open Raven is a cloud-native data security platform purpose-built for protecting modern data lakes and warehouses. From finding all data locations to proactively identifying exposure, their platform solves a broad spectrum of problems that organizations commonly face when living with large amounts of cloud-based data.

Open Raven Chief Technology Officer Mark Curphey had this to say about the new AWS Backup feature: “To successfully recover from a ransomware attack, organizations need to plan ahead by completing two foundational tasks, identifying critical data and systems and backing them up as per organizational requirements so that they can be protected and recovered. The combination of AWS Backup Audit Manager and Open Raven streamlines this effort, eliminating guesswork and hours of manual toil.”

Start Using AWS Backup Audit Manager Today

AWS Backup Audit Manager is available today in the US East (N. Virginia, Ohio), US West (N. California, Oregon), Canada (Central), EU (Frankfurt, Ireland, London, Paris, Stockholm), South America (Sao Paulo), Asia Pacific (Hong Kong, Mumbai, Seoul, Singapore, Sydney, Tokyo), and Middle East (Bahrain) Regions.

For more information about Backup Audit Manager, refer to this section in the AWS Backup Developer Guide. To get started, visit the AWS Backup console.

Cybercriminals Selling Access to Compromised Networks: 3 Surprising Research Findings

Post Syndicated from Paul Prudhomme original https://blog.rapid7.com/2021/08/24/cybercriminals-selling-access-to-compromised-networks-3-surprising-research-findings/

Cybercriminals are innovative, always finding ways to adapt to new circumstances and opportunities. The proof of this can be seen in the rise of a certain variety of activity on the dark web: the sale of access to compromised networks.

This type of dark web activity has existed for decades, but it matured and began to truly thrive amid the COVID-19 global pandemic. The worldwide shift to a remote workforce gave cybercriminals more attack surface to exploit, which fueled sales on underground criminal websites, where buyers and sellers transfer network access to compromised enterprises and organizations to turn a profit.

Having witnessed this sharp rise in breach sales in the cybercriminal ecosystem, IntSights, a Rapid7 company, decided to analyze why and how criminals sell their network access, with an eye toward understanding how to prevent these network compromise events from happening in the first place.

We have compiled our network compromise research, as well as our prevention and mitigation best practices, in the brand-new white paper “Selling Breaches: The Transfer of Enterprise Network Access on Criminal Forums.”

During the process of researching and analyzing, we came across three surprising findings we thought worth highlighting. For a deeper dive, we recommend reading the full white paper, but let’s take a quick look at these discoveries here.

1. The massive gap between average and median breach sales prices

As part of our research, we took a close look at the pricing characteristics of breach sales in the criminal-to-criminal marketplace. Unsurprisingly, pricing varied considerably from one sale to another. A number of factors can influence pricing, including everything from the level of access provided to the value of the victim as a source of criminal revenue.

That said, we found an unexpectedly significant discrepancy between the average price and the median price across the 40 sales we analyzed. The average price came out to approximately $9,640 USD, while the median price was $3,000 USD.

In part, this gap can be attributed to a few unusually high prices among the most expensive offerings. The lowest price in our dataset was $240 USD for access to a healthcare organization in Colombia, but healthcare pricing tends to trend lower than other industries, with a median price of $700 in this sample. On the other end of the spectrum, the highest price was for a telecommunications service provider that came in at about $95,000 USD worth of Bitcoin.

Because of this discrepancy, IntSights researchers view the average price of $9,640 USD as a better indicator of the higher end of the price range, while the median price is more representative of typical pricing for these sales — $3,000 USD was also the single most common price. Nonetheless, it was fascinating to discover this difference and dig into the reasons behind it.
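The effect of a few expensive outliers on the average can be seen in a small worked example. The price list below is hypothetical and is not the research dataset: only the lowest price ($240), the highest price ($95,000), and the repeated $3,000 price echo figures from the findings above.

```javascript
// Hypothetical sample prices in USD, not the actual dataset.
const samplePrices = [240, 700, 1500, 3000, 3000, 3000, 5000, 95000]

function mean (values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length
}

function median (values) {
  // Sort a copy numerically so the caller's array is left untouched.
  const sorted = [...values].sort((a, b) => a - b)
  const mid = Math.floor(sorted.length / 2)
  return sorted.length % 2 === 0
    ? (sorted[mid - 1] + sorted[mid]) / 2
    : sorted[mid]
}

console.log("mean:", mean(samplePrices))     // mean: 13930
console.log("median:", median(samplePrices)) // median: 3000
```

A single $95,000 listing pulls the mean far above what most listings cost, while the median stays at the typical price.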

2. The numerical dominance of tech and telecoms victims

While the sales of network access are a cross-industry phenomenon, technology and telecommunications companies are the most common victims. Not only are they frequent targets, but their compromised access also commands some of the highest prices on the market.

In our sample, tech and telecoms represented 10 of the 46 victims, or 22% of those affected by industry. Out of the 10 most expensive offerings we analyzed, four were for tech and telecommunications organizations, and there were only two that had prices under $10,000 USD. A telecommunications service provider located in an unspecified Asian country also had the single most expensive offering in this sample at approximately $95,000 USD.

After investigating the reasoning behind this numerical dominance, IntSights researchers believe that the high value and high number of tech and telecommunications companies as breach victims stem from their usefulness in enabling further attacks on other targets. For example, a cybercriminal who gains access to a mobile service provider could conduct SIM swapping attacks on digital banking customers who use two-factor authentication via SMS.

These pricing standards were surprisingly expensive compared to other industries, but for good reason: the investment may cost more upfront but prove more lucrative in the long run.

3. The low proportion of retail and hospitality victims

As previously mentioned, we broke down the sales of network access based on the industries affected, and to our surprise, only 6.5% of victims were in retail and hospitality. This seemed odd, considering the popularity of the industry as a target for cybercrime. Think of all the headlines in the news about large retail companies falling victim to a breach that exposed millions of customer credentials.

We explored the reasoning behind this low proportion of victims in the space and came to a few conclusions. For example, we theorized that the main customers for these network access sales are ransomware operators, not payment card data collectors. Payment card data collection is likely a more optimal way to monetize access to a retail or hospitality business, whereas putting ransomware on a retail and hospitality network would actually “kill the goose that lays the golden eggs.”

We also found that the second-most expensive offering in this sample was for access to an organization supporting retail and hospitality businesses. The victim was a third party managing customer loyalty and rewards programs, and the seller highlighted how a buyer could monetize this indirect access to its retail and hospitality customer base. This victim may have been more valuable because, among other things, loyalty and rewards programs are softer targets with weaker security than credit cards and bank accounts; thus, they’re easier to defraud.

Learn more about compromised network access sales

Curious to learn more about the how and why of cybercriminals selling compromised network access? Read our white paper, Selling Breaches: The Transfer of Enterprise Network Access on Criminal Forums, for the full story behind this research and how it can inform your security efforts.

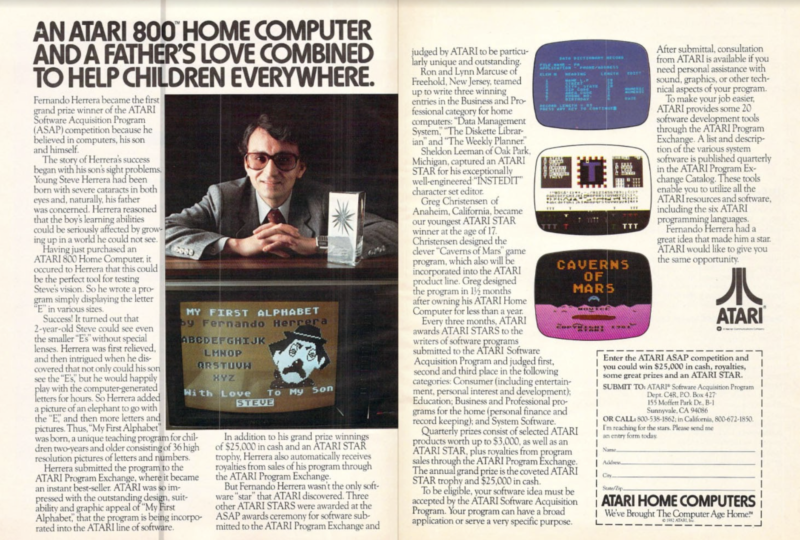

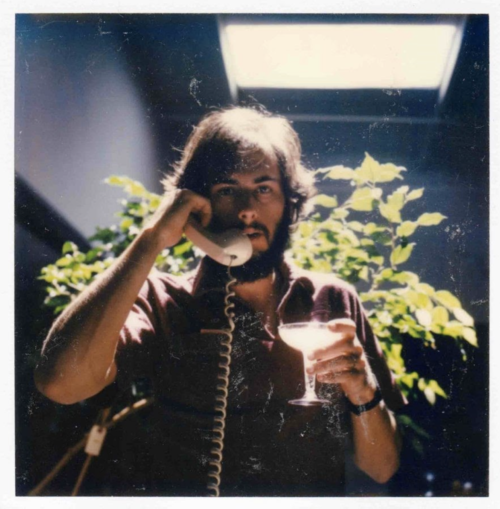

Behind the scenes at Atari

Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/behind-the-scenes-at-atari/

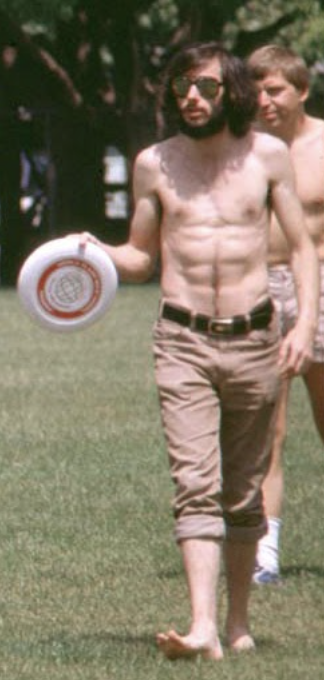

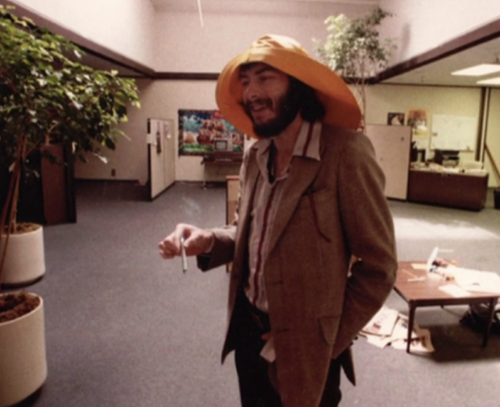

We love Wireframe magazine’s regular feature ‘The principles of game design’. They’re written by video game pioneer Howard Scott Warshaw, who authored several of Atari’s most famous and infamous titles. In the latest issue of Wireframe, he provides a snapshot of the hell-raising that went on behind the scenes at Atari…

Video game creation is unusual in that developers need to be focused intently on achieving design goals while simultaneously battling tunnel vision and re-evaluating those goals. It’s a demanding and frustrating predicament. Therefore, a solid video game creator needs two things: a way to let ideas simmer (since rumination is how games grow from mediocre to fabulous) and a way to blow off steam (since frustration abounds while trying to achieve fabulous). At Atari, there was one place where things both simmered and got steamy… the hot tub. The only thing we couldn’t do was keep a lid on the antics cooked up inside.

The hot tub was situated in the two-storey engineering building. This was ironic, because the hot tub generated way more than two stories in that building. The VCS/2600 and Home Computer development groups were upstairs. The first floor held coin-op development, a kitchen/cafeteria, and an extremely well-appointed gym. The gym featured two appendages: a locker area and the hot tub room. Many shenanigans were hatched and/or executed in the hot tub. One from the more epic end of the spectrum comes to mind: the executive birthday surprise.

It was during the birthday celebration of a VP who shall remain nameless, but it might have been the one who used to keep a canister of nitrous oxide and another of pure oxygen in his office. The nitrous oxide was for getting high and laughing some time away, while the oxygen was used for rapid sobering up in the event a spontaneous meeting was called (which happened regularly at Atari). As the party raged on, a small crew of revellers migrated to the small but accommodating hot tub room. Various intoxicants (well beyond the scope of nitrous) were being consumed in celebration of the special event (although by this standard, nearly every day was a special event at Atari).

As the party rolled on, inhibitions were shed along with numerous articles of clothing. At one point, the birthday boy was adjudged to be in dire need of a proper tubbing as he hadn’t lost sufficient layers to keep pace with the party at large. The birthday boy disagreed, and the ensuing negotiation took the form of a lively chase around the area. The VP ran out of the hot tub room and headed for the workout area with a wet posse in hot pursuit, all in varying stages of undress.

It’s important to note here that although refreshments and revelry were widely available at Atari, one item in short supply was conference rooms. Consequently, meetings could pop up in odd locales. Any place an aggregation could be achieved was a potential meeting spot. The sensitivity of the subject matter would determine the level of privacy required on a case-by-case basis. Since people weren’t always working out, the gym had enough places to sit that it could serve as a decent host for gatherings. And as for sensitivity, the hot tub room was well sound-proofed, so intruding ears weren’t a concern.

As the crew of rowdy revellers followed the VP into the workout area, they were confronted by just such a collection of executives who happened to be meeting at the time. I don’t think the birthday party was on the agenda. However, they may have been pleased that the absentee VP had ultimately decided to join their number. It was embarrassing for some, entertaining for others, and nearly career-ending for a couple. The moral of this story being that Atari executives should never go anywhere without their oxygen tanks in tow.

But morals aside, there was work to be done at Atari. In a place where work can lead to antics and antics can lead to work breakthroughs, it’s difficult at times to suss out the precise boundary between work and antics. It takes passion and commitment to pursue side quests productively and yet remain on task when necessary.

The main reason this was a challenge comes down to the fact there are so many distractions constantly going on. Creative people tend to be creative frequently and spontaneously. Also, their creativity is much more motivated by fascination and interest than it is by task lists or project plans. Fun can break out at any moment, and answering the call isn’t always the right choice, no matter how compelling the siren.

Rob Fulop, creator of Missile Command and Demon Attack for the Atari 2600 (among many other hits) isn’t only a great game maker, he’s also a keen observer of human nature. We used to chat about just where the edge is between work and play at Atari. Those who misjudge it can easily fall off the cliff.

Likewise, we explored the concept of what makes a good game designer. Rob said it’s just the right combination of silly and anal. He believed that the people who did well at Atari (and as game makers in general) were the people who could be silly enough to recognise fun, and anal enough to get all the minutiae and details aligned correctly in order to deliver the fun. Of course, Rob (being the poet he is) created a wonderful phrasing to describe those with the right stuff. He put it like this: the people who did well at Atari were the people who could goof around as much as possible but still go to heaven.

Get your copy of Wireframe issue 53

You can read more features like this one in Wireframe issue 53, available directly from Raspberry Pi Press — we deliver worldwide.

And if you’d like a handy digital version of the magazine, you can also download issue 53 for free in PDF format.

The post Behind the scenes at Atari appeared first on Raspberry Pi.

Comic for 2021.08.24

Post Syndicated from Explosm.net original http://explosm.net/comics/5958/

New Cyanide and Happiness Comic

Convert and Watermark Documents Automatically with Amazon S3 Object Lambda

Post Syndicated from Joseph Simon original https://aws.amazon.com/blogs/architecture/convert-and-watermark-documents-automatically-with-amazon-s3-object-lambda/

When you provide access to a sensitive document to someone outside of your organization, you likely need to ensure that the document is read-only. In this case, your document should be associated with a specific user in case it is shared.

For example, authors often embed user-specific watermarks into their ebooks. This way, if their ebook gets posted to a file-sharing site, they can prevent the purchaser from downloading copies of the ebook in the future.

In this blog post, we provide you with a cost-efficient, scalable, and secure solution for generating user-specific versions of sensitive documents. This solution helps users track who their documents are shared with, which helps prevent fraud and ensures that private information isn’t leaked. Our solution uses a RESTful API, which uses Amazon S3 Object Lambda to convert documents to PDF and apply a watermark based on the requesting user. It also provides a method for authentication and tracks access to the original document.

Architectural overview

S3 Object Lambda processes and transforms data that is requested from Amazon Simple Storage Service (Amazon S3) before it’s sent back to a client. The AWS Lambda function is invoked inline via a standard S3 GET request. It can return different results from the same document based on parameters, such as who is requesting the document. Figure 1 provides a high-level view of the different components that make up the solution.

Authenticating users with Amazon Cognito

This architecture defines a RESTful API, but users will likely be using a mobile or web application that calls the API. Thus, the application will first need to authenticate users. We do this via Amazon Cognito, which functions as its own identity provider (IdP). You could also use an external IdP, including those that support OpenID Connect and SAML.

Validating the JSON Web Token with API Gateway

Once the user is successfully authenticated with Amazon Cognito, the application will be sent a JSON Web Token (JWT). This JWT contains information about the user and will be used in subsequent requests to the API.

Now that the application has a token, it will make a request to the API, which is provided by Amazon API Gateway. API Gateway provides a secure, scalable entryway into your application. The API Gateway validates the JWT sent from the client with Amazon Cognito to make sure it is valid. If it is validated, the request is accepted and sent on to the Lambda API Handler. If it’s not, the client gets rejected and sent an error code.

Storing user data with DynamoDB

When the Lambda API Handler receives the request, it parses the JWT to extract the user making the request. It then logs that user, file, and access time into Amazon DynamoDB. Optionally, you may use DynamoDB to store an encoded string that will be used as the watermark, rather than something in plaintext, like user name or email.
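The step above can be sketched roughly as follows, assuming a Node.js runtime recent enough to support the `base64url` Buffer encoding. The function names and the record's attribute names (`userId`, `fileName`, `accessedAt`) are hypothetical. The sketch reads the standard `sub` claim and only builds the record, leaving the actual DynamoDB write out.

```javascript
// Decodes the payload (second segment) of a JWT without verifying its signature.
// This assumes API Gateway has already validated the token, as described above.
function decodeJwtPayload (token) {
  const segments = token.split(".")
  if (segments.length !== 3) {
    throw new Error("Expected a JWT with three dot-separated segments")
  }
  const json = Buffer.from(segments[1], "base64url").toString("utf8")
  return JSON.parse(json)
}

// Builds the access record to be logged. Attribute names are illustrative only.
function buildAccessRecord (token, fileName, now = new Date()) {
  const claims = decodeJwtPayload(token)
  return {
    userId: claims.sub,
    fileName,
    accessedAt: now.toISOString()
  }
}

// Example with an unsigned, made-up token.
const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString("base64url")
const exampleToken = [encode({ alg: "none" }), encode({ sub: "user-123" }), "sig"].join(".")
console.log(buildAccessRecord(exampleToken, "contract.docx"))
```

Decoding without verification is only reasonable here because validation happens upstream. A handler exposed directly to clients would need to verify the signature itself.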

Generating the PDF and user-specific watermark

At this point, the Lambda API Handler sends an S3 GET request. However, instead of going to Amazon S3 directly, it goes to a different endpoint that invokes the S3 Object Lambda function. This endpoint is called an S3 Object Lambda Access Point. The S3 GET request contains the original file name and the string that will be used for the watermark.

The S3 Object Lambda function transforms the original file that it downloads from its source S3 bucket. It uses the open-source office suite LibreOffice (and specifically this Lambda layer) to convert the source document to PDF. Once it is converted, a JavaScript library (PDF-Lib) embeds the watermark into the PDF before it’s sent back to the Lambda API Handler function.

The Lambda API Handler stores the converted file in a temporary S3 bucket, generates a presigned URL, and sends that URL back to the client as a 302 redirect. Then the client sends a request to that presigned URL to get the converted file.
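A redirect like this could be returned from a Lambda function behind API Gateway's Lambda proxy integration as a plain response object. The sketch below assumes the presigned URL has already been generated elsewhere. The `buildRedirectResponse` name and the example URL are placeholders.

```javascript
// Builds a Lambda proxy integration response that redirects the client
// to the presigned URL of the converted file.
function buildRedirectResponse (presignedUrl) {
  return {
    statusCode: 302,
    headers: { Location: presignedUrl },
    body: ""
  }
}

// Placeholder URL for illustration; a real presigned URL carries signature parameters.
console.log(buildRedirectResponse("https://example-temp-bucket.s3.amazonaws.com/converted.pdf?X-Amz-Signature=placeholder"))
```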

To keep the temporary S3 bucket tidy, we use an S3 lifecycle configuration with an expiration policy.
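One possible shape for such a configuration is shown below, written as the `LifecycleConfiguration` object that the S3 PutBucketLifecycleConfiguration API accepts. The rule ID and the one-day expiration are assumptions, since the post does not state which values it uses.

```javascript
// Builds a lifecycle configuration that expires every object in the bucket
// after the given number of days. The default rule ID is a hypothetical name.
function buildExpirationConfiguration (days, ruleId = "expire-converted-files") {
  if (!Number.isInteger(days) || days <= 0) {
    throw new Error("Expiration days must be a positive integer")
  }
  return {
    Rules: [
      {
        ID: ruleId,
        Status: "Enabled",
        Filter: { Prefix: "" }, // an empty prefix applies the rule to all objects
        Expiration: { Days: days }
      }
    ]
  }
}

console.log(JSON.stringify(buildExpirationConfiguration(1), null, 2))
```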

Alternate approach

Before S3 Object Lambda was available, Lambda@Edge was used. However, there are three main issues with using Lambda@Edge instead of S3 Object Lambda:

- It is designed to run code closer to the end user to decrease latency, but in this case, latency is not a major concern.

- It requires using an Amazon CloudFront distribution, and the single-download pattern described here will not take advantage of Lambda@Edge’s caching.

- It has quotas on memory that don’t lend themselves to complex libraries like LibreOffice.

Extending this solution

This blog post describes the basic building blocks for the solution, but it can be extended relatively easily. For example, you could add another function to the API that would convert, resize, and watermark images. To do this, create an S3 Object Lambda function to perform those tasks. Then, add an S3 Object Lambda Access Point to invoke it based on a different API call.

API Gateway has many built-in security features, but you may want to enhance the security of your RESTful API. To do this, add enhanced security rules via AWS WAF. Integrating your IdP into Amazon Cognito can give you a single place to manage your users.

Monitoring any solution is critical, and understanding how an application is behaving end to end can greatly benefit optimization and troubleshooting. Adding AWS X-Ray and Amazon CloudWatch Lambda Insights will show you how functions and their interactions are performing.

Should you decide to extend this architecture, follow the architectural principles defined in AWS Well-Architected, and pay particular attention to the Serverless Application Lens.

Conclusion

You can implement this solution in a number of ways. However, by using S3 Object Lambda, you can transform documents without needing intermediary storage. S3 Object Lambda will also decouple your file logic from the rest of the application.

The Serverless on AWS components mentioned in this post allow you to reduce administrative overhead, saving you time and money.

Finally, the extensible nature of this architecture allows you to add functionality easily as your organization’s needs grow and change.

The following links provide more information on how to use S3 Object Lambda in your architectures:

The Five Ws episode 1: Accreditation models for secure cloud adoption whitepaper

Post Syndicated from Jana Kay original https://aws.amazon.com/blogs/security/the-five-ws-episode-1-accreditation-models-for-secure-cloud-adoption-whitepaper/

AWS whitepapers are a great way to expand your knowledge of the cloud. Authored by Amazon Web Services (AWS) and the AWS community, they provide in-depth content that often addresses specific customer situations.

We’re featuring some of our whitepapers in a new video series, The Five Ws. These short videos outline the who, what, when, where, and why of each whitepaper so you can decide whether to dig into it further.

The first whitepaper we’re featuring is Accreditation Models for Secure Cloud Adoption. This whitepaper provides cloud accreditation best practices to help you capitalize on the security benefits of commercial cloud computing while maximizing efficiency, scalability, and cost reduction. The paper includes a comparative analysis of different accreditation models in use today. Although the paper highlights public sector examples, the best practices also apply to private sector organizations considering cloud adoption.

If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.

Build Next-Generation Microservices with .NET 5 and gRPC on AWS

Post Syndicated from Matt Cline original https://aws.amazon.com/blogs/devops/next-generation-microservices-dotnet-grpc/

Modern architectures use multiple microservices in conjunction to drive customer experiences. At re:Invent 2015, AWS senior project manager Rob Brigham described Amazon’s architecture of many single-purpose microservices – including ones that render the “Buy” button, calculate tax at checkout, and hundreds more.

Microservices commonly communicate with JSON over HTTP/1.1. These technologies are ubiquitous and human-readable, but they aren’t optimized for communication between dozens or hundreds of microservices.

Next-generation Web technologies, including gRPC and HTTP/2, significantly improve communication speed and efficiency between microservices. AWS offers the most compelling experience for builders implementing microservices. Moreover, the addition of HTTP/2 and gRPC support in Application Load Balancer (ALB) provides an end-to-end solution for next-generation microservices. ALBs can inspect and route gRPC calls, enabling features like health checks, access logs, and gRPC-specific metrics.

This post demonstrates .NET microservices communicating with gRPC via Application Load Balancers. The microservices run on AWS Graviton2 instances, utilizing a custom-built 64-bit Arm processor to deliver up to 40% better price/performance than x86.

Architecture Overview

Modern Tacos is a new restaurant offering delivery. Customers place orders via mobile app, then they receive real-time status updates as their order is prepared and delivered.

The tutorial includes two microservices: “Submit Order” and “Track Order”. The Submit Order service receives orders from the app, then it calls the Track Order service to initiate order tracking. The Track Order service provides streaming updates to the app as the order is prepared and delivered.

Each microservice is deployed in an Amazon EC2 Auto Scaling group. Each group is behind an ALB that routes gRPC traffic to instances in the group.

Microservices are also commonly deployed in containers for elastic scaling, improved reliability, and efficient resource utilization. ALB, gRPC, and .NET all work equally effectively in these architectures.

Comparing gRPC and JSON for microservices

Microservices typically communicate by sending JSON data over HTTP. As a text-based format, JSON is readable, flexible, and widely compatible. However, JSON also has significant weaknesses as a data interchange format. JSON’s flexibility makes enforcing a strict API specification difficult — clients can send arbitrary or invalid data, so developers must write rigorous data validation code. Additionally, performance can suffer at scale due to JSON’s relatively high bandwidth and parsing requirements. These factors also impact performance in constrained environments, such as smartphones and IoT devices. gRPC addresses all of these issues.

gRPC is an open-source framework designed to efficiently connect services. Instead of JSON, gRPC sends messages via a compact binary format called Protocol Buffers, or protobuf. Although protobuf messages are not human-readable, they utilize less network bandwidth and are faster to encode and decode. Operating at scale, these small differences multiply to a significant performance gain.
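
To make the size difference concrete, the sketch below hand-encodes a single integer field the way protobuf's wire format does (a key byte followed by a base-128 varint) and compares it with the equivalent JSON. It is written in Python for brevity, and the field name orderId and field number 1 are assumptions for illustration. Real services would use generated protobuf code rather than a hand-rolled encoder.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F              # take the lowest 7 bits
        value >>= 7
        if value:
            out.append(low7 | 0x80)      # continuation bit set: more bytes follow
        else:
            out.append(low7)             # last byte: continuation bit clear
            return bytes(out)


def encode_uint_field(field_number: int, value: int) -> bytes:
    """Encode one varint field: key (field number and wire type 0), then the value."""
    key = (field_number << 3) | 0        # wire type 0 means varint
    return encode_varint(key) + encode_varint(value)


binary = encode_uint_field(1, 150)                        # hypothetical field 1
text = json.dumps({"orderId": 150}).encode("utf-8")       # hypothetical JSON shape
print(f"protobuf: {len(binary)} bytes, JSON: {len(text)} bytes")
```

The binary form omits the field name entirely; both sides recover it from the shared protobuf specification.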

gRPC APIs define a strict contract that is automatically enforced for all messages. Based on this contract, gRPC implementations generate client and server code libraries in multiple programming languages. This allows developers to use higher-level constructs to call services, rather than programming against “raw” HTTP requests.

gRPC also benefits from being built on HTTP/2, a major revision of the HTTP protocol. In addition to the foundational performance and efficiency improvements from HTTP/2, gRPC utilizes the new protocol to support bi-directional streaming data. Implementing real-time streaming prior to gRPC typically required a completely separate protocol (such as WebSockets) that might not be supported by every client.

gRPC for .NET developers

Several recent updates have made gRPC more useful to .NET developers. .NET 5 includes significant performance improvements to gRPC, and AWS has broad support for .NET 5. In May 2021, the .NET team announced their focus on a gRPC implementation written entirely in C#, called “grpc-dotnet”, which follows C# conventions very closely.

Instead of working with JSON, dynamic objects, or strings, C# developers calling a gRPC service use a strongly-typed client, automatically generated from the protobuf specification. This obviates much of the boilerplate validation required by JSON APIs, and it enables developers to use rich data structures. Additionally, the generated code enables full IntelliSense support in Visual Studio.

For example, the “Submit Order” microservice executes this code in order to call the “Track Order” microservice:

using var channel = GrpcChannel.ForAddress("https://track-order.example.com");

var trackOrderClient = new TrackOrder.Protos.TrackOrder.TrackOrderClient(channel);

var reply = await trackOrderClient.StartTrackingOrderAsync(new TrackOrder.Protos.Order

{

DeliverTo = "Address",

LastUpdated = Timestamp.FromDateTime(DateTime.UtcNow),

OrderId = order.OrderId,

PlacedOn = order.PlacedOn,

Status = TrackOrder.Protos.OrderStatus.Placed

});

This code calls the StartTrackingOrderAsync method on the Track Order client, which looks just like a local method call. The method intakes a data structure that supports rich data types like DateTime and enumerations, instead of the loosely-typed JSON. The methods and data structures are defined by the Track Order service’s protobuf specification, and the .NET gRPC tools automatically generate the client and data structure classes without requiring any developer effort.

Configuring ALB for gRPC

To make gRPC calls to targets behind an ALB, create a load balancer target group and select gRPC as the protocol version. You can do this through the AWS Management Console, AWS Command Line Interface (CLI), AWS CloudFormation, or AWS Cloud Development Kit (CDK).

This CDK code creates a gRPC target group:

var targetGroup = new ApplicationTargetGroup(this, "TargetGroup", new ApplicationTargetGroupProps

{

Protocol = ApplicationProtocol.HTTPS,

ProtocolVersion = ApplicationProtocolVersion.GRPC,

Vpc = vpc,

Targets = new IApplicationLoadBalancerTarget {...}

});

gRPC requests work with target groups utilizing HTTP/2, but the gRPC protocol enables additional features including health checks, request count metrics, access logs that differentiate gRPC requests, and gRPC-specific response headers. gRPC also works with native ALB features like stickiness, multiple load balancing algorithms, and TLS termination.

Deploy the Tutorial

The sample provisions AWS resources via the AWS Cloud Development Kit (CDK). The CDK code is provided in C# so that .NET developers can use a familiar language.

The solution deployment steps include:

- Configuring a domain name in Route 53.

- Deploying the microservices.

- Running the mobile app on AWS Device Farm.

The source code is available on GitHub.

Prerequisites

For this tutorial, you should have these prerequisites:

- Sign up for an AWS account.

- Complete the AWS CDK Getting Started guide.

- Install the AWS CLI and set up your AWS credentials for command-line use – or, you can use the AWS Tools for PowerShell and set up your AWS credentials for PowerShell.

- Create a public hosted zone in Amazon Route 53 for a domain name that you control. This will be the “parent” domain name for the microservices.

- Install Visual Studio 2019.

- Clone the GitHub repository to your computer.

- Open a terminal (such as Bash) or a PowerShell prompt.

Configure the environment variables needed by the CDK. In the sample commands below, replace AWS_ACCOUNT_ID with your numeric AWS account ID. Replace AWS_REGION with the name of the region where you will deploy the sample, such as us-east-1 or us-west-2.

If you’re using a *nix shell such as Bash, run these commands:

export CDK_DEFAULT_ACCOUNT=AWS_ACCOUNT_ID

export CDK_DEFAULT_REGION=AWS_REGION

If you’re using PowerShell, run these commands:

$Env:CDK_DEFAULT_ACCOUNT="AWS_ACCOUNT_ID"

$Env:CDK_DEFAULT_REGION="AWS_REGION"

Set-DefaultAWSRegion -Region AWS_REGION

Throughout this tutorial, replace RED TEXT with the appropriate value.

Save the directory path where you cloned the GitHub repository. In the sample commands below, replace EXAMPLE_DIRECTORY with this path.

In your terminal or PowerShell, run these commands:

The CDK output includes the name of the S3 bucket that will store deployment packages. Save the name of this bucket. In the sample commands below, replace SHARED_BUCKET_NAME with this name.

Deploy the Track Order microservice

Compile the Track Order microservice for the Arm microarchitecture utilized by AWS Graviton2 processors. The TrackOrder.csproj file includes a target that automatically packages the compiled microservice into a ZIP file. You will upload this ZIP file to S3 for use by CodeDeploy. Next, you will utilize the CDK to deploy the microservice’s AWS infrastructure, and then install the microservice on the EC2 instance via CodeDeploy.

The CDK stack deploys these resources:

- An Amazon EC2 Auto Scaling group.

- An Application Load Balancer (ALB) using gRPC, targeting the Auto Scaling group and configured with microservice health checks.

- A subdomain for the microservice, targeting the ALB.

- A DynamoDB table used by the microservice.

- CodeDeploy infrastructure to deploy the microservice to the Auto Scaling group.

If you’re using the AWS CLI, run these commands:

cd EXAMPLE_DIRECTORY/src/ModernTacoShop/TrackOrder/src/

dotnet publish --runtime linux-arm64 --self-contained

aws s3 cp ./bin/TrackOrder.zip s3://SHARED_BUCKET_NAME

etag=$(aws s3api head-object --bucket SHARED_BUCKET_NAME \

--key TrackOrder.zip --query ETag --output text)

cd ../cdk

cdk deploy

The CDK output includes the name of the CodeDeploy deployment group. Use this name to run the next command:

aws deploy create-deployment --application-name ModernTacoShop-TrackOrder \

--deployment-group-name TRACK_ORDER_DEPLOYMENT_GROUP_NAME \

--s3-location bucket=SHARED_BUCKET_NAME,bundleType=zip,key=TrackOrder.zip,etag=$etag \

--file-exists-behavior OVERWRITE

If you’re using PowerShell, run these commands:

cd EXAMPLE_DIRECTORY/src/ModernTacoShop/TrackOrder/src/

dotnet publish --runtime linux-arm64 --self-contained

Write-S3Object -BucketName SHARED_BUCKET_NAME `

-Key TrackOrder.zip `

-File ./bin/TrackOrder.zip

Get-S3ObjectMetadata -BucketName SHARED_BUCKET_NAME `

-Key TrackOrder.zip `

-Select ETag `

-OutVariable etag

cd ../cdk

cdk deploy

The CDK output includes the name of the CodeDeploy deployment group. Use this name to run the next command:

New-CDDeployment -ApplicationName ModernTacoShop-TrackOrder `

-DeploymentGroupName TRACK_ORDER_DEPLOYMENT_GROUP_NAME `

-S3Location_Bucket SHARED_BUCKET_NAME `

-S3Location_BundleType zip `

-S3Location_Key TrackOrder.zip `

-S3Location_ETag $etag[0] `

-RevisionType S3 `

-FileExistsBehavior OVERWRITE

Deploy the Submit Order microservice

The steps to deploy the Submit Order microservice are identical to the Track Order microservice. See that section for details.

If you’re using the AWS CLI, run these commands:

cd EXAMPLE_DIRECTORY/src/ModernTacoShop/SubmitOrder/src/

dotnet publish --runtime linux-arm64 --self-contained

aws s3 cp ./bin/SubmitOrder.zip s3://SHARED_BUCKET_NAME

etag=$(aws s3api head-object --bucket SHARED_BUCKET_NAME \

--key SubmitOrder.zip --query ETag --output text)

cd ../cdk

cdk deploy

The CDK output includes the name of the CodeDeploy deployment group. Use this name to run the next command:

aws deploy create-deployment --application-name ModernTacoShop-SubmitOrder \

--deployment-group-name SUBMIT_ORDER_DEPLOYMENT_GROUP_NAME \

--s3-location bucket=SHARED_BUCKET_NAME,bundleType=zip,key=SubmitOrder.zip,etag=$etag \

--file-exists-behavior OVERWRITE

If you’re using PowerShell, run these commands:

cd EXAMPLE_DIRECTORY/src/ModernTacoShop/SubmitOrder/src/

dotnet publish --runtime linux-arm64 --self-contained

Write-S3Object -BucketName SHARED_BUCKET_NAME `

-Key SubmitOrder.zip `

-File ./bin/SubmitOrder.zip

Get-S3ObjectMetadata -BucketName SHARED_BUCKET_NAME `

-Key SubmitOrder.zip `

-Select ETag `

-OutVariable etag

cd ../cdk

cdk deploy

The CDK output includes the name of the CodeDeploy deployment group. Use this name to run the next command:

New-CDDeployment -ApplicationName ModernTacoShop-SubmitOrder `

-DeploymentGroupName SUBMIT_ORDER_DEPLOYMENT_GROUP_NAME `

-S3Location_Bucket SHARED_BUCKET_NAME `

-S3Location_BundleType zip `

-S3Location_Key SubmitOrder.zip `

-S3Location_ETag $etag[0] `

-RevisionType S3 `

-FileExistsBehavior OVERWRITE

Data flow diagram

- The app submits an order via gRPC.

- The Submit Order ALB routes the gRPC call to an instance.

- The Submit Order instance stores order data.

- The Submit Order instance calls the Track Order service via gRPC.

- The Track Order ALB routes the gRPC call to an instance.

- The Track Order instance stores tracking data.

- The app calls the Track Order service, which streams the order’s location during delivery.

Test the microservices

Once the CodeDeploy deployments have completed, test both microservices.

First, check the load balancers’ status. Go to Target Groups in the AWS Management Console, which will list one target group for each microservice. Click each target group, then click “Targets” in the lower details pane. Every EC2 instance in the target group should have a “healthy” status.

Next, verify each microservice via gRPCurl. This tool lets you invoke gRPC services from the command line. Install gRPCurl using the instructions, and then test each microservice:

If a service is healthy, it will return an empty JSON object.

Run the mobile app

You will run a pre-compiled version of the app on AWS Device Farm, which lets you test on a real device without managing any infrastructure. Alternatively, compile your own version via the AndroidApp.FrontEnd project within the solution located at EXAMPLE_DIRECTORY/src/ModernTacoShop/AndroidApp/AndroidApp.sln.

Go to Device Farm in the AWS Management Console. Under “Mobile device testing projects”, click “Create a new project”. Enter “ModernTacoShop” as the project name, and click “Create Project”. In the ModernTacoShop project, click the “Remote access” tab, then click “Start a new session”. Under “Choose a device”, select the Google Pixel 3a running OS version 10, and click “Confirm and start session”.

Once the session begins, click “Upload” in the “Install applications” section. Unzip and upload the APK file located at EXAMPLE_DIRECTORY/src/ModernTacoShop/AndroidApp/com.example.modern_tacos.grpc_tacos.apk.zip, or upload an APK that you created.

Once the app has uploaded, drag up from the bottom of the device screen in order to reach the “All apps” screen. Click the ModernTacos app to launch it.

Once the app launches, enter the parent domain name in the “Domain Name” field. Click the “+” and “-” buttons next to each type of taco in order to create your order, then click “Submit Order”. The order status will initially display as “Preparing”, and will switch to “InTransit” after about 30 seconds. The Track Order service will stream a random route to the app, updating with new position data every 5 seconds. After approximately 2 minutes, the order status will change to “Delivered” and the streaming updates will stop.

Once you’ve run a successful test, click “Stop session” in the console.

Cleaning up

To avoid incurring charges, use the cdk destroy command to delete the stacks in the reverse order that you deployed them.

You can also delete the resources via CloudFormation in the AWS Management Console.

In addition to deleting the stacks, you must delete the Route 53 hosted zone and the Device Farm project.

Conclusion

This post demonstrated multiple next-generation technologies for microservices, including end-to-end HTTP/2 and gRPC communication over Application Load Balancer, AWS Graviton2 processors, and .NET 5. These technologies enable builders to create microservices applications with new levels of performance and efficiency.

Matt Cline

Matt Cline is a Solutions Architect at Amazon Web Services, supporting customers in his home city of Pittsburgh PA. With a background as a full-stack developer and architect, Matt is passionate about helping customers deliver top-quality applications on AWS. Outside of work, Matt builds (and occasionally finishes) scale models and enjoys running a tabletop role-playing game for his friends.

Ulili Nhaga

Ulili Nhaga is a Cloud Application Architect at Amazon Web Services in San Diego, California. He helps customers modernize, architect, and build highly scalable cloud-native applications on AWS. Outside of work, Ulili loves playing soccer, cycling, Brazilian BBQ, and enjoying time on the beach.

How MEDHOST’s cardiac risk prediction successfully leveraged AWS analytic services

Post Syndicated from Pandian Velayutham original https://aws.amazon.com/blogs/big-data/how-medhosts-cardiac-risk-prediction-successfully-leveraged-aws-analytic-services/

MEDHOST has been providing products and services to healthcare facilities of all types and sizes for over 35 years. Today, more than 1,000 healthcare facilities are partnering with MEDHOST and enhancing their patient care and operational excellence with its integrated clinical and financial EHR solutions. MEDHOST also offers a comprehensive Emergency Department Information System with business and reporting tools. Since 2013, MEDHOST’s cloud solutions have been utilizing Amazon Web Services (AWS) infrastructure, data source, and computing power to solve complex healthcare business cases.

MEDHOST can utilize the data available in the cloud to provide value-added solutions for hospitals solving complex problems, like predicting sepsis, cardiac risk, and length of stay (LOS) as well as reducing re-admission rates. This requires a solid foundation of data lake and elastic data pipeline to keep up with multi-terabyte data from thousands of hospitals. MEDHOST has invested a significant amount of time evaluating numerous vendors to determine the best solution for its data needs. Ultimately, MEDHOST designed and implemented machine learning/artificial intelligence capabilities by leveraging AWS Data Lab and an end-to-end data lake platform that enables a variety of use cases such as data warehousing for analytics and reporting.

Getting started

MEDHOST’s initial objectives in evaluating vendors were to:

- Build a low-cost data lake solution to provide cardiac risk prediction for patients based on health records

- Provide an analytical solution for hospital staff to improve operational efficiency

- Implement a proof of concept to extend to other machine learning/artificial intelligence solutions

The AWS team proposed AWS Data Lab to architect, develop, and test a solution to meet these objectives. The collaborative relationship between AWS and MEDHOST, AWS’s continuous innovation, excellent support, and technical solution architects helped MEDHOST select AWS over other vendors and products. AWS Data Lab’s well-structured engagement helped MEDHOST define clear, measurable success criteria that drove the implementation of the cardiac risk prediction and analytical solution platform. The MEDHOST team consisted of architects, builders, and subject matter experts (SMEs). By connecting MEDHOST experts directly to AWS technical experts, the MEDHOST team gained a quick understanding of industry best practices and available services, which allowed the team to achieve most of the success criteria by the end of a four-day design session. MEDHOST is now in the process of moving this work from its lower to upper environment to make the solution available for its customers.

Solution

For this solution, MEDHOST and AWS built a layered pipeline consisting of ingestion, processing, storage, analytics, machine learning, and reinforcement components. The following diagram illustrates the Proof of Concept (POC) that was implemented during the four-day AWS Data Lab engagement.

Ingestion layer

The ingestion layer is responsible for moving data from hospital production databases to the landing zone of the pipeline.

The hospital data was stored in an Amazon RDS for PostgreSQL instance and moved to the landing zone of the data lake using AWS Database Migration Service (DMS). DMS made migrating databases to the cloud simple and secure. Using its ongoing replication feature, MEDHOST and AWS implemented change data capture (CDC) quickly and efficiently so the MEDHOST team could spend more time focusing on the most interesting parts of the pipeline.

Processing layer

The processing layer was responsible for performing extract, transform, load (ETL) on the data to curate it for subsequent uses.

MEDHOST used AWS Glue within its data pipeline for crawling its data layers and performing ETL tasks. The hospital data copied from RDS to Amazon S3 was cleaned, curated, enriched, denormalized, and stored in parquet format to act as the heart of the MEDHOST data lake and a single source of truth to serve any further data needs. During the four-day Data Lab, MEDHOST and AWS targeted two needs: powering MEDHOST’s data warehouse used for analytics and feeding training data to the machine learning prediction model. Data curation presented multiple challenges, and it is a critical task that requires an SME. AWS Glue’s serverless nature, along with the SME’s support during the Data Lab, made developing the required transformations cost efficient and uncomplicated. The service handled scaling and cluster management, which allowed the developers to focus on cleaning data coming from homogenous hospital sources and translating the business logic to code.

Storage layer

The storage layer provided low-cost, secure, and efficient storage infrastructure.

MEDHOST used Amazon S3 as a core component of its data lake. AWS DMS migration tasks saved data to S3 in .CSV format. Crawling data with AWS Glue made this landing zone data queryable and available for further processing. The initial AWS Glue ETL job stored the parquet formatted data to the data lake and its curated zone bucket. MEDHOST also used S3 to store the .CSV formatted data set that will be used to train, test, and validate its machine learning prediction model.

Analytics layer

The analytics layer gave MEDHOST pipeline reporting and dashboarding capabilities.

The data was in parquet format and partitioned in the curation zone bucket populated by the processing layer. This made querying with Amazon Athena or Amazon Redshift Spectrum fast and cost efficient.

From the Amazon Redshift cluster, MEDHOST created external tables that were used as staging tables for the MEDHOST data warehouse and implemented UPSERT logic to merge new data into its production tables. To showcase the reporting potential that was unlocked by the MEDHOST analytics layer, the Redshift cluster was connected to Amazon QuickSight. Within minutes, MEDHOST was able to create interactive analytics dashboards with filtering and drill-down capabilities, such as a chart that showed the number of confirmed disease cases per US state.
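
The post does not show the merge SQL, so the following is only a sketch of one common UPSERT pattern: delete the production rows that have a newer version in staging, then insert everything from staging, all inside one transaction. SQLite stands in for Amazon Redshift so that the example runs anywhere, and the table and column names (production, staging, patient_id, risk) are hypothetical.

```python
import sqlite3


def merge_staging(conn: sqlite3.Connection) -> None:
    """Merge staging rows into production using delete-then-insert."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            "DELETE FROM production "
            "WHERE patient_id IN (SELECT patient_id FROM staging)"
        )
        conn.execute("INSERT INTO production SELECT * FROM staging")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE production (patient_id INTEGER PRIMARY KEY, risk REAL)")
conn.execute("CREATE TABLE staging (patient_id INTEGER PRIMARY KEY, risk REAL)")
conn.executemany("INSERT INTO production VALUES (?, ?)", [(1, 0.10), (2, 0.20)])
conn.executemany("INSERT INTO staging VALUES (?, ?)", [(2, 0.25), (3, 0.30)])

merge_staging(conn)
for row in conn.execute("SELECT * FROM production ORDER BY patient_id"):
    print(row)
```

Row 1 is untouched, row 2 is replaced by its staged version, and row 3 is new. Running both statements in a single transaction means readers never see production with the old rows deleted but the new ones not yet inserted.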

Machine learning layer

The machine learning layer used MEDHOST’s existing data sets to train its cardiac risk prediction model and make it accessible via an endpoint.

Before getting into Data Lab, the MEDHOST team was not intimately familiar with machine learning. AWS Data Lab architects helped MEDHOST quickly understand concepts of machine learning and select a model appropriate for its use case. MEDHOST selected XGBoost as its model since cardiac prediction falls within regression techniques. MEDHOST’s well-architected data lake enabled it to quickly generate training, testing, and validation data sets using AWS Glue.

Amazon SageMaker abstracted the underlying complexity of setting up infrastructure for machine learning. With a few clicks, MEDHOST started a Jupyter notebook and coded the components leading to fitting and deploying its machine learning prediction model. Finally, MEDHOST created the endpoint for the model and ran REST calls to validate the endpoint and trained model. As a result, MEDHOST achieved the goal of predicting cardiac risk. Additionally, with Amazon QuickSight’s SageMaker integration, AWS made it easy to use SageMaker models directly in visualizations. QuickSight can call the model’s endpoint, send the input data to it, and put the inference results into the existing QuickSight data sets. This capability made it easy to display the results of the models directly in the dashboards. Read more about QuickSight’s SageMaker integration here.

Reinforcement layer

Finally, the reinforcement layer guaranteed that the results of the MEDHOST model were captured and processed to improve performance of the model.

The MEDHOST team went beyond the original goal and created an inference microservice to interact with the endpoint for prediction, enabled abstracting of the machine learning endpoint with the well-defined domain REST endpoint, and added a standard security layer to the MEDHOST application.

When there is a real-time call from the facility, the inference microservice gets inference from the SageMaker endpoint. Records containing input and inference data are fed to the data pipeline again. MEDHOST used Amazon Kinesis Data Streams to push records in real time. However, since retraining the machine learning model does not need to happen in real time, the Amazon Kinesis Data Firehose enabled MEDHOST to micro-batch records and efficiently save them to the landing zone bucket so that the data could be reprocessed.
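
The idea of micro-batching can be illustrated with a small buffer that collects records and hands them off in groups. This is a conceptual Python sketch, not how Kinesis Data Firehose is implemented: the class name MicroBatcher and its thresholds are hypothetical, and a managed delivery stream also flushes on a time interval, which is omitted here for brevity.

```python
from typing import Callable, List


class MicroBatcher:
    """Buffer records and flush them as one batch when a threshold is reached."""

    def __init__(
        self,
        flush: Callable[[List[bytes]], None],
        max_records: int = 3,
        max_bytes: int = 1024,
    ) -> None:
        self._flush = flush
        self._max_records = max_records
        self._max_bytes = max_bytes
        self._buffer: List[bytes] = []
        self._size = 0

    def add(self, record: bytes) -> None:
        self._buffer.append(record)
        self._size += len(record)
        # Flush when either the record count or the byte size limit is hit.
        if len(self._buffer) >= self._max_records or self._size >= self._max_bytes:
            self.flush()

    def flush(self) -> None:
        if self._buffer:
            self._flush(self._buffer)
            self._buffer = []  # start a fresh list; the flushed batch is not reused
            self._size = 0


batches: List[List[bytes]] = []
batcher = MicroBatcher(batches.append, max_records=3)
for i in range(7):
    batcher.add(f"record-{i}".encode())
batcher.flush()  # deliver whatever is left in the buffer
print([len(batch) for batch in batches])
```

Writing a few larger objects to the landing zone instead of one object per inference keeps the number of files that the ETL jobs must crawl and read manageable.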

Conclusion

Collaborating with AWS Data Lab enabled MEDHOST to:

- Store single source of truth with low-cost storage solution (data lake)

- Complete data pipeline for a low-cost data analytics solution

- Create almost production-ready code for cardiac risk prediction

The MEDHOST team learned many concepts related to data analytics and machine learning within four days. AWS Data Lab truly helped MEDHOST deliver results in an accelerated manner.

About the Authors

Pandian Velayutham is the Director of Engineering at MEDHOST. His team is responsible for delivering cloud solutions, integration and interoperability, and business analytics solutions. MEDHOST utilizes a modern technology stack to provide innovative solutions to its customers. Pandian Velayutham is a technology evangelist and public cloud technology speaker.

George Komninos is a Data Lab Solutions Architect at AWS. He helps customers convert their ideas to a production-ready data product. Before AWS, he spent 3 years at Alexa Information domain as a data engineer. Outside of work, George is a football fan and supports the greatest team in the world, Olympiacos Piraeus.

Queue Integration with Third-party Services on AWS

Post Syndicated from Rostislav Markov original https://aws.amazon.com/blogs/architecture/queue-integration-with-third-party-services-on-aws/

Commercial off-the-shelf software and third-party services can present an integration challenge in event-driven workflows when they do not natively support AWS APIs. This is even more impactful when a workflow is subject to unpredicted usage spikes, and you want to increase decoupling and fault tolerance. Given the third-party nature of services, polling an Amazon Simple Queue Service (SQS) queue and having built-in AWS API handling logic may not be an immediate option.

In such cases, AWS Lambda helps out-task the Amazon SQS queue integration and AWS API handling to an additional layer. The success of this depends on how well exception handling is implemented across the different interacting services. In this blog post, we outline issues to consider when adopting this design pattern. We also share a reusable solution.

Design pattern for third-party integration with SQS

With this design pattern, one or more services (producers) asynchronously invoke other third-party downstream consumer services. They publish messages to an Amazon SQS queue, which acts as buffer for requests. Producers provide all commands and other parameters required for consumer service execution with the message.

As messages are written to the queue, the queue is configured to invoke a message broker (implemented as AWS Lambda) for each message. AWS Lambda can interact natively with target AWS services such as Amazon EC2, Amazon Elastic Container Service (ECS), or Amazon Elastic Kubernetes Service (EKS). It can also be configured to use an Amazon Virtual Private Cloud (VPC) interface endpoint to establish a connection to VPC resources without traversing the internet. The message broker assigns the tasks to consumer services by invoking the RunTask API of Amazon ECS and AWS Fargate (see Figure 1.)
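
A message broker of this kind can be sketched as a Lambda handler that walks the records in the SQS event and starts one task per message. The sketch below is in Python and makes several assumptions: the message body is JSON, the factory name make_handler is hypothetical, and run_task is an injected callable standing in for whatever wraps the Amazon ECS RunTask call.

```python
import json
from typing import Any, Callable, Dict, List


def make_handler(run_task: Callable[[Dict[str, Any]], None]):
    """Create a Lambda handler that starts one consumer task per SQS message."""

    def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, int]:
        started = 0
        for record in event.get("Records", []):
            params = json.loads(record["body"])  # commands and parameters from the producer
            # Exceptions are deliberately not caught here: a failed invocation
            # leaves the message in the queue so that it can be retried.
            run_task(params)
            started += 1
        return {"started": started}

    return handler


# Local demonstration with a stand-in for the RunTask wrapper.
launched: List[Dict[str, Any]] = []
handler = make_handler(launched.append)
sample_event = {
    "Records": [
        {"messageId": "1", "body": json.dumps({"command": ["analyze", "plate-1"]})}
    ]
}
print(handler(sample_event), launched)
```

Injecting run_task keeps the queue-handling logic testable without AWS credentials and leaves the choice of SDK call to the deployment.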

Figure 1. On-premises and AWS queue integration for third-party services using AWS Lambda

The message broker asynchronously invokes the API in ‘fire-and-forget’ mode. Therefore, error handling must be built in to respond to API invocation errors. In an event-driven scenario, errors can occur if you asynchronously call the third-party service hundreds or thousands of times and reach Service Quotas. This is a potential issue with RunTask API actions, or a large volume of concurrent tasks running on AWS Fargate. Two mechanisms can help handle API request errors.

- API retries with exponential backoff. The message broker retries a configurable number of times, with configurable sleep intervals and exponential backoff in between. This enforces progressively longer waits between retries for consecutive error responses. If the RunTask API fails to process the request and initiate the third-party service, the message remains in the queue for a subsequent retry. The AWS General Reference provides further guidance.

- API error handling. Error handling and consequent logging should be implemented at every step. Because several services work in tandem, crucial debugging information from errors may otherwise be lost. Error handling also provides an opportunity to define automated corrective actions or notifications when an event occurs. The message broker can publish failure notifications, including the root cause, to an Amazon Simple Notification Service (SNS) topic.

SNS topic subscription can be configured via different protocols. You can email a distribution group for active monitoring and processing of errors. If persistence is required for messages that failed to process, error handling can be associated directly with SQS by configuring a dead letter queue.
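The retry mechanism can be sketched in a few lines of Python. This is a minimal example rather than the broker's actual code: the function name, the default limits, and the on_give_up hook are assumptions for illustration. The sleep function is injectable so the waits can be observed in a test, and no random jitter is applied, although production code often adds it.

```python
import time


def call_with_backoff(operation, max_attempts=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep, on_give_up=None):
    """Call operation(), retrying on any exception with exponential backoff.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... (capped at max_delay)
    between attempts. After the last failed attempt, on_give_up(error) is
    called if provided, and the error is re-raised.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as error:
            if attempt == max_attempts:
                if on_give_up is not None:
                    on_give_up(error)  # e.g. publish a failure notification
                raise
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay)
```

The on_give_up hook is where a failure notification would fit, for example a function that calls the SNS publish operation with the topic ARN and the error text as the message.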

Reference implementation for third-party integration with SQS

We implemented the design pattern in Figure 1, with Broad Institute’s Cell Painting application workflow. This is for morphological profiling from microscopy cell images running on Amazon EC2. It interacts with CellProfiler version 3.0 cell image analysis software as the downstream consumer hosted on ECS/Fargate. Every invocation of CellProfiler required approximately 1,500 tasks for a single processing step.

Resource constraints determined the rate of scale-out. In this case, it was for an Amazon ECS task creation. Address space for Amazon ECS subnets should be large enough to prevent running out of available IPs within your VPC. If Amazon ECS Service Quotas provide further constraints, a quota increase can be requested.

Exceptions must be handled both when validating and initiating requests. As part of the validation workflow, exceptions are captured as follows, also shown in Figure 2.

1. Invalid arguments exception. The message broker validates that the SQS message contains all the information needed to initiate the ECS task. This information includes the subnets, security groups, and container names required to start the ECS task. If any of it is missing, the broker raises an exception.

2. Retry limit exception. On each iteration, the message broker evaluates whether the SQS retry limit has been reached before invoking the RunTask API. When the retry limit is reached, it sends a failure notification to SNS and exits.

Figure 2. Exception handling flow during request validation
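The two validation checks could look roughly like the following Python sketch. The exception class names, the required field names, and the default retry limit are assumptions for this example. The receive count is read from the ApproximateReceiveCount attribute that SQS includes with each record delivered to Lambda.

```python
import json

# Hypothetical field names that a producer is expected to supply.
REQUIRED_FIELDS = ("subnets", "security_groups", "container_name")


class InvalidArgumentsError(ValueError):
    """The SQS message lacks information needed to start the ECS task."""


class RetryLimitReachedError(RuntimeError):
    """The message has been received more often than the retry limit allows."""


def validate_record(record, retry_limit=3):
    """Return the parsed message parameters, or raise one of the errors above."""
    try:
        params = json.loads(record["body"])
    except json.JSONDecodeError as error:
        raise InvalidArgumentsError("message body is not valid JSON") from error
    if not isinstance(params, dict):
        raise InvalidArgumentsError("message body must be a JSON object")
    missing = [field for field in REQUIRED_FIELDS if not params.get(field)]
    if missing:
        raise InvalidArgumentsError("missing fields: " + ", ".join(missing))

    # SQS reports how many times this message has been received so far.
    receive_count = int(record["attributes"]["ApproximateReceiveCount"])
    if receive_count > retry_limit:
        raise RetryLimitReachedError(
            f"received {receive_count} times, limit is {retry_limit}"
        )
    return params
```

A caller would catch RetryLimitReachedError, publish the failure notification, and return normally so that the message is not retried again.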

As part of the initiation workflow, exceptions are handled as follows, shown in Figure 3:

1. ECS/Fargate API and concurrent execution limitations. The message broker catches API exceptions when calling the API RunTask operation. These exceptions can include:

- When the call to the launch tasks exceeds the maximum allowed API request limit for your AWS account

- When failing to retrieve security group information

- When you have reached the limit on the number of tasks you can run concurrently

With each of the preceding exceptions, the broker will increase the retry count.

2. Networking and IP space limitations. Network interface timeouts received after initiating the ECS task set off an Amazon CloudWatch Events rule, causing the message broker to re-initiate the ECS task.

Figure 3. Exception handling flow during request initiation

While we specifically address downstream consumer services running on ECS/Fargate, this solution can be adjusted for third-party services running on Amazon EC2 or EKS. With EC2, the message broker must be adjusted to interact with the RunInstances API, and include troubleshooting API request errors. Integration with downstream consumers on Amazon EKS requires that the AWS Lambda function is associated via the IAM role with a Kubernetes service account. A Python client for Kubernetes can be used to simplify interaction with the Kubernetes REST API and AWS Lambda would invoke the run API.

Conclusion

This pattern is useful when queue polling is not an immediate option. This is typical with event-driven workflows involving third-party services and vendor applications subject to unpredictable, intermittent load spikes. Exception handling is essential for these types of workflows. Offloading AWS API handling to a separate layer orchestrated by AWS Lambda can improve the resiliency of such third-party services on AWS. This pattern represents an incremental optimization until the third party provides native SQS integration. It can be achieved with the initial move to AWS, for example as part of the V1 AWS design strategy for third-party services.

Some limitations should be acknowledged. While the pattern enables graceful failure, it does not prevent the overloading of the ECS RunTask API. Because it invokes the Amazon ECS RunTask API in ‘fire-and-forget’ mode, it does not monitor service execution once a task has been successfully started. Therefore, it should be adopted only when direct queue polling is not an option. In our example, Broad Institute’s CellProfiler application enabled direct queue polling with its subsequent product version of Distributed CellProfiler.

Further reading

The referenced deployment with consumer services on Amazon ECS can be accessed via AWSLabs.

Simplify data discovery for business users by adding data descriptions in the AWS Glue Data Catalog

Post Syndicated from Karim Hammouda original https://aws.amazon.com/blogs/big-data/simplify-data-discovery-for-business-users-by-adding-data-descriptions-in-the-aws-glue-data-catalog/

In this post, we discuss how to use the AWS Glue Data Catalog to simplify the process of adding data descriptions, and how to allow data analysts to access, search, and discover this cataloged metadata with BI tools.

In this solution, we use the AWS Glue Data Catalog to break the silos between cross-functional data producer teams, sometimes also known as domain data experts, and business-focused consumer teams that author business intelligence (BI) reports and dashboards.

Data democratization and the need for self-service BI

To be able to extract insights and get value out of organizational-wide data assets, data consumers like data analysts need to understand the meaning of existing data assets. They rely on data platform engineers to perform such data discovery tasks on their behalf.

Although data platform engineers can programmatically extract and obtain some technical and operational metadata, such as database and table names and sizes, column schemas, and keys, this metadata is primarily used for organizing and manipulating data inside the data lake. They still rely on source data domain experts to gain more knowledge about the meaning of the data, its business context, and classification. It becomes more challenging when data domain experts tend to prioritize operational-critical requests and delay the analytical-related ones.

Such a cyclic dependency, as illustrated in the following figure, can delay the organization's strategic vision of implementing a self-service data analytics platform that shortens the data-to-insights process.

Solution overview

The Data Catalog fundamentally holds basic information about the actual data stored in various data sources, including but not limited to Amazon Simple Storage Service (Amazon S3), Amazon Relational Database Service (Amazon RDS), and Amazon Redshift. Information like data location, format, and columns schema can be automatically discovered and stored as tables, where each table specifies a single data store.

Throughout this post, we see how we can use the Data Catalog to make it easy for domain experts to add data descriptions, and for data analysts to access this metadata with BI tools.

First, we use the comment field in Data Catalog schema tables to store data descriptions with more business meaning. Comment fields aren’t like the other schema table fields (such as column name, data type, and partition key), which are typically populated automatically by an AWS Glue crawler.
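To illustrate how a comment field can be populated programmatically, here is a minimal Python sketch that reads a table definition, sets the Comment on selected columns, and writes the definition back. The function name is an assumption for this example, and the client is expected to behave like a boto3 Glue client. Because get_table returns read-only fields that update_table does not accept, the sketch copies only a conservative subset of keys into the table input.

```python
import copy

# Subset of get_table output keys that are also valid in TableInput.
TABLE_INPUT_KEYS = {
    "Name", "Description", "Owner", "Retention", "StorageDescriptor",
    "PartitionKeys", "TableType", "Parameters",
    "ViewOriginalText", "ViewExpandedText",
}


def add_column_comments(glue_client, database, table, comments):
    """Set the Comment field of the columns named in `comments`.

    `comments` maps column name to description text. Returns the TableInput
    that was sent to update_table.
    """
    current = glue_client.get_table(DatabaseName=database, Name=table)["Table"]
    table_input = {
        key: copy.deepcopy(value)
        for key, value in current.items()
        if key in TABLE_INPUT_KEYS
    }
    for column in table_input["StorageDescriptor"]["Columns"]:
        if column["Name"] in comments:
            column["Comment"] = comments[column["Name"]]
    glue_client.update_table(DatabaseName=database, TableInput=table_input)
    return table_input
```

Columns that are not listed in the mapping keep whatever comment they already had, so descriptions entered manually by domain experts are not overwritten.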

We also use Amazon AI/ML capabilities to initially identify the description of each data entity. One way to do that is by using the Amazon Comprehend text analysis API. When we provide a sample of values for each data entity type, Amazon Comprehend natural language processing (NLP) models can identify a standard range of data classification, and we can use this as a description for identified data entities.
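One possible way to turn sample values into a description is sketched below in Python. It is an illustration only, not the code of the AWS Glue jobs in this solution: the function name, the score threshold, and the majority-vote rule are assumptions. The client is expected to behave like a boto3 Comprehend client, whose detect_pii_entities call returns a list of entities with a Type and a Score.

```python
from collections import Counter


def describe_column(comprehend_client, sample_values, min_score=0.8):
    """Return the most frequently detected PII entity type, or None.

    Each sample value is sent to Comprehend separately. Detections below
    min_score are ignored.
    """
    votes = Counter()
    for value in sample_values:
        response = comprehend_client.detect_pii_entities(
            Text=str(value), LanguageCode="en"
        )
        for entity in response["Entities"]:
            if entity["Score"] >= min_score:
                votes[entity["Type"]] += 1
    if not votes:
        return None  # nothing recognized; a custom description is needed
    return votes.most_common(1)[0][0]
```

A None result marks the columns that still need a manually entered description or a custom entity recognizer.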

Next, because we need to identify entities unique to our domain or organization, we can use custom named entity recognition (NER) in Amazon Comprehend to add more metadata that is related to our business domain. One way to train custom NER models is to use Amazon SageMaker Ground Truth; for more information, see Developing NER models with Amazon SageMaker Ground Truth and Amazon Comprehend.

For this post, we use a dataset that has a table schema defined as per TPC-DS, and was generated using a data generator developed as part of AWS Analytics Reference Architecture code samples.

In this example, the Amazon Comprehend API recognizes PII-related fields, such as Aid as a MAC address, while non-PII-related fields like Estatus aren’t recognized. Therefore, the user enters a custom description manually, and we use the custom NER to automatically populate those fields, as shown in the following diagram.

After we add data meanings, we need to expose all the metadata captured in the Data Catalog to various data consumers. This can be done in two different ways:

- Via the AWS Glue Data Catalog API, which is more suitable for technical users authoring ETL jobs programmatically using services like AWS Glue ETL or Amazon EMR

- Via SQL queries against information_schema, for data analysts performing ad hoc SQL querying using services like Amazon Athena

We can also use the latter method to expose the Data Catalog to BI authors building data analyses and dashboards with Amazon QuickSight, so we use the second method for this post.

We do this by defining an Athena dataset that queries the information_schema and allows BI authors to use the QuickSight capability of text search filter to search and discover data using its business meaning (see the following diagram).
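The kind of query behind such a dataset can be sketched in Python as follows. The function names and the selected columns are assumptions for illustration, and the exact SQL used for the QuickSight dataset in this solution may differ. The client is expected to behave like a boto3 Athena client.

```python
def build_catalog_search_query(database):
    """Build a SQL query listing every column and its comment for one database."""
    escaped = database.replace("'", "''")  # escape quotes inside the SQL literal
    return (
        "SELECT table_schema, table_name, column_name, data_type, comment "
        "FROM information_schema.columns "
        f"WHERE table_schema = '{escaped}' "
        "ORDER BY table_name, ordinal_position"
    )


def start_catalog_query(athena_client, database, output_location):
    """Submit the query to Athena and return its query execution ID."""
    response = athena_client.start_query_execution(
        QueryString=build_catalog_search_query(database),
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]
```

Each result row pairs a column with its description, which is what a text search filter needs in order to match on business meaning rather than on column names.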

Solution details

The core part of this solution is done using AWS Glue jobs. We use two AWS Glue jobs, which are responsible for calling Amazon Comprehend APIs and updating the AWS Glue Data Catalog with added data descriptions accordingly.

The first job (Glue_Comprehend_Job) performs the first stage of detection using the Amazon Comprehend Detect PII API, and the second job (Glue_Comprehend_Custom) uses Amazon Comprehend custom entity recognition for entities labeled by domain experts. The following diagram illustrates this workflow.

We describe the details of each stage in the upcoming sections.

You can integrate this workflow into your existing data processing pipeline, which might be orchestrated with AWS services like AWS Step Functions, Amazon Managed Workflows for Apache Airflow (Amazon MWAA), AWS Glue workflows, or any third-party orchestrator.

The workflow can complement AWS Glue crawler functionality and inherit the same logic for scheduling and running crawlers. On the other end, we can query the updated Data Catalog with data descriptions via Athena (see the following diagram).

To show an end-to-end implementation of this solution, we have adopted a choreographically built architecture with additional AWS Lambda helper functions, which communicate between AWS services, triggering the AWS Glue crawler and AWS Glue jobs.

Stage-one: Enrich the Data Catalog with a standard built-in Amazon Comprehend entity detector

To get started, launch the CloudFormation stack.

Define a unique S3 bucket name and, on the CloudFormation console, accept the default values for the remaining parameters.

This CloudFormation stack consists of the following:

- An AWS Identity and Access Management (IAM) role called Lambda-S3-Glue-comprehend.

- An S3 bucket with a bucket name that can be defined based on preference.

- A Lambda function called trigger_data_cataloging. This function is automatically triggered when any CSV file is uploaded to the folder row_data inside our S3 bucket. Then it creates an AWS Glue database if one doesn’t exist, and creates and runs an AWS Glue crawler called glue_crawler_comprehend.

- An AWS Glue job called Glue_Comprehend_Job, which calls Amazon Comprehend APIs and updates the AWS Glue Data Catalog table accordingly.

- A Lambda function called Glue_comprehend_workflow, which is triggered when the AWS Glue crawler successfully finishes and calls the AWS Glue job Glue_Comprehend_Job.
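The first step of a function like trigger_data_cataloging is deciding which uploads should start cataloging. The following Python sketch shows one way to do that from the S3 event notification. It is an assumption about how such a filter might look, not the code deployed by the stack. Object keys in S3 events are URL-encoded, so they are decoded before the check.

```python
from urllib.parse import unquote_plus


def csv_uploads(event, prefix="row_data/"):
    """Return (bucket, key) pairs for CSV objects uploaded under `prefix`."""
    uploads = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        if key.startswith(prefix) and key.lower().endswith(".csv"):
            uploads.append((bucket, key))
    return uploads
```

If the list is non-empty, the function would go on to create the AWS Glue database if needed and start the crawler.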

To test the solution, create a prefix called row_data in the S3 bucket created by the CloudFormation stack, then upload the customer dataset sample to the prefix.

The first Lambda function is triggered to run the subsequent AWS Glue crawler and AWS Glue job to get data descriptions using Amazon Comprehend, and it updates the comment section of the dataset created in the AWS Glue Data Catalog.