Post Syndicated from Benjamin Smith original https://aws.amazon.com/blogs/compute/deploying-state-machines-incrementally-with-versions-and-aliases-in-aws-step-functions/

This post is written by Peter Smith, Principal Engineer for AWS Step Functions

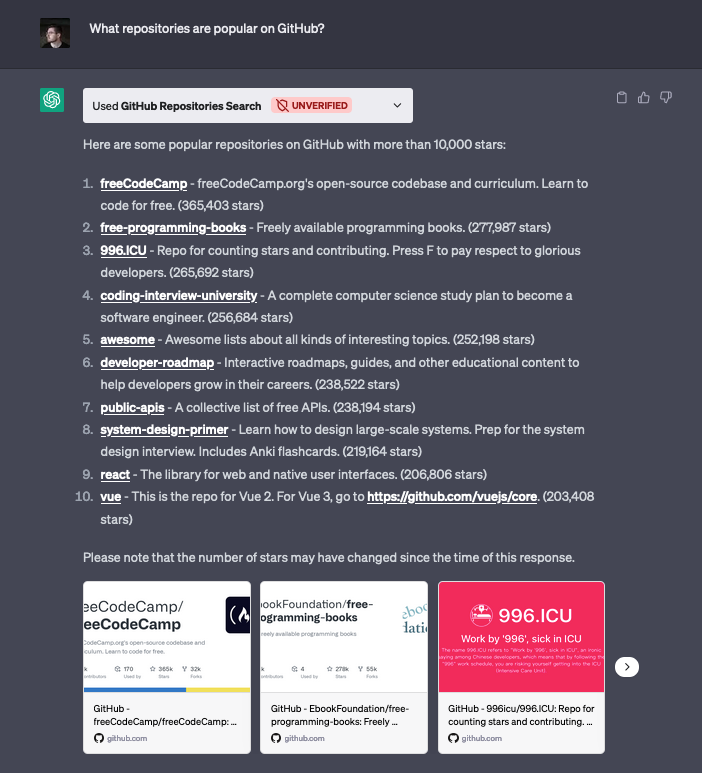

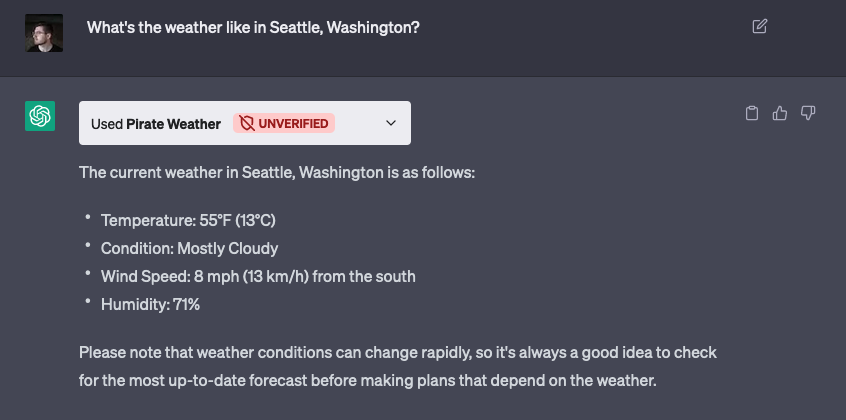

This post explains the new versions and aliases feature in AWS Step Functions, which lets you run specific revisions of a state machine instead of always using the latest. This makes deployments more reliable, helps control risk, and provides visibility into exactly which version is run. The post describes how to use the feature with incremental deployment patterns such as blue/green, canary, and linear deployments, each providing greater assurance that your state machine updates are sufficiently tested.

Step Functions is a low-code, visual workflow service to build distributed applications. Developers use the service to automate IT and business processes, and orchestrate AWS services with minimal code. It uses the Amazon States Language (ASL) to describe state machines and you can modify their definition over time. Until now, when a state machine was run, it used the ASL definition from the most recent update. If the latest change contained defects, disruptions could occur. The resolution either required another ASL update to fix the problem, or an explicit action to revert the state machine to a previous definition.

Using versions and aliases

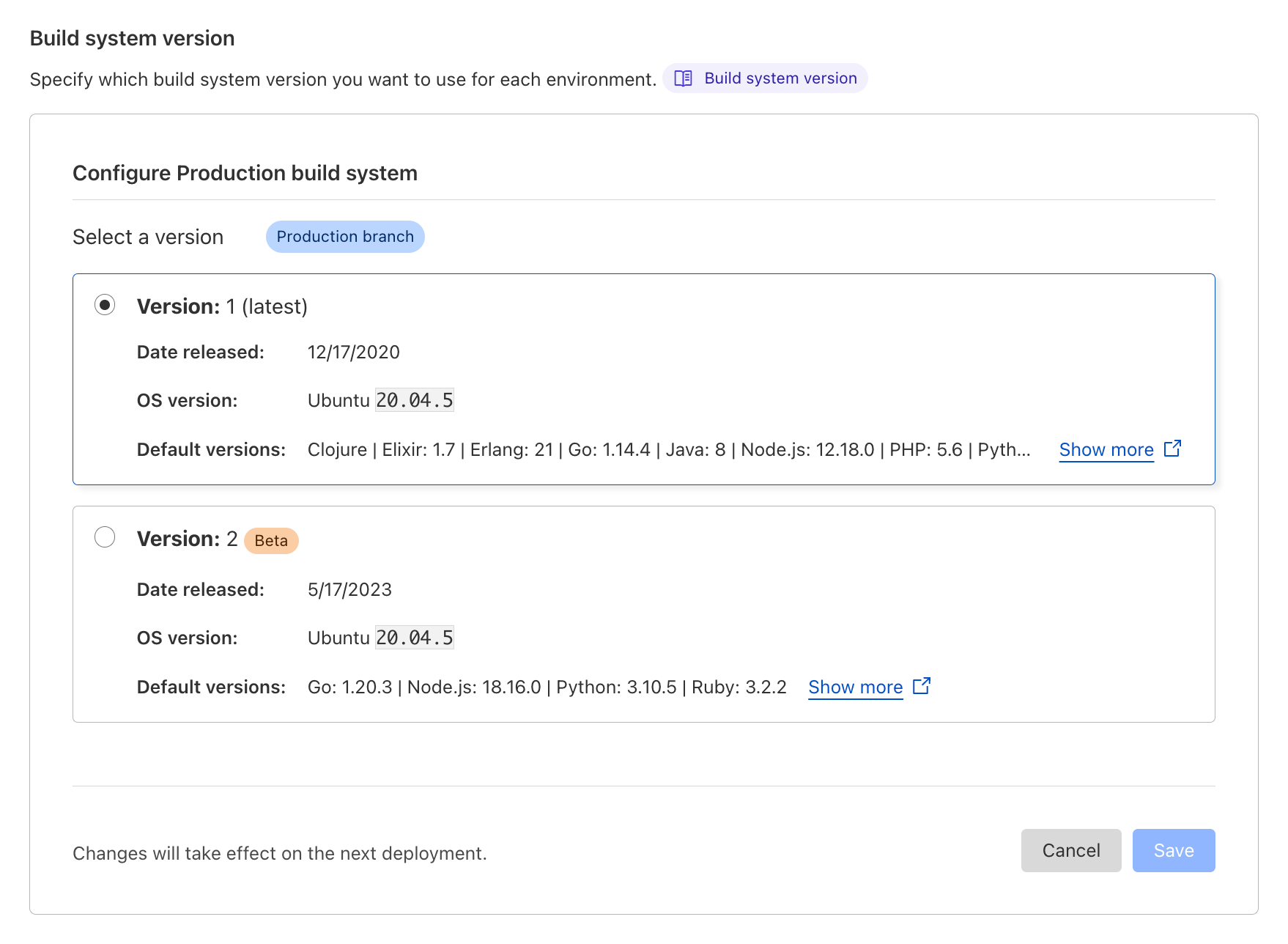

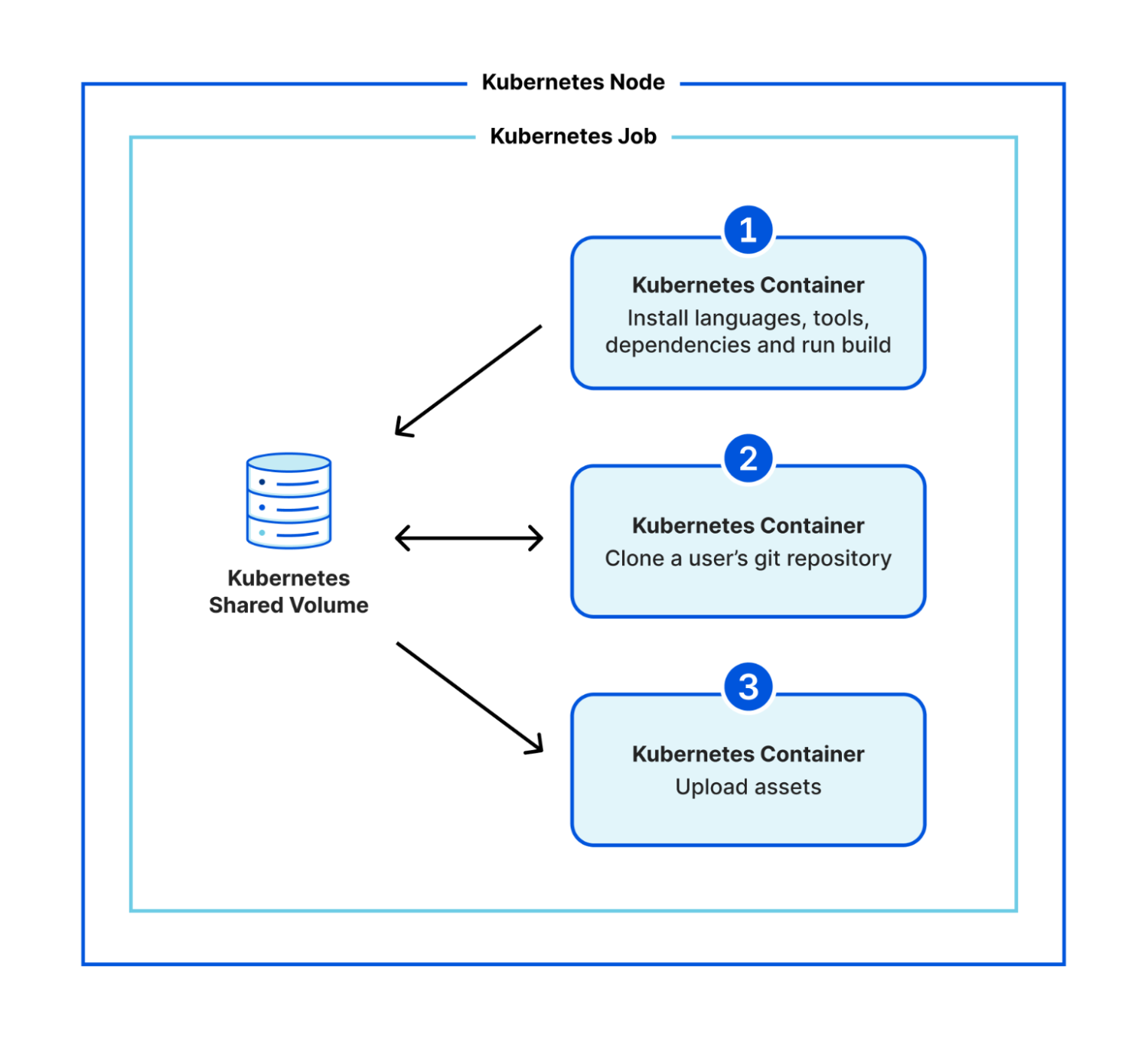

Every update to a state machine’s ASL definition can now be versioned using the Step Functions console, the AWS SDK, the AWS CLI, AWS CloudFormation, or a similar tool. You must choose to publish a new version explicitly, usually at the same time you update your ASL definition. Version numbers are assigned automatically, starting with version 1.
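For readers using the AWS SDK, publishing at update time might look roughly like the following sketch. It assumes a boto3 Step Functions client is passed in as `sfn`; the helper names `publish_request` and `update_and_publish` are hypothetical and exist only for illustration.

```python
import json


def publish_request(state_machine_arn, definition, description):
    """Build keyword arguments for an UpdateStateMachine call that also publishes."""
    return {
        "stateMachineArn": state_machine_arn,
        "definition": json.dumps(definition),  # ASL definition as a JSON string
        "publish": True,                       # publish a version with this update
        "versionDescription": description,
    }


def update_and_publish(sfn, state_machine_arn, definition, description):
    """Update the definition and return the ARN of the newly published version.

    `sfn` is assumed to be a boto3 client created with
    boto3.client("stepfunctions"); it is injected so that this sketch does not
    need AWS credentials to import.
    """
    response = sfn.update_state_machine(
        **publish_request(state_machine_arn, definition, description)
    )
    return response["stateMachineVersionArn"]
```

Separating the request builder from the call keeps the payload easy to inspect before anything is sent to AWS.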

To control which version of a state machine runs, you can now append a version number to the state machine ARN:

aws stepfunctions start-execution --state-machine-arn \
    arn:aws:states:us-east-1:123456789012:stateMachine:demo:5

This example starts version 5 of the demo state machine. Even if the state machine has since been updated, qualifying the state machine ARN ensures that version 5’s definition is used. You can now test newer versions (such as version 6) with confidence that executions of version 5 continue without interruption.
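The same qualification can be done in code. The sketch below is a minimal illustration, assuming a boto3 Step Functions client is supplied as `sfn`; `qualify_arn` and `start_version` are hypothetical helper names.

```python
def qualify_arn(state_machine_arn, qualifier):
    """Append a version number or alias name to an unqualified state machine ARN."""
    parts = state_machine_arn.split(":")
    # arn:<partition>:states:<region>:<account>:stateMachine:<name> has 7 fields.
    if len(parts) != 7 or parts[5] != "stateMachine":
        raise ValueError(f"not an unqualified state machine ARN: {state_machine_arn}")
    return f"{state_machine_arn}:{qualifier}"


def start_version(sfn, state_machine_arn, version, input_json="{}"):
    """Start an execution pinned to one version.

    `sfn` is assumed to be a boto3 Step Functions client.
    """
    response = sfn.start_execution(
        stateMachineArn=qualify_arn(state_machine_arn, version),
        input=input_json,
    )
    return response["executionArn"]
```

Rejecting an ARN that already carries a qualifier avoids accidentally producing something like `demo:5:6`.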

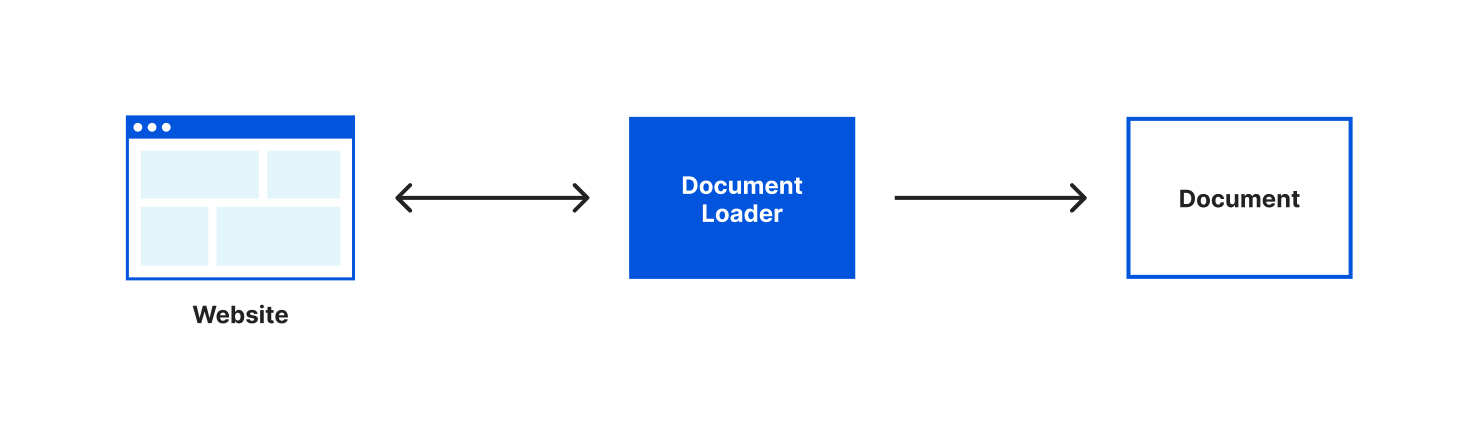

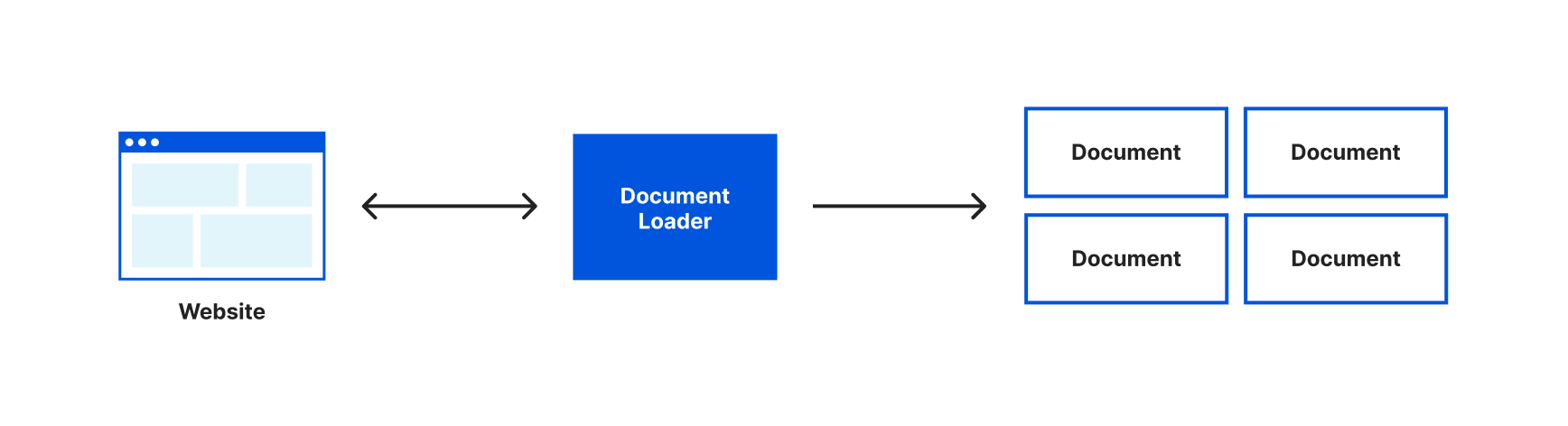

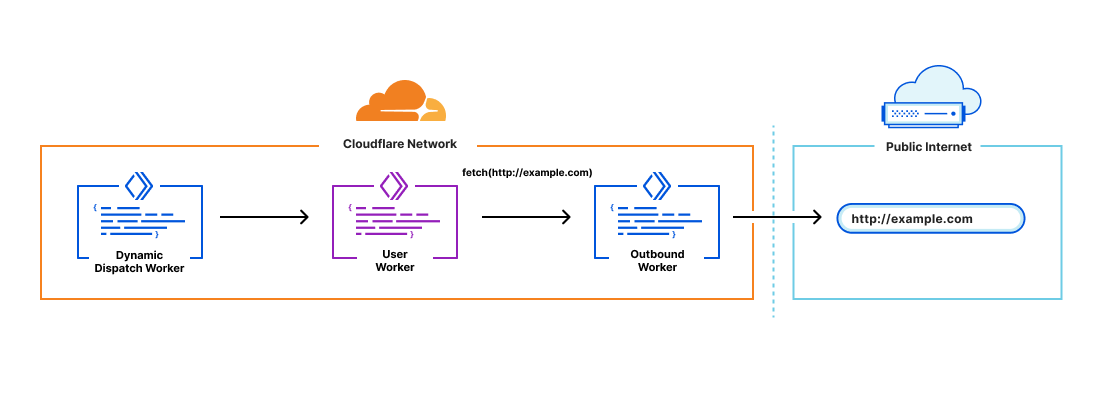

To ease the management of versions, you can assign a symbolic alias to a specific version and later update it to refer to a different version. An alias can also split execution requests between two different versions. For example, 90% of executions use version 5, and 10% use version 6.

To start a state machine execution using an alias, you can now append the alias name (such as prod) to the state machine ARN:

aws stepfunctions start-execution --state-machine-arn \
    arn:aws:states:us-east-1:123456789012:stateMachine:demo:prod

This example runs the state machine version that the prod alias currently refers to. If prod splits executions between two versions, one of them is selected based on the assigned weights. For example, version 5 is chosen 90% of the time, and version 6 is chosen 10% of the time.
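To build intuition for what a 90/10 split means over many executions, here is a small local simulation of weighted random selection. It illustrates the idea only and is not a description of how the service chooses a version internally; `pick_version` and `simulate` are hypothetical names.

```python
import random
from collections import Counter


def pick_version(routing, rng):
    """Choose one version from [(version, weight), ...] in proportion to its weight."""
    versions = [version for version, _ in routing]
    weights = [weight for _, weight in routing]
    return rng.choices(versions, weights=weights, k=1)[0]


def simulate(routing, executions, seed=0):
    """Count how many simulated executions land on each version."""
    rng = random.Random(seed)  # seeded so repeated runs give the same counts
    return Counter(pick_version(routing, rng) for _ in range(executions))


if __name__ == "__main__":
    # Version 5 should receive roughly nine times as many executions as version 6.
    print(simulate([(5, 90), (6, 10)], executions=10_000))
```

Because selection is random per execution, small samples can deviate noticeably from the configured weights; the proportions only settle as the number of executions grows.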

Incremental deployment use cases

Using common deployment patterns helps avoid the pitfalls of traditional “big bang” updates, such as all executions failing when new software is deployed. By using an alias to gradually transition state machine executions to the newly published version (for example, 10% at a time), newly introduced bugs have limited impact. Once there’s confidence in the new version, it can be used for the entire production workload.

Blue/green deployments

In this approach, the existing state machine version (currently used in production) is the “blue” version, whereas a newly deployed state machine is the “green” version. As a rule, you should deploy the blue version in production, while testing the newer green version in a separate environment. Once the green version is validated, use it in production (it becomes the new blue version).

If version 6 causes issues in production, roll back the “blue” alias to the previous value so that executions revert to version 5.

This approach provides a higher degree of quality assurance for state machines. However, unless your test suite provides an accurate representation of your production workload, you should also consider canary or linear (or rolling) deployments to validate with real data.

Canary and linear deployments

With canary deployments, configure the prod alias to split traffic between the earlier version (for example, 95% of requests) and the new version (5% of requests). If there’s no resulting increase in failures, you can adjust the alias to direct 100% of requests to the new version. On failure, revert the alias to send 100% of requests to the earlier version.
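A canary rollout boils down to two alias updates: one that opens the canary, and one that either promotes or reverts. The sketch below assumes a boto3 Step Functions client passed in as `sfn`; the helper names are hypothetical, and how you decide that the canary failed (for example, from an alarm) is left to the caller.

```python
def canary_routing(old_version_arn, new_version_arn, canary_percent=5):
    """Routing that sends a small share of executions to the new version."""
    if not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be between 1 and 99")
    return [
        {"stateMachineVersionArn": old_version_arn, "weight": 100 - canary_percent},
        {"stateMachineVersionArn": new_version_arn, "weight": canary_percent},
    ]


def final_routing(old_version_arn, new_version_arn, canary_failed):
    """After the canary period, send 100% of executions to a single version."""
    target = old_version_arn if canary_failed else new_version_arn
    return [{"stateMachineVersionArn": target, "weight": 100}]


def apply_routing(sfn, alias_arn, routing):
    """Point the alias at the given routing; `sfn` is an assumed boto3 client."""
    sfn.update_state_machine_alias(
        stateMachineAliasArn=alias_arn,
        routingConfiguration=routing,
    )
```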

A linear deployment takes a similar approach, but incrementally adjusts the weights over time until the new version receives 100% of requests. For example, start with 10%/90%, then 20%/80%, continuing at regular intervals until you reach 100%/0%. If an elevated number of failures is detected, immediately roll back to the earlier version.
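The sequence of weights in a linear deployment can be generated with a few lines of code. This is a sketch with a hypothetical function name, `linear_schedule`; each pair lists the new version's weight first, matching the 10%/90% notation above.

```python
def linear_schedule(step_percent):
    """Return (new_weight, old_weight) pairs, ending with (100, 0)."""
    if not 0 < step_percent <= 100:
        raise ValueError("step_percent must be between 1 and 100")
    schedule = []
    new_weight = 0
    while new_weight < 100:
        # Cap at 100 so a step size that does not divide 100 still finishes cleanly.
        new_weight = min(100, new_weight + step_percent)
        schedule.append((new_weight, 100 - new_weight))
    return schedule
```

With a step of 10, the schedule has ten entries, starting at (10, 90) and ending at (100, 0).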

Deploying a full application

Another scenario is when state machines are deployed as part of a larger application, with the application code and state machine being updated in lock-step. The following example shows a blue/green deployment where the application version 56 uses state machine version 5, and application version 64 uses version 6.

The application must use the correct version ARN when invoking the state machine. This avoids unexpected behavior changes in the blue version when the green version (still to be tested) is first deployed. If you unintentionally use the unqualified ARN (without the version number), the outdated application (version 56) would incorrectly use the latest state machine definition (version 6) instead of the previously deployed version 5.
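One way to guard against accidentally using an unqualified ARN is to pin the version ARN per application release and validate it at startup. The sketch below is illustrative only: the `PINNED_VERSIONS` mapping, the account ID, and the `demo` state machine name are assumptions carried over from the earlier CLI examples.

```python
# Hypothetical mapping from application version to the state machine version it was tested with.
PINNED_VERSIONS = {
    56: "arn:aws:states:us-east-1:123456789012:stateMachine:demo:5",
    64: "arn:aws:states:us-east-1:123456789012:stateMachine:demo:6",
}


def is_version_arn(arn):
    """True if the ARN ends in a numeric version qualifier."""
    fields = arn.split(":")
    # A version ARN has 8 fields; the last one is the version number.
    return len(fields) == 8 and fields[5] == "stateMachine" and fields[7].isdigit()


def state_machine_arn_for(app_version, pinned=PINNED_VERSIONS):
    """Return the pinned version ARN, refusing anything unqualified."""
    arn = pinned[app_version]
    if not is_version_arn(arn):
        raise ValueError(f"application {app_version} is not pinned to a version: {arn}")
    return arn
```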

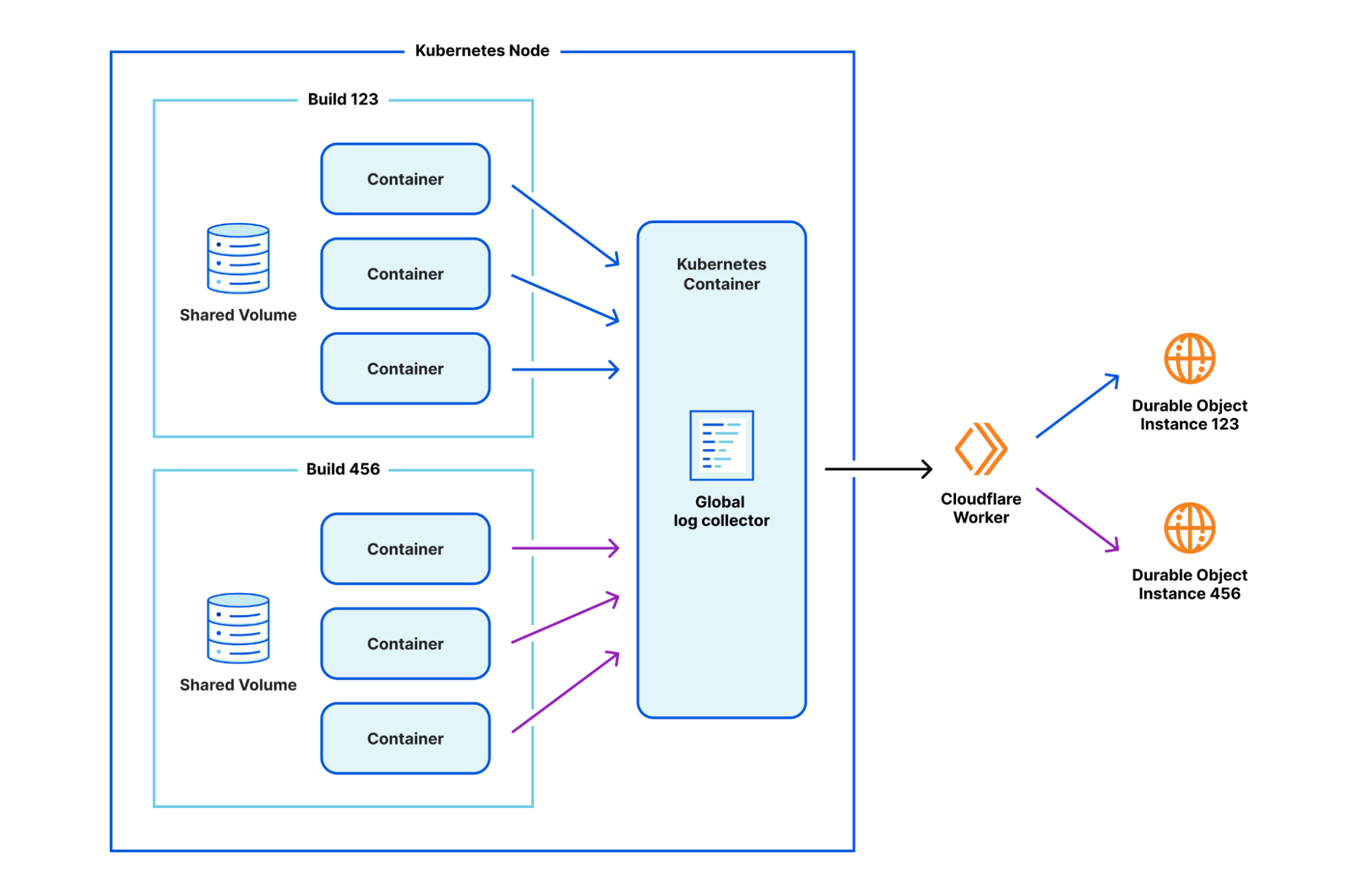

Observability and auditing use cases

A significant benefit of using version ARNs is seen when examining execution history, especially with long-running executions. State machines can run for up to one year, accessing other AWS resources (such as AWS Lambda functions) throughout this time. For the sake of auditing resources, it’s important to know the version of each running state machine. Once all executions have completed, you can remove the resources they depend on (in the following example, the ProcessInventory Lambda function).
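An audit of this kind could be scripted along the following lines. The sketch assumes a boto3 Step Functions client passed in as `sfn`, and assumes that each ListExecutions item carries a `stateMachineVersionArn` field when the execution was started with a version or alias; the helper names are hypothetical.

```python
from collections import Counter


def list_running(sfn, state_machine_arn):
    """Yield RUNNING execution items, following pagination tokens."""
    kwargs = {"stateMachineArn": state_machine_arn, "statusFilter": "RUNNING"}
    while True:
        page = sfn.list_executions(**kwargs)
        yield from page["executions"]
        token = page.get("nextToken")
        if not token:
            return
        kwargs["nextToken"] = token


def running_by_version(executions):
    """Count RUNNING executions per version ARN."""
    counts = Counter()
    for item in executions:
        if item.get("status") == "RUNNING":
            # Executions started with an unqualified ARN have no version ARN.
            counts[item.get("stateMachineVersionArn", "unversioned")] += 1
    return counts
```

A version that no longer appears in the result has no in-flight executions, which is the signal that resources used only by that version are candidates for removal.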

Depending on your use case, you may have other auditing or compliance needs where it’s important to know exactly which version of the state machine you’re running.

Feature walkthrough

To create a new state machine version in the Step Functions console, choose Publish Version immediately after saving your state machine definition. You are prompted to enter an optional description, such as “Initial Implementation”.

You can also choose Publish Version after updating an existing state machine, adding an optional description for the recent changes, such as “Add retry logic”.

On the main state machine detail page, there are two new tabs: Aliases and Versions. The Versions tab shows a list of state machine versions, their descriptions, when each was last run, and which aliases refer to that version. This example shows several new versions.

To start running a specific version, select the radio button to the left of the version number, then choose Start execution.

On the state machine detail page, choose the Executions tab to see the completed and in-progress executions. Additional columns indicate which version or alias started each execution. You can filter the execution list by version or alias to refine the list.

To create a state machine alias, return to the state machine detail page, select the Aliases tab, then choose Create Alias. Provide an alias name, an optional description, and a routing configuration. For the simple case, select a single version to use (100% of executions) whenever an execution is started using the alias.

To create an alias that routes traffic to two versions (as seen in the incremental-deployment examples), provide a routing configuration with two different version numbers. Specify the percentage of the state machine executions for each of the versions.
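Outside the console, the same alias could be created through the SDK. The following sketch assumes a boto3 Step Functions client passed in as `sfn`; `validate_routing` is a hypothetical client-side check that mirrors the constraints described above (one or two distinct versions, with percentages adding up to 100).

```python
def validate_routing(routing):
    """Check a routing configuration before sending it to the service."""
    if len(routing) not in (1, 2):
        raise ValueError("an alias routes to one or two versions")
    arns = [entry["stateMachineVersionArn"] for entry in routing]
    if len(set(arns)) != len(arns):
        raise ValueError("the two versions must be different")
    if sum(entry["weight"] for entry in routing) != 100:
        raise ValueError("weights must add up to 100")
    return routing


def create_alias(sfn, name, routing, description=""):
    """Create an alias and return its ARN; `sfn` is an assumed boto3 client."""
    response = sfn.create_state_machine_alias(
        name=name,
        description=description,
        routingConfiguration=validate_routing(routing),
    )
    return response["stateMachineAliasArn"]
```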

Implementing CI/CD Deployments with AWS CloudFormation

To support incremental deployments, new AWS CloudFormation resources can publish state machine versions, define aliases, and incrementally deploy state machine updates using a blue/green, canary, or linear approach.

The following example shows the AWS::StepFunctions::StateMachine, AWS::StepFunctions::StateMachineVersion, and AWS::StepFunctions::StateMachineAlias resources used to define a state machine, to publish a single version, and to deploy using the prod alias linearly.

Description: "Example of Linear Deployment of a State Machine"
Parameters:
  StateMachineBucket:
    Type: "String"
  StateMachineKey:
    Type: "String"
  StateMachineRole:
    Type: "String"

Resources:
  DemoStateMachine:
    Type: "AWS::StepFunctions::StateMachine"
    Properties:
      StateMachineName: DemoStateMachine
      DefinitionS3Location:
        Bucket: !Ref StateMachineBucket
        Key: !Ref StateMachineKey
      RoleArn: !Ref StateMachineRole

  DemoStateMachineVersion:
    Type: "AWS::StepFunctions::StateMachineVersion"
    Properties:
      StateMachineArn: !Ref DemoStateMachine
      StateMachineRevisionId: !GetAtt DemoStateMachine.StateMachineRevisionId

  DemoAlias:
    Type: "AWS::StepFunctions::StateMachineAlias"
    Properties:
      Name: prod
      DeploymentPreference:
        StateMachineVersionArn: !Ref DemoStateMachineVersion
        Type: LINEAR
        Interval: 2
        Percentage: 20
        Alarms:
          - !Ref DemoCloudWatchAlarm

Each time you modify the state machine, update the StateMachineKey parameter with a new date-stamped file, such as state_machine-202305251336.asl.json, then redeploy the CloudFormation template. Executions of this state machine linearly transition from the previous version to the new version over a ten-minute period, using five equal intervals of two minutes each. If the specified Amazon CloudWatch Alarm is triggered, the alias automatically rolls back to the previous state machine version.
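Generating the date-stamped key is easy to automate in a deployment script. The sketch below reproduces the naming pattern from the example file name; the function names and the `state_machine` prefix default are assumptions for illustration.

```python
from datetime import datetime


def definition_key(when, prefix="state_machine"):
    """Build a date-stamped S3 key such as state_machine-202305251336.asl.json."""
    return f"{prefix}-{when:%Y%m%d%H%M}.asl.json"


def stack_parameters(bucket, key, role_arn):
    """Parameters for the template above, in the list format used by the CloudFormation API."""
    return [
        {"ParameterKey": "StateMachineBucket", "ParameterValue": bucket},
        {"ParameterKey": "StateMachineKey", "ParameterValue": key},
        {"ParameterKey": "StateMachineRole", "ParameterValue": role_arn},
    ]
```

Because the key changes on every upload, CloudFormation sees a changed StateMachineKey parameter and therefore has an update to deploy.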

Additionally, for users of common third-party CI/CD tools, such as Jenkins or Spinnaker, or even your custom systems, a reference implementation demonstrates how to implement incremental deployments using the AWS SDK or AWS CLI, complete with automated rollback if a CloudWatch alarm is triggered.
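The core loop of such a custom deployment can be sketched in a few lines. This is not the reference implementation mentioned above, only a minimal illustration of the idea. It assumes two caller-supplied callables: `set_new_weight(p)`, which points p% of executions at the new version (for example, by updating the alias routing), and `alarm_in_alarm()`, which returns True when the CloudWatch alarm is in the ALARM state.

```python
import time


def linear_rollout(set_new_weight, alarm_in_alarm,
                   step_percent=20, interval_seconds=120, sleep=time.sleep):
    """Shift traffic in equal steps; return True on success, False after a rollback."""
    new_weight = 0
    while new_weight < 100:
        new_weight = min(100, new_weight + step_percent)
        set_new_weight(new_weight)
        sleep(interval_seconds)      # let the new weights take traffic before checking
        if alarm_in_alarm():
            set_new_weight(0)        # roll back: all executions go to the earlier version
            return False
    return True
```

The `sleep` parameter is injectable so the loop can be exercised quickly in tests; in a real pipeline the default `time.sleep` would be used.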

Pricing and availability

Customers can use Step Functions versions and aliases in all Regions where Step Functions is available. Versions and aliases are included in Step Functions pricing at no additional fee.

Conclusion

The new Step Functions versions and aliases feature allows you to run specific revisions of the state machine, instead of always using the latest. This allows for more reliable deployments that help control deployment risks, and also provides visibility into exactly which version was run. After updating your state machine definition, you may optionally publish a version of that state machine, then run the version by using a versioned state machine ARN.

Likewise, an alias (such as test or prod) can reference state machine versions that change over time. For example, starting an execution using the prod alias ensures that you only use well-tested revisions of the state machine, even if newer non-production-ready revisions are present.

Aliases can split executions between two different versions, using percentage weights to choose between them. This feature supports incremental-deployment patterns such as blue/green, canary, and linear deployments, each providing greater assurance that your state machine updates deploy successfully.

For more serverless learning resources, visit Serverless Land.

Michael Hamilton is a Sr Analytics Solutions Architect focusing on helping enterprise customers in the south east modernize and simplify their analytics workloads on AWS. He enjoys mountain biking and spending time with his wife and three children when not working.

Angus Ferguson is a Solutions Architect at AWS who is passionate about meeting customers across the world, helping them solve their technical challenges. Angus specializes in Data & Analytics with a focus on customers in the financial services industry.