Post Syndicated from Wakana Vilquin-Sakashita original https://aws.amazon.com/blogs/big-data/manage-users-and-group-memberships-on-amazon-quicksight-using-scim-events-generated-in-iam-identity-center-with-azure-ad/

Amazon QuickSight is a cloud-native, scalable business intelligence (BI) service that supports identity federation. AWS Identity and Access Management (IAM) allows organizations to use the identities managed in their enterprise identity provider (IdP) and federate single sign-on (SSO) to QuickSight. As more organizations build centralized user identity stores for all their applications, including on-premises apps, third-party apps, and applications on AWS, they need a solution to automate user provisioning into these applications and keep user attributes in sync with their centralized user identity store.

When architecting a user repository, some organizations decide to organize their users in groups or use attributes (such as department name), or a combination of both. If your organization uses Microsoft Azure Active Directory (Azure AD) for centralized authentication and utilizes its user attributes to organize the users, you can enable federation across all QuickSight accounts as well as manage users and their group membership in QuickSight using events generated in the AWS platform. This allows system administrators to centrally manage user permissions from Azure AD. Provisioning, updating, and de-provisioning users and groups in QuickSight no longer requires management in two places with this solution. This makes sure that users and groups in QuickSight stay consistent with information in Azure AD through automatic synchronization.

In this post, we walk you through the steps required to configure federated SSO between QuickSight and Azure AD via AWS IAM Identity Center (Successor to AWS Single Sign-On) where automatic provisioning is enabled for Azure AD. We also demonstrate automatic user and group membership update using a System for Cross-domain Identity Management (SCIM) event.

Solution overview

The following diagram illustrates the solution architecture and user flow.

In this post, IAM Identity Center provides a central place to bring together administration of users and their access to AWS accounts and cloud applications. Azure AD is the user repository and configured as the external IdP in IAM Identity Center. In this solution, we demonstrate the use of two user attributes (department, jobTitle) specifically in Azure AD. IAM Identity Center supports automatic provisioning (synchronization) of user and group information from Azure AD into IAM Identity Center using the SCIM v2.0 protocol. With this protocol, the attributes from Azure AD are passed along to IAM Identity Center, which inherits the defined attribute for the user’s profile in IAM Identity Center. IAM Identity Center also supports identity federation with SAML (Security Assertion Markup Language) 2.0. This allows IAM Identity Center to authenticate identities using Azure AD. Users can then SSO into applications that support SAML, including QuickSight. The first half of this post focuses on how to configure this end to end (see Sign-In Flow in the diagram).

Next, user information starts to get synchronized between Azure AD and IAM Identity Center via the SCIM protocol. You can automate creating a user in QuickSight using an AWS Lambda function triggered by the CreateUser SCIM event that originates from IAM Identity Center and is captured in Amazon EventBridge. In the same Lambda function, you can subsequently update the user’s membership by adding the user to the specified group (whose name is composed of two user attributes: department-jobTitle), creating the group first if it doesn’t exist yet.

In this post, this automation part is omitted because it would be redundant with the content discussed in the following sections.

This post explores and demonstrates an UpdateUser SCIM event triggered by the user profile update on Azure AD. The event is captured in EventBridge, which invokes a Lambda function to update the group membership in QuickSight (see Update Flow in the diagram). Because a given user is supposed to belong to only one group at a time in this example, the function will replace the user’s current group membership with the new one.

In Part I, you set up SSO to QuickSight from Azure AD via IAM Identity Center (the sign-in flow):

- Configure Azure AD as the external IdP in IAM Identity Center.

- Add and configure an IAM Identity Center application in Azure AD.

- Complete configuration of IAM Identity Center.

- Set up SCIM automatic provisioning on both Azure AD and IAM Identity Center, and confirm in IAM Identity Center.

- Add and configure a QuickSight application in IAM Identity Center.

- Configure a SAML IdP and SAML 2.0 federation IAM role.

- Configure attributes in the QuickSight application.

- Create a user, group, and group membership manually via the AWS Command Line Interface (AWS CLI) or API.

- Verify the configuration by logging in to QuickSight from the IAM Identity Center portal.

In Part II, you set up automation to change group membership upon a SCIM event (the update flow):

- Understand SCIM events and event patterns for EventBridge.

- Create attribute mapping for the group name.

- Create a Lambda function.

- Add an EventBridge rule to trigger the event.

- Verify the configuration by changing the user attribute value at Azure AD.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- IAM Identity Center. For instructions, refer to Steps 1–2 in the AWS IAM Identity Center Getting Started guide.

- A QuickSight account subscription.

- Basic understanding of IAM and privileges required to create an IAM IdP, roles, and policies.

- An Azure AD subscription. You need at least one user with the following attributes to be registered in Azure AD:

- userPrincipalName – Mandatory field for Azure AD user.

- displayName – Mandatory field for Azure AD user.

- Mail – Mandatory field for IAM Identity Center to work with QuickSight.

- jobTitle – Used to allocate the user to a group.

- department – Used to allocate the user to a group.

- givenName – Optional field.

- surname – Optional field.

Part I: Set up SSO to QuickSight from Azure AD via IAM Identity Center

This section presents the steps to set up the sign-in flow.

Configure Azure AD as the external IdP in IAM Identity Center

To configure your external IdP, complete the following steps:

- On the IAM Identity Center console, choose Settings.

- Choose Actions on the Identity source tab, then choose Change identity source.

- Choose External identity provider, then choose Next.

The IdP metadata is displayed. Keep this browser tab open.

Add and configure an IAM Identity Center application in Azure AD

To set up your IAM Identity Center application, complete the following steps:

- Open a new browser tab.

- Log in to the Azure AD portal using your Azure administrator credentials.

- Under Azure services, choose Azure Active Directory.

- In the navigation pane, under Manage, choose Enterprise applications, then choose New application.

- In the Browse Azure AD Gallery section, search for IAM Identity Center, then choose AWS IAM Identity Center (successor to AWS Single Sign-On).

- Enter a name for the application (in this post, we use IIC-QuickSight) and choose Create.

- In the Manage section, choose Single sign-on, then choose SAML.

- In the Assign users and groups section, choose Assign users and groups.

- Choose Add user/group and add at least one user.

- Select User as its role.

- In the Set up single sign on section, choose Get started.

- In the Basic SAML Configuration section, choose Edit, and fill out the following parameters and values:

- Identifier – The value in the IAM Identity Center issuer URL field.

- Reply URL – The value in the IAM Identity Center Assertion Consumer Service (ACS) URL field.

- Sign on URL – Leave blank.

- Relay State – Leave blank.

- Logout URL – Leave blank.

- Choose Save.

The configuration should look like the following screenshot.

- In the SAML Certificates section, download the Federation Metadata XML file and the Certificate (Raw) file.

You’re all set with Azure AD SSO configuration at this moment. Later on, you’ll return to this page to configure automated provisioning, so keep this browser tab open.

Complete configuration of IAM Identity Center

Complete your IAM Identity Center configuration with the following steps:

- Go back to the browser tab for the IAM Identity Center console, which you kept open earlier.

- For IdP SAML metadata under the Identity provider metadata section, choose Choose file.

- Choose the previously downloaded metadata file (IIC-QuickSight.xml).

- For IdP certificate under the Identity provider metadata section, choose Choose file.

- Choose the previously downloaded certificate file (IIC-QuickSight.cer).

- Choose Next.

- Enter ACCEPT, then choose Change Identity provider source.

Set up SCIM automatic provisioning on both Azure AD and IAM Identity Center

Your provisioning method is still set as Manual (non-SCIM). In this step, we enable automatic provisioning so that IAM Identity Center becomes aware of the users, which allows identity federation to QuickSight.

- In the Automatic provisioning section, choose Enable.

- Choose Access token to show your token.

- Go back to the Azure AD browser tab, which you kept open earlier.

- In the Manage section, choose Enterprise applications.

- Choose IIC-QuickSight, then choose Provisioning.

- Choose Automatic in Provisioning Mode and enter the following values:

- Tenant URL – The value in the SCIM endpoint field.

- Secret Token – The value in the Access token field.

- Choose Test Connection.

- After the test connection is successfully complete, set Provisioning Status to On.

- Choose Save.

- Choose Start provisioning to start automatic provisioning using the SCIM protocol.

When provisioning is complete, it will result in propagating one or more users from Azure AD to IAM Identity Center. The following screenshot shows the users that were provisioned in IAM Identity Center.

Note that upon this SCIM provisioning, the users in QuickSight should be created using the Lambda function triggered by the event originated from IAM Identity Center. In this post, we create a user and group membership via the AWS CLI (Step 8).

Add and configure a QuickSight application in IAM Identity Center

In this step, we create a QuickSight application in IAM Identity Center. You also configure an IAM SAML provider, role, and policy for the application to work. Complete the following steps:

- On the IAM Identity Center console, on the Applications page, choose Add Application.

- For Pre-integrated application under Select an application, enter quicksight.

- Select Amazon QuickSight, then choose Next.

- Enter a name for Display name, such as Amazon QuickSight.

- Choose Download under IAM Identity Center SAML metadata file and save it on your computer.

- Leave all other fields as they are, and save the configuration.

- Open the application you’ve just created, then choose Assign Users.

The users provisioned via SCIM earlier will be listed.

- Choose all of the users to assign to the application.

Configure a SAML IdP and a SAML 2.0 federation IAM role

To set up your IAM SAML IdP for IAM Identity Center and IAM role, complete the following steps:

- On the IAM console, in the navigation pane, choose Identity providers, then choose Add provider.

- Choose SAML as Provider type, and enter Azure-IIC-QS as Provider name.

- Under Metadata document, choose Choose file and upload the metadata file you downloaded earlier.

- Choose Add provider to save the configuration.

- In the navigation pane, choose Roles, then choose Create role.

- For Trusted entity type, select SAML 2.0 federation.

- For Choose a SAML 2.0 provider, select the SAML provider that you created, then choose Allow programmatic and AWS Management Console access.

- Choose Next.

- On the Add Permission page, choose Next.

In this post, we create QuickSight users via an AWS CLI command, therefore we’re not creating any permission policy. However, if the self-provisioning feature in QuickSight is required, the permission policy for the CreateReader, CreateUser, and CreateAdmin actions (depending on the role of the QuickSight users) is required.

- On the Name, review, and create page, under Role details, enter qs-reader-azure for the role.

- Choose Create role.

- Note the ARN of the role.

You use the ARN to configure attributes in your IAM Identity Center application.

Configure attributes in the QuickSight application

To associate the IAM SAML IdP and IAM role to the QuickSight application in IAM Identity Center, complete the following steps:

- On the IAM Identity Center console, in the navigation pane, choose Applications.

- Select the Amazon QuickSight application, and on the Actions menu, choose Edit attribute mappings.

- Choose Add new attribute mapping.

- Configure the mappings in the following table.

| User attribute in the application | Maps to this string value or user attribute in IAM Identity Center |
| --- | --- |
| Subject | ${user:email} |
| https://aws.amazon.com/SAML/Attributes/RoleSessionName | ${user:email} |
| https://aws.amazon.com/SAML/Attributes/Role | arn:aws:iam::<ACCOUNTID>:role/qs-reader-azure,arn:aws:iam::<ACCOUNTID>:saml-provider/Azure-IIC-QS |
| https://aws.amazon.com/SAML/Attributes/PrincipalTag:Email | ${user:email} |

Note the following values:

- Replace <ACCOUNTID> with your AWS account ID.

- PrincipalTag:Email is for the email syncing feature for self-provisioning users, which needs to be enabled on the QuickSight admin page. In this post, don’t enable this feature because we register the user with an AWS CLI command.

- Choose Save changes.
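The Role attribute in the table above is a single comma-separated string: the IAM role ARN followed by the SAML provider ARN. As a small illustration, the following Python sketch assembles that value; the function name and the account ID 111122223333 are hypothetical placeholders, while the role and provider names are the ones used in this post.

```python
def saml_role_attribute(account_id: str, role_name: str, provider_name: str) -> str:
    """Build '<role ARN>,<SAML provider ARN>' for the SAML Role attribute."""
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    provider_arn = f"arn:aws:iam::{account_id}:saml-provider/{provider_name}"
    return f"{role_arn},{provider_arn}"


# 111122223333 is a placeholder account ID; replace it with your own.
print(saml_role_attribute("111122223333", "qs-reader-azure", "Azure-IIC-QS"))
```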

Create a user, group, and group membership with the AWS CLI

As described earlier, users and groups in QuickSight are created manually in this solution. We create them via the following AWS CLI commands.

The first step is to create a user in QuickSight specifying the IAM role created earlier and email address registered in Azure AD. The second step is to create a group with the group name as combined attribute values from Azure AD for the user created in the first step. The third step is to add the user into the group created earlier; member-name indicates the user name created in QuickSight that is comprised of <IAM Role name>/<session name>. See the following code:
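As a rough Python counterpart to these three steps, the sketch below builds the keyword arguments for the Boto3 QuickSight calls `register_user`, `create_group`, and `create_group_membership`. It is an illustration under assumptions, not the commands from the original walkthrough: the helper names, the `READER` user role, the `default` namespace, and the example account ID, email, and attribute values are all placeholders, and the session name is assumed to be the user's email because RoleSessionName is mapped to `${user:email}`.

```python
from typing import Any, Dict


def quicksight_setup_requests(account_id: str, email: str, role_name: str,
                              department: str, job_title: str,
                              namespace: str = "default") -> Dict[str, Dict[str, Any]]:
    """Return keyword arguments for the three QuickSight calls, in execution order."""
    group_name = f"{department}-{job_title}"  # group name = combined attribute values
    common = {"AwsAccountId": account_id, "Namespace": namespace}
    return {
        # Step 1: register the user with the IAM role and email address.
        "register_user": {
            **common,
            "IdentityType": "IAM",
            "Email": email,
            "UserRole": "READER",  # assumption: the user is a reader
            "IamArn": f"arn:aws:iam::{account_id}:role/{role_name}",
            "SessionName": email,
        },
        # Step 2: create the group.
        "create_group": {**common, "GroupName": group_name},
        # Step 3: add the user; the member name is <IAM role name>/<session name>.
        "create_group_membership": {
            **common,
            "MemberName": f"{role_name}/{email}",
            "GroupName": group_name,
        },
    }


def apply_requests(quicksight_client: Any, requests: Dict[str, Dict[str, Any]]) -> None:
    """Invoke each operation on a QuickSight client (for example, boto3.client('quicksight'))."""
    for operation, kwargs in requests.items():
        getattr(quicksight_client, operation)(**kwargs)


# Placeholder values for illustration only.
example = quicksight_setup_requests(
    "111122223333", "user@example.com", "qs-reader-azure", "Marketing", "Specialist")
for operation, kwargs in example.items():
    print(operation, kwargs)
```

To run this against a real account, you would pass `boto3.client("quicksight")` to `apply_requests` with credentials that are allowed to call these three operations.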

At this point, the end-to-end configuration of Azure AD, IAM Identity Center, IAM, and QuickSight is complete.

Verify the configuration by logging in to QuickSight from the IAM Identity Center portal

Now you’re ready to log in to QuickSight using the IdP-initiated SSO flow:

- Open a new private window in your browser.

- Log in to the IAM Identity Center portal (

https://d-xxxxxxxxxx.awsapps.com/start).

You’re redirected to the Azure AD login prompt.

- Enter your Azure AD credentials.

You’re redirected back to the IAM Identity Center portal.

- In the IAM Identity Center portal, choose Amazon QuickSight.

You’re automatically redirected to your QuickSight home.

Part II: Automate group membership change upon SCIM events

In this section, we configure the update flow.

Understand the SCIM event and event pattern for EventBridge

When an Azure AD administrator changes the attributes of a user profile, the change is synced with the user profile in IAM Identity Center via the SCIM protocol, and the activity is recorded as an AWS CloudTrail event called UpdateUser with sso-directory.amazonaws.com (IAM Identity Center) as the event source. Similarly, the CreateUser event is recorded when a user is created on Azure AD, and the DisableUser event is recorded when a user is disabled.

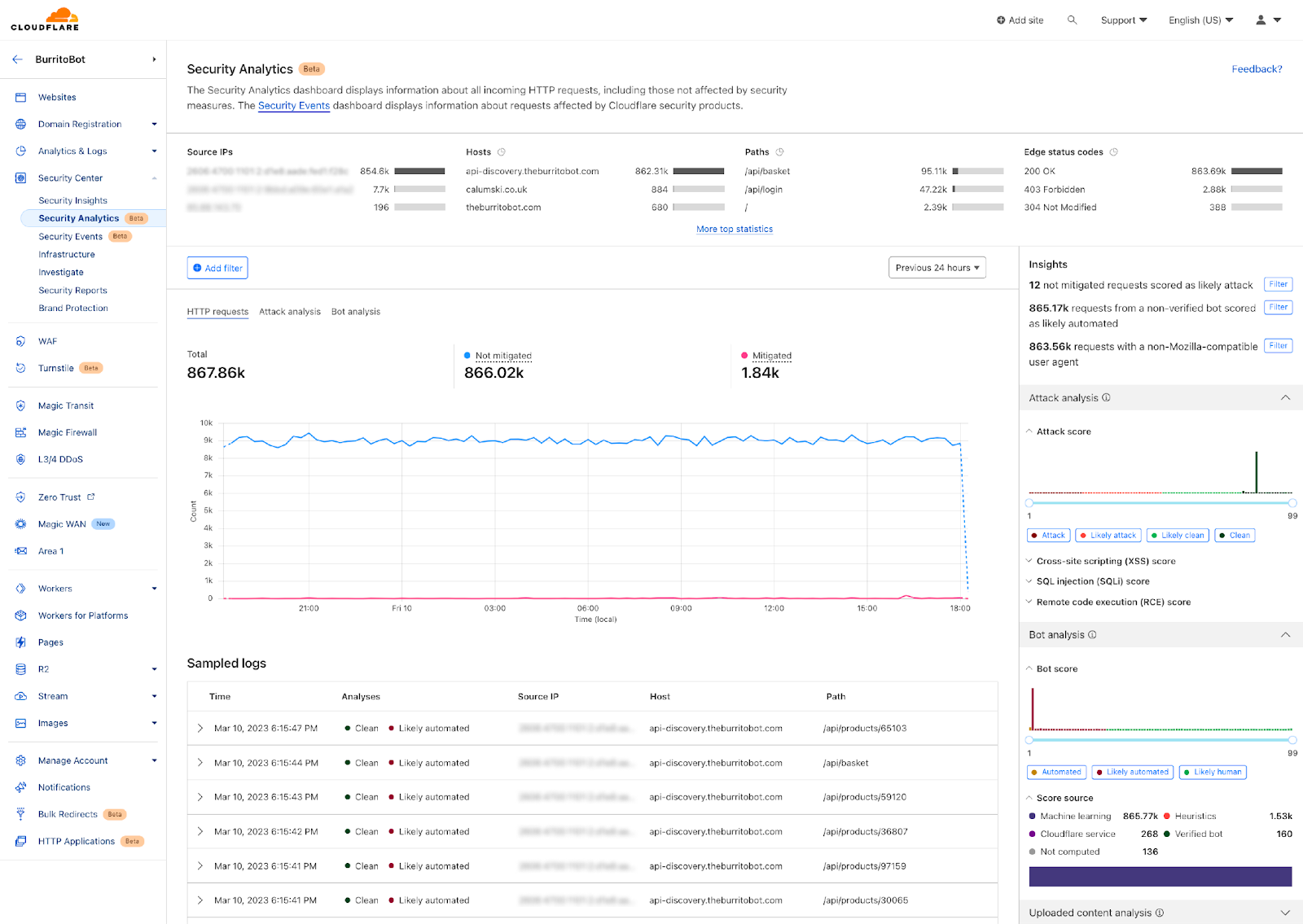

The following screenshot on the Event history page shows two CreateUser events: one is recorded by IAM Identity Center, and the other one is by QuickSight. In this post, we use the one from IAM Identity Center.

For EventBridge to handle the flow properly, each rule must specify an event pattern that lists the event fields you want to match. The following event pattern is an example that matches the UpdateUser event generated in IAM Identity Center upon SCIM synchronization:
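A pattern of this kind can be sketched as a Python dictionary. The `eventSource` and `eventName` values come from the text above; the `source` value `aws.sso-directory` is an assumption and should be checked against an actual event in your account. The `matches` helper is a deliberately simplified, hypothetical stand-in for EventBridge matching (exact values and nested objects only), included just to show which events the pattern selects.

```python
from typing import Any, Dict

# Event pattern for the UpdateUser event recorded by IAM Identity Center.
# "source" is an assumed value; verify it against a real event.
UPDATE_USER_PATTERN: Dict[str, Any] = {
    "source": ["aws.sso-directory"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["sso-directory.amazonaws.com"],
        "eventName": ["UpdateUser"],
    },
}


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Simplified matcher: every pattern key must be present in the event, and
    the event value must be one of the listed values (or match recursively)."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Trimmed-down example events (real events carry many more fields).
identity_center_event = {
    "source": "aws.sso-directory",
    "detail-type": "AWS API Call via CloudTrail",
    "detail": {"eventSource": "sso-directory.amazonaws.com", "eventName": "UpdateUser"},
}
quicksight_event = {
    "source": "aws.quicksight",
    "detail-type": "AWS API Call via CloudTrail",
    "detail": {"eventSource": "quicksight.amazonaws.com", "eventName": "CreateUser"},
}

print(matches(UPDATE_USER_PATTERN, identity_center_event))  # True
print(matches(UPDATE_USER_PATTERN, quicksight_event))       # False
```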

In this post, we demonstrate an automatic update of group membership in QuickSight that is triggered by the UpdateUser SCIM event.

Create attribute mapping for the group name

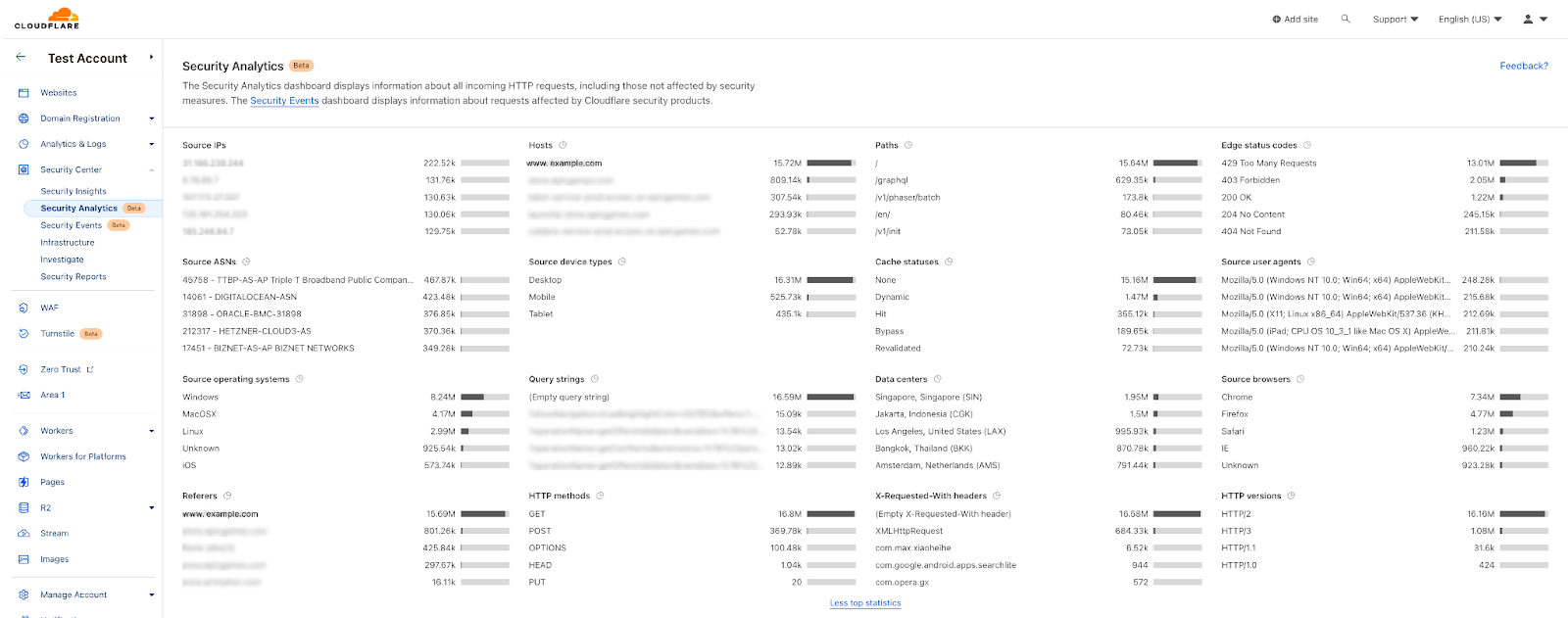

In order for the Lambda function to manage group membership in QuickSight, it must obtain the two user attributes (department and jobTitle). To make the process simpler, we’re combining two attributes in Azure AD (department, jobTitle) into one attribute in IAM Identity Center (title), using the attribute mappings feature in Azure AD. IAM Identity Center then uses the title attribute as a designated group name for this user.

- Log in to the Azure AD console, navigate to Enterprise Applications, IIC-QuickSight, and Provisioning.

- Choose Edit attribute mappings.

- Under Mappings, choose Provision Azure Active Directory Users.

- Choose jobTitle from the list of Azure Active Directory Attributes.

- Change the following settings:

- Mapping Type – Expression

- Expression – Join("-", [department], [jobTitle])

- Target attribute – title

- Choose Save.

- You can leave the provisioning page.

The attribute is automatically updated in IAM Identity Center. The updated user profile looks like the following screenshots (Azure AD on the left, IAM Identity Center on the right).
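To make the effect of the mapping expression concrete, the following Python sketch mimics what `Join("-", [department], [jobTitle])` produces for a user. The function name and the attribute values are hypothetical, and the sketch does not model how Azure AD treats empty or missing attributes.

```python
def join_expression(separator: str, *values: str) -> str:
    """Concatenate attribute values with a separator, like the Join mapping expression."""
    return separator.join(values)


# Example values for illustration only.
department = "Marketing"
job_title = "Specialist"

title = join_expression("-", department, job_title)
print(title)  # Marketing-Specialist
```

The resulting string is what lands in the title attribute in IAM Identity Center and is then used as the QuickSight group name.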

Create a Lambda function

Now we create a Lambda function to update QuickSight group membership upon the SCIM event. The core part of the function is to obtain the user’s title attribute value in IAM Identity Center based on the triggered event information, and then to ensure that the user exists in QuickSight. If the group name doesn’t exist yet, it creates the group in QuickSight and then adds the user into the group. Complete the following steps:

- On the Lambda console, choose Create function.

- For Name, enter UpdateQuickSightUserUponSCIMEvent.

- For Runtime, choose Python 3.9.

- For Timeout, enter 15 seconds.

- For Permissions, create and attach an IAM role that includes the following permissions (the trusted entity (principal) should be lambda.amazonaws.com):

- Write Python code using the Boto3 SDK for IdentityStore and QuickSight. The following is the entire sample Python code:

Note that this Lambda function requires Boto3 1.24.64 or later. If the Boto3 included in the Lambda runtime is older than this, use a Lambda layer to use the latest version of Boto3. For more details, refer to How do I resolve “unknown service”, “parameter validation failed”, and “object has no attribute” errors from a Python (Boto 3) Lambda function.
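The logic described in this section can be sketched as follows. This is a minimal illustration under stated assumptions, not the original sample code: it assumes the event carries `identityStoreId` and `userId` under `detail.requestParameters`, that the IAM Identity Center user name equals the email used as the QuickSight session name, that the `default` namespace is used, and that each user belongs to a single group (so `list_user_groups` is not paginated). The helper names are hypothetical. The execution role would need to allow at least `identitystore:DescribeUser` and the QuickSight actions behind the calls used here.

```python
from typing import Any, Dict, List

QS_NAMESPACE = "default"         # assumption: default QuickSight namespace
QS_IAM_ROLE = "qs-reader-azure"  # IAM role name created in Part I


def list_all_group_names(qs: Any, account_id: str) -> List[str]:
    """Collect every group name in the namespace, following NextToken pagination."""
    names: List[str] = []
    kwargs: Dict[str, Any] = {"AwsAccountId": account_id, "Namespace": QS_NAMESPACE}
    while True:
        page = qs.list_groups(**kwargs)
        names.extend(group["GroupName"] for group in page.get("GroupList", []))
        token = page.get("NextToken")
        if not token:
            return names
        kwargs["NextToken"] = token


def sync_group_membership(event: Dict[str, Any], identitystore: Any, qs: Any,
                          account_id: str) -> str:
    """Replace the user's QuickSight group membership with the group named in 'Title'."""
    params = event["detail"]["requestParameters"]  # assumed event layout
    user = identitystore.describe_user(
        IdentityStoreId=params["identityStoreId"], UserId=params["userId"])
    new_group = user["Title"]  # combined department-jobTitle value
    qs_user = f"{QS_IAM_ROLE}/{user['UserName']}"  # <IAM role name>/<session name>
    common = {"AwsAccountId": account_id, "Namespace": QS_NAMESPACE}

    # Make sure the user exists in QuickSight; the call raises an error otherwise.
    qs.describe_user(UserName=qs_user, **common)

    # Create the target group if it doesn't exist yet.
    if new_group not in list_all_group_names(qs, account_id):
        qs.create_group(GroupName=new_group, **common)

    # Remove memberships in other groups, then add the new membership.
    response = qs.list_user_groups(UserName=qs_user, **common)
    current = [group["GroupName"] for group in response.get("GroupList", [])]
    for old_group in current:
        if old_group != new_group:
            qs.delete_group_membership(MemberName=qs_user, GroupName=old_group, **common)
    if new_group not in current:
        qs.create_group_membership(MemberName=qs_user, GroupName=new_group, **common)
    return new_group


def lambda_handler(event, context):
    # boto3 is imported here so the helpers above can be exercised without AWS access.
    import boto3
    account_id = boto3.client("sts").get_caller_identity()["Account"]
    group = sync_group_membership(
        event, boto3.client("identitystore"), boto3.client("quicksight"), account_id)
    return {"group": group}
```

Passing the clients into `sync_group_membership` keeps the membership logic separate from AWS access, which makes it straightforward to exercise with stub clients before deploying.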

Add an EventBridge rule to trigger the event

To create an EventBridge rule to invoke the previously created Lambda function, complete the following steps:

- On the EventBridge console, create a new rule.

- For Name, enter updateQuickSightUponSCIMEvent.

- For Event pattern, enter the following code:

- For Targets, choose the Lambda function you created (UpdateQuickSightUserUponSCIMEvent).

- Enable the rule.
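If you prefer to script these console steps, the sketch below prepares the arguments for the Boto3 EventBridge calls `put_rule` and `put_targets` and prints the event pattern as JSON, which could also be pasted into the Event pattern field. The helper name, the Lambda ARN, and the target ID are hypothetical, and the `source` value `aws.sso-directory` in the pattern is an assumption to verify against a real event.

```python
import json
from typing import Any, Dict

# Pattern for the UpdateUser event; "source" is an assumed value.
EVENT_PATTERN: Dict[str, Any] = {
    "source": ["aws.sso-directory"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["sso-directory.amazonaws.com"],
        "eventName": ["UpdateUser"],
    },
}


def build_rule_requests(rule_name: str, lambda_arn: str) -> Dict[str, Dict[str, Any]]:
    """Return keyword arguments for events.put_rule and events.put_targets."""
    return {
        "put_rule": {
            "Name": rule_name,
            "EventPattern": json.dumps(EVENT_PATTERN),
            "State": "ENABLED",  # corresponds to enabling the rule in the console
        },
        "put_targets": {
            "Rule": rule_name,
            "Targets": [{"Id": "quicksight-update-lambda", "Arn": lambda_arn}],
        },
    }


# Placeholder ARN for illustration only.
requests = build_rule_requests(
    "updateQuickSightUponSCIMEvent",
    "arn:aws:lambda:us-east-1:111122223333:function:UpdateQuickSightUserUponSCIMEvent",
)
print(json.dumps(EVENT_PATTERN, indent=2))

# With credentials configured, the requests could be applied like this:
#   import boto3
#   events = boto3.client("events")
#   events.put_rule(**requests["put_rule"])
#   events.put_targets(**requests["put_targets"])
# Outside the console, the Lambda function also needs a resource-based
# permission that lets events.amazonaws.com invoke it.
```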

Verify the configuration by changing a user attribute value at Azure AD

Let’s modify a user’s attribute at Azure AD, and then check if the new group is created and that the user is added into the new one.

- Go back to the Azure AD console.

- From Manage, choose Users.

- Choose one of the users you previously used to log in to QuickSight from the IAM Identity Center portal.

- Choose Edit properties, then edit the values for Job title and Department.

- Save the configuration.

- From Manage, choose Enterprise applications, your application name, and Provisioning.

- Choose Stop provisioning and then Start provisioning in sequence.

In Azure AD, the SCIM provisioning interval is fixed to 40 minutes. To get immediate results, we manually stop and start the provisioning.

- Navigate to the QuickSight console.

- On the drop-down user name menu, choose Manage QuickSight.

- Choose Manage groups.

Now you should find that the new group is created and the user is assigned to this group.

Clean up

When you’re finished with the solution, clean up your environment to minimize cost impact. You may want to delete the following resources:

- Lambda function

- Lambda layer

- IAM role for the Lambda function

- CloudWatch log group for the Lambda function

- EventBridge rule

- QuickSight account

- Note: There can be only one QuickSight account per AWS account, so your QuickSight account might already be in use by other users in your organization. Delete the QuickSight account only if you explicitly set it up to follow this post and are absolutely sure that it is not being used by any other users.

- IAM Identity Center instance

- IAM ID Provider configuration for Azure AD

- Azure AD instance

Summary

This post provided step-by-step instructions to configure IAM Identity Center SCIM provisioning and SAML 2.0 federation from Azure AD for centralized management of QuickSight users. We also demonstrated automated group membership updates in QuickSight based on user attributes in Azure AD, by using SCIM events generated in IAM Identity Center and setting up automation with EventBridge and Lambda.

With this event-driven approach to provisioning users and groups in QuickSight, system administrators have the flexibility to accommodate the different ways of managing users that each organization may require. It also ensures that users and groups stay consistent between QuickSight and Azure AD whenever a user accesses QuickSight.

We are looking forward to hearing any questions or feedback.

About the authors

Takeshi Nakatani is a Principal Big Data Consultant on the Professional Services team in Tokyo. He has 25 years of experience in the IT industry, with expertise in architecting data infrastructure. On his days off, he can be a rock drummer or a motorcyclist.

Wakana Vilquin-Sakashita is a Specialist Solutions Architect for Amazon QuickSight. She works closely with customers to help them make sense of their data through visualization. Previously, Wakana worked for S&P Global, assisting customers in accessing data, insights, and research relevant to their business.

Wakana Vilquin-Sakashita is Specialist Solution Architect for Amazon QuickSight. She works closely with customers to help making sense of the data through visualization. Previously Wakana worked for S&P Global assisting customers to access data, insights and researches relevant for their business.

Mahesh Pasupuleti is a VP of Data & Machine Learning Engineering at Poshmark. He has helped several startups succeed in different domains, including media streaming, healthcare, the financial sector, and marketplaces. He loves software engineering, building high performance teams, and strategy, and enjoys gardening and playing badminton in his free time.

Mahesh Pasupuleti is a VP of Data & Machine Learning Engineering at Poshmark. He has helped several startups succeed in different domains, including media streaming, healthcare, the financial sector, and marketplaces. He loves software engineering, building high performance teams, and strategy, and enjoys gardening and playing badminton in his free time. Gaurav Shah is Director of Data Engineering and ML at Poshmark. He and his team help build data-driven solutions to drive growth at Poshmark.

Gaurav Shah is Director of Data Engineering and ML at Poshmark. He and his team help build data-driven solutions to drive growth at Poshmark. Raghu Mannam is a Sr. Solutions Architect at AWS in San Francisco. He works closely with late-stage startups, many of which have had recent IPOs. His focus is end-to-end solutioning including security, DevOps automation, resilience, analytics, machine learning, and workload optimization in the cloud.

Raghu Mannam is a Sr. Solutions Architect at AWS in San Francisco. He works closely with late-stage startups, many of which have had recent IPOs. His focus is end-to-end solutioning including security, DevOps automation, resilience, analytics, machine learning, and workload optimization in the cloud. Deepesh Malviya is Solutions Architect Manager on the AWS Data Lab team. He and his team help customers architect and build data, analytics, and machine learning solutions to accelerate their key initiatives as part of the AWS Data Lab.

Deepesh Malviya is Solutions Architect Manager on the AWS Data Lab team. He and his team help customers architect and build data, analytics, and machine learning solutions to accelerate their key initiatives as part of the AWS Data Lab.

John Telford is a Senior Consultant at Amazon Web Services. He is a specialist in big data and data warehouses. John has a Computer Science degree from Brunel University.

John Telford is a Senior Consultant at Amazon Web Services. He is a specialist in big data and data warehouses. John has a Computer Science degree from Brunel University. Anwar Rizal is a Senior Machine Learning consultant based in Paris. He works with AWS customers to develop data and AI solutions to sustainably grow their business.

Anwar Rizal is a Senior Machine Learning consultant based in Paris. He works with AWS customers to develop data and AI solutions to sustainably grow their business. Pauline Ting is a Data Scientist in the AWS Professional Services team. She supports customers in achieving and accelerating their business outcome by developing sustainable AI/ML solutions. In her spare time, Pauline enjoys traveling, surfing, and trying new dessert places.

Pauline Ting is a Data Scientist in the AWS Professional Services team. She supports customers in achieving and accelerating their business outcome by developing sustainable AI/ML solutions. In her spare time, Pauline enjoys traveling, surfing, and trying new dessert places.

Alexander Plumb is a Product Manager at Mitratech. Alexander has been a product leader with over 5 years of experience leading to highly successful product launches that meet customer needs.

Alexander Plumb is a Product Manager at Mitratech. Alexander has been a product leader with over 5 years of experience leading to highly successful product launches that meet customer needs. Bani Sharma is a Sr Solutions Architect with Amazon Web Services (AWS), based out of Denver, Colorado. As a Solutions Architect, she works with a large number of Small and Medium businesses, and provides technical guidance and solutions on AWS. She has an area of depth in Containers and Modernization. Prior to AWS, Bani worked in various technical roles for a large Telecom provider Dish Networks and worked as a Senior Developer for HSBC Bank Software development.

Bani Sharma is a Sr Solutions Architect with Amazon Web Services (AWS), based out of Denver, Colorado. As a Solutions Architect, she works with a large number of Small and Medium businesses, and provides technical guidance and solutions on AWS. She has an area of depth in Containers and Modernization. Prior to AWS, Bani worked in various technical roles for a large Telecom provider Dish Networks and worked as a Senior Developer for HSBC Bank Software development. Brian Klein is a Sr Technical Account Manager with Amazon Web Services (AWS), helping digital native businesses utilize AWS services to bring value to their organizations. Brian has worked with AWS technologies for 9 years, designing and operating production internet-facing workloads, with a focus on security, availability, and resilience while demonstrating operational efficiency.

Brian Klein is a Sr Technical Account Manager with Amazon Web Services (AWS), helping digital native businesses utilize AWS services to bring value to their organizations. Brian has worked with AWS technologies for 9 years, designing and operating production internet-facing workloads, with a focus on security, availability, and resilience while demonstrating operational efficiency.

Anish Moorjani is a Data Engineer in the Data and Analytics team at SafetyCulture. He helps SafetyCulture’s analytics infrastructure scale with the exponential increase in the volume and variety of data.

Anish Moorjani is a Data Engineer in the Data and Analytics team at SafetyCulture. He helps SafetyCulture’s analytics infrastructure scale with the exponential increase in the volume and variety of data. Randy Chng is an Analytics Solutions Architect at Amazon Web Services. He works with customers to accelerate the solution of their key business problems.

Randy Chng is an Analytics Solutions Architect at Amazon Web Services. He works with customers to accelerate the solution of their key business problems.

Gowtham Dandu is an Engineering Lead at Infomedia Ltd with a passion for building efficient and effective solutions on the cloud, especially involving data, APIs, and modern SaaS applications. He specializes in building microservices and data platforms that are cost-effective and highly scalable.

Gowtham Dandu is an Engineering Lead at Infomedia Ltd with a passion for building efficient and effective solutions on the cloud, especially involving data, APIs, and modern SaaS applications. He specializes in building microservices and data platforms that are cost-effective and highly scalable. Praveen Kumar is a Specialist Solution Architect at AWS with expertise in designing, building, and implementing modern data and analytics platforms using cloud-native services. His areas of interests are serverless technology, streaming applications, and modern cloud data warehouses.

Praveen Kumar is a Specialist Solution Architect at AWS with expertise in designing, building, and implementing modern data and analytics platforms using cloud-native services. His areas of interests are serverless technology, streaming applications, and modern cloud data warehouses.

Udayasimha Theepireddy is an Elastic Principal Solution Architect, where he works with customers to solve real world technology problems using Elastic and AWS services. He has a strong background in technology, business, and analytics.

Udayasimha Theepireddy is an Elastic Principal Solution Architect, where he works with customers to solve real world technology problems using Elastic and AWS services. He has a strong background in technology, business, and analytics. Antony Prasad Thevaraj is a Sr. Partner Solutions Architect in Data and Analytics at AWS. He has over 12 years of experience as a Big Data Engineer, and has worked on building complex ETL and ELT pipelines for various business units.

Antony Prasad Thevaraj is a Sr. Partner Solutions Architect in Data and Analytics at AWS. He has over 12 years of experience as a Big Data Engineer, and has worked on building complex ETL and ELT pipelines for various business units. Mostafa Mansour is a Principal Product Manager – Tech at Amazon Web Services where he works on Amazon Kinesis Data Firehose. He specializes in developing intuitive product experiences that solve complex challenges for customers at scale. When he’s not hard at work on Amazon Kinesis Data Firehose, you’ll likely find Mostafa on the squash court, where he loves to take on challengers and perfect his dropshots.

Mostafa Mansour is a Principal Product Manager – Tech at Amazon Web Services where he works on Amazon Kinesis Data Firehose. He specializes in developing intuitive product experiences that solve complex challenges for customers at scale. When he’s not hard at work on Amazon Kinesis Data Firehose, you’ll likely find Mostafa on the squash court, where he loves to take on challengers and perfect his dropshots.

Jimish Shah is a Senior Product Manager at AWS with 15+ years of experience bringing products to market in log analytics, cybersecurity, and IP video streaming. He’s passionate about launching products that offer delightful customer experiences and solve complex customer problems. In his free time, he enjoys exploring cafes, hiking, and taking long walks.

Rohit Pujari is the Head of Product for Embedded Analytics at QuickSight. He is passionate about shaping the future of infusing data-rich experiences into the products and applications we use every day. Rohit brings a wealth of experience in analytics and machine learning from having worked with leading data companies and their customers. During his free time, you can find him lining up at the local ice cream shop for his second scoop.

Kalyan Janaki is a Senior Big Data & Analytics Specialist with Amazon Web Services. He helps customers architect and build highly scalable, performant, and secure cloud-based solutions on AWS.

Satesh Sonti is a Sr. Analytics Specialist Solutions Architect based out of Atlanta, specialized in building enterprise data platforms, data warehousing, and analytics solutions. He has over 16 years of experience in building data assets and leading complex data platform programs for banking and insurance clients across the globe.

Tanya Rhodes is a Senior Solutions Architect based out of San Francisco, focused on games customers with emphasis on analytics, scaling, and performance enhancement of games and supporting systems. She has over 25 years of experience in enterprise and solutions architecture specializing in very large business organizations across multiple lines of business including games, banking, healthcare, higher education, and state governments.

Flora Wu is a Sr. Resident Architect at AWS Data Lab. She helps enterprise customers create data analytics strategies and build solutions to accelerate their business outcomes. In her spare time, she enjoys playing tennis, dancing salsa, and traveling.

Daniel Li is a Sr. Solutions Architect at Amazon Web Services. He focuses on helping customers develop, adopt, and implement cloud services and strategy. When not working, he likes spending time outdoors with his family.

Kachi Odoemene is an Applied Scientist at AWS AI. He builds AI/ML solutions to solve business problems for AWS customers.

Taylor McNally is a Deep Learning Architect at Amazon Machine Learning Solutions Lab. He helps customers from various industries build solutions leveraging AI/ML on AWS. He enjoys a good cup of coffee, the outdoors, and time with his family and energetic dog.

Austin Welch is a Data Scientist in the Amazon ML Solutions Lab. He develops custom deep learning models to help AWS public sector customers accelerate their AI and cloud adoption. In his spare time, he enjoys reading, traveling, and jiu-jitsu.

Sushant Majithia is a Principal Product Manager for EMR at Amazon Web Services.

Vishal Vyas is a Senior Software Engineer for EMR at Amazon Web Services.

Matthew Liem is a Senior Solution Architecture Manager at AWS.