Post Syndicated from João Tomé original https://blog.cloudflare.com/2022-attacks-an-august-reading-list-to-go-shields-up/

In 2022, cybersecurity is a must-have for anyone who doesn’t want to risk being caught in a cyberattack with consequences that are difficult to deal with. And with a war in Europe (Ukraine) still going on, cyberwar shows no signs of stopping, at a time when more people are online than ever before: 4.95 billion in early 2022, or 62.5% of the world’s total population (estimates say that number grew around 4% during 2021 and 7.3% in 2020).

Throughout the year we, at Cloudflare, have been announcing new products, solutions and initiatives that highlight how we have been preventing, mitigating and constantly learning from many thousands of small and big cyberattacks over the years. Right now, we block an average of 124 billion cyber threats per day. The more attacks we deal with, the better we know how to stop them, and the easier it gets to find and deal with new threats, and for customers to forget we’re there, protecting them.

In 2022, we have been onboarding many customers while they’re being attacked, something we know well from the past (Wikimedia/Wikipedia or Eurovision are just two case studies of many, and last year there was a Fortune Global 500 company example we wrote about). Recently, we dealt with an SMS phishing attack and published a rundown of it.

Providing services for almost 20% of websites online and to millions of Internet properties and customers using our global network in more than 270 cities (we recently arrived in Guam) also plays a big role in that. For example, in Q1’22 Cloudflare blocked an average of 117 billion cyber threats each day (much more than in previous quarters).

Now that August is here, and many in the Northern Hemisphere are enjoying the summer and vacations, here’s a reading list that doubles as a summary focused on cyberattacks and, in itself, as a 2022 guide to this more-relevant-than-ever area.

War & Cyberwar: Attacks increasing

But first, some context. There are all sorts of attacks, but generally speaking they have been increasing. To give some of our data on DDoS attacks in 2022 Q2: application-layer attacks increased by 72% YoY (Year over Year) and network-layer DDoS attacks increased by 109% YoY.

The US government issued “warnings” back in March, after the war in Ukraine started, urging everyone in the country, as well as allies and partners, to be aware of the need to “enhance cybersecurity”. The US Cybersecurity and Infrastructure Security Agency (CISA) created the Shields Up initiative, given how “Russia’s invasion of Ukraine could impact organizations both within and beyond the region”. The UK and Japan, among others, also issued warnings.

That said, here are the first two, more general, reading list suggestions about attacks:

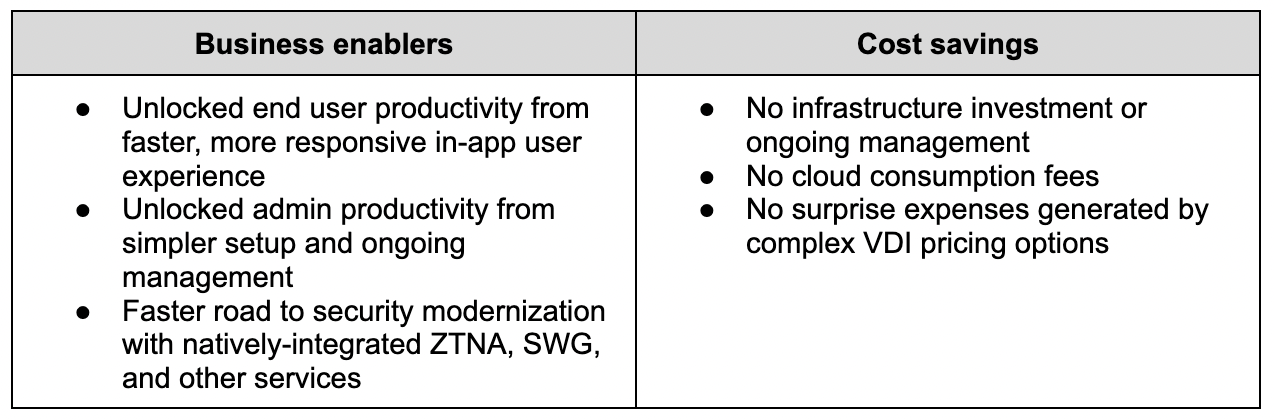

Shields up: free Cloudflare services to improve your cyber readiness (✍️)

After the war started and governments released warnings, we wrote this blog post summing up the free Cloudflare services that can improve your cyber readiness. Whether you’re a seasoned IT professional or a novice website operator, you can see a variety of services for websites, apps, or APIs, including DDoS mitigation and protection of teams or even personal devices (from phones to routers). If this resonates with you, this announcement of a collaboration to simplify the adoption of Zero Trust for IT and security teams could also be useful: CrowdStrike’s endpoint security meets Cloudflare’s Zero Trust Services.

In Ukraine and beyond, what it takes to keep vulnerable groups online (✍️)

This blog post is focused on the eighth anniversary of our Project Galileo, which has been helping human rights, journalism and non-profit public interest organizations or groups. We highlight the trends of the past year, including the dozens of organizations related to Ukraine that were onboarded (many while being attacked) since the war started. Between July 2021 and May 2022, we’ve blocked an average of nearly 57.9 million cyberattacks per day, an increase of nearly 10% over last year, for a total of 18 billion attacks.

In terms of attack methods used against Galileo-protected organizations, the largest fraction (28%) of mitigated requests were classified as “HTTP Anomaly”, with 20% of mitigated requests tagged as SQL injection or SQLi attempts (to target databases) and nearly 13% as attempts to exploit specific CVEs (publicly disclosed cybersecurity vulnerabilities). You can find more insights about those here, including the Spring4Shell vulnerability, Log4j, or the Atlassian one.

And now, without further ado, here’s the full reading list/attacks guide where we highlight some blog posts around four main topics:

1. DDoS attacks & solutions

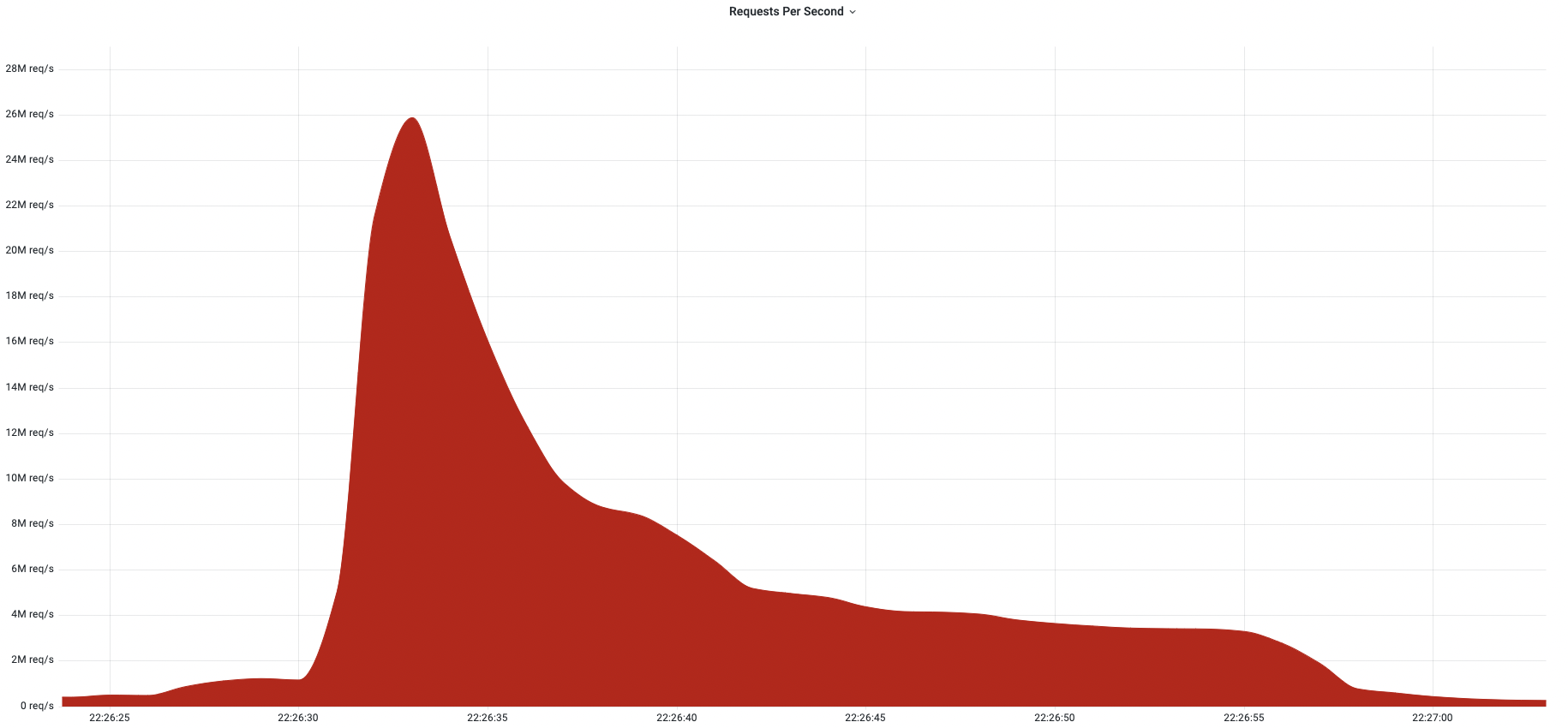

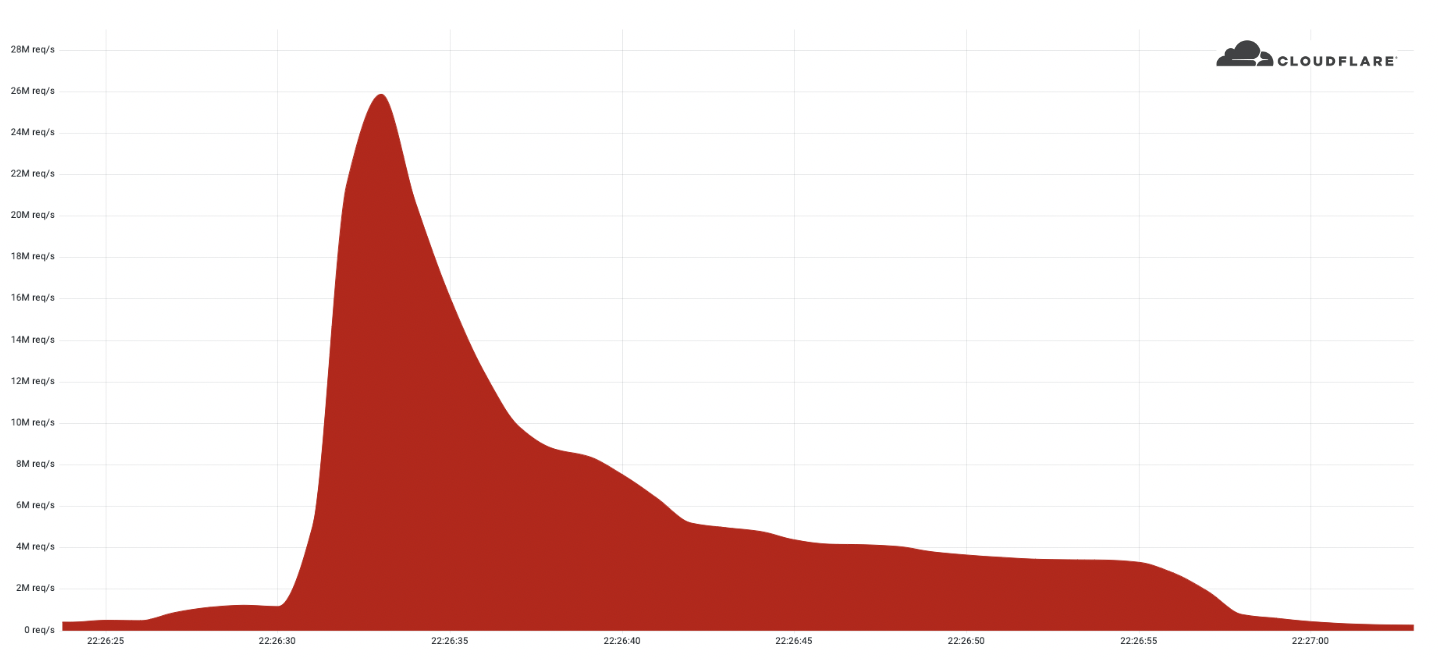

Cloudflare mitigates 26 million request per second DDoS attack (✍️)

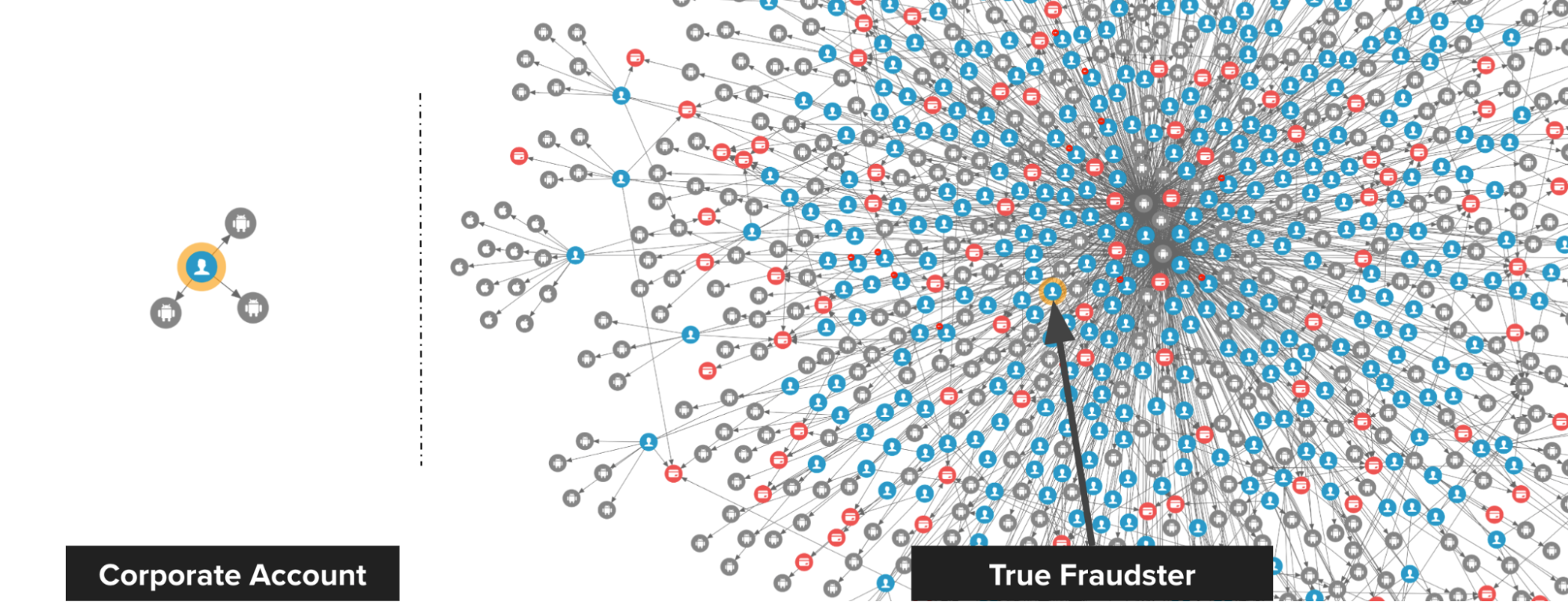

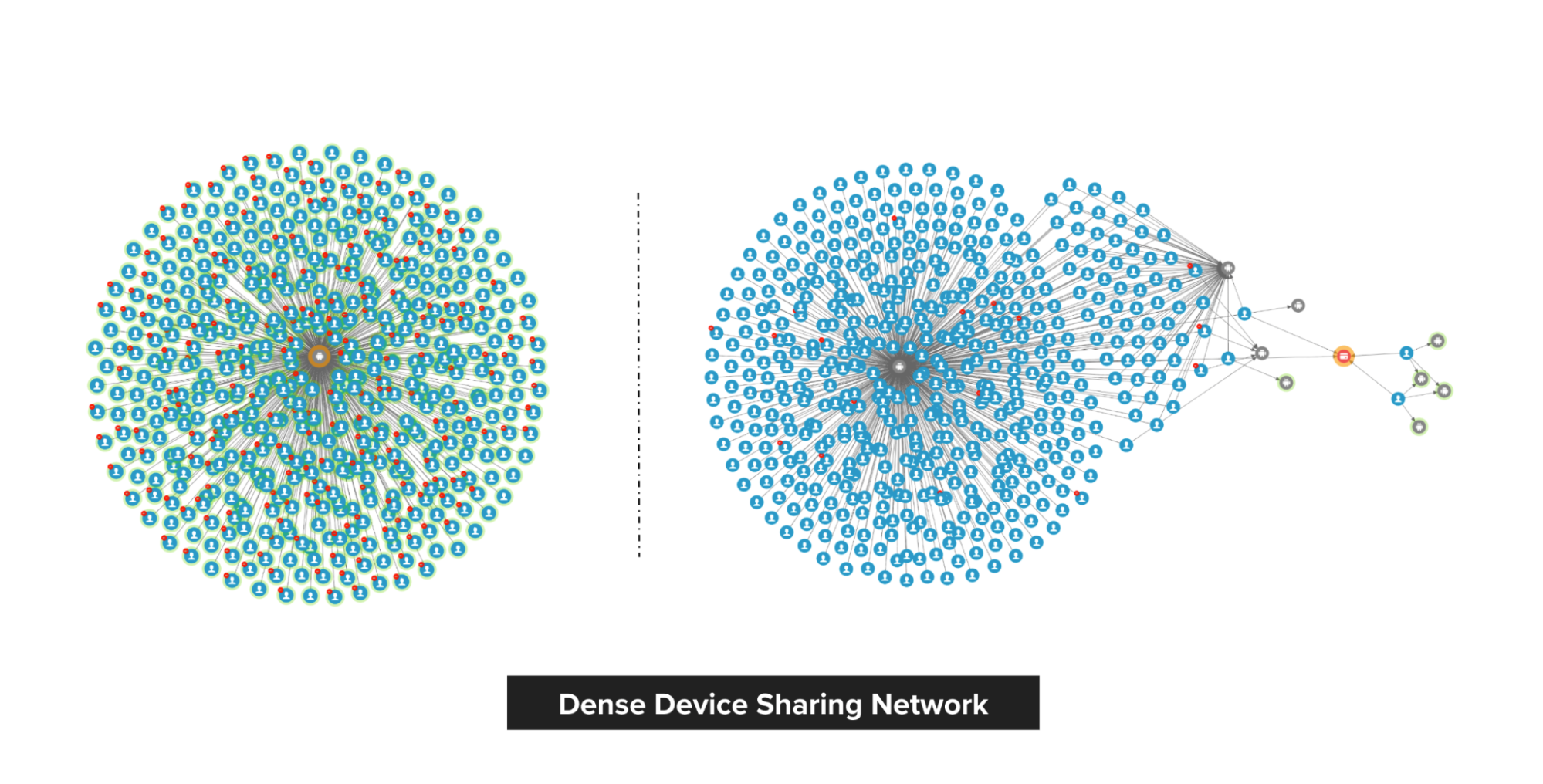

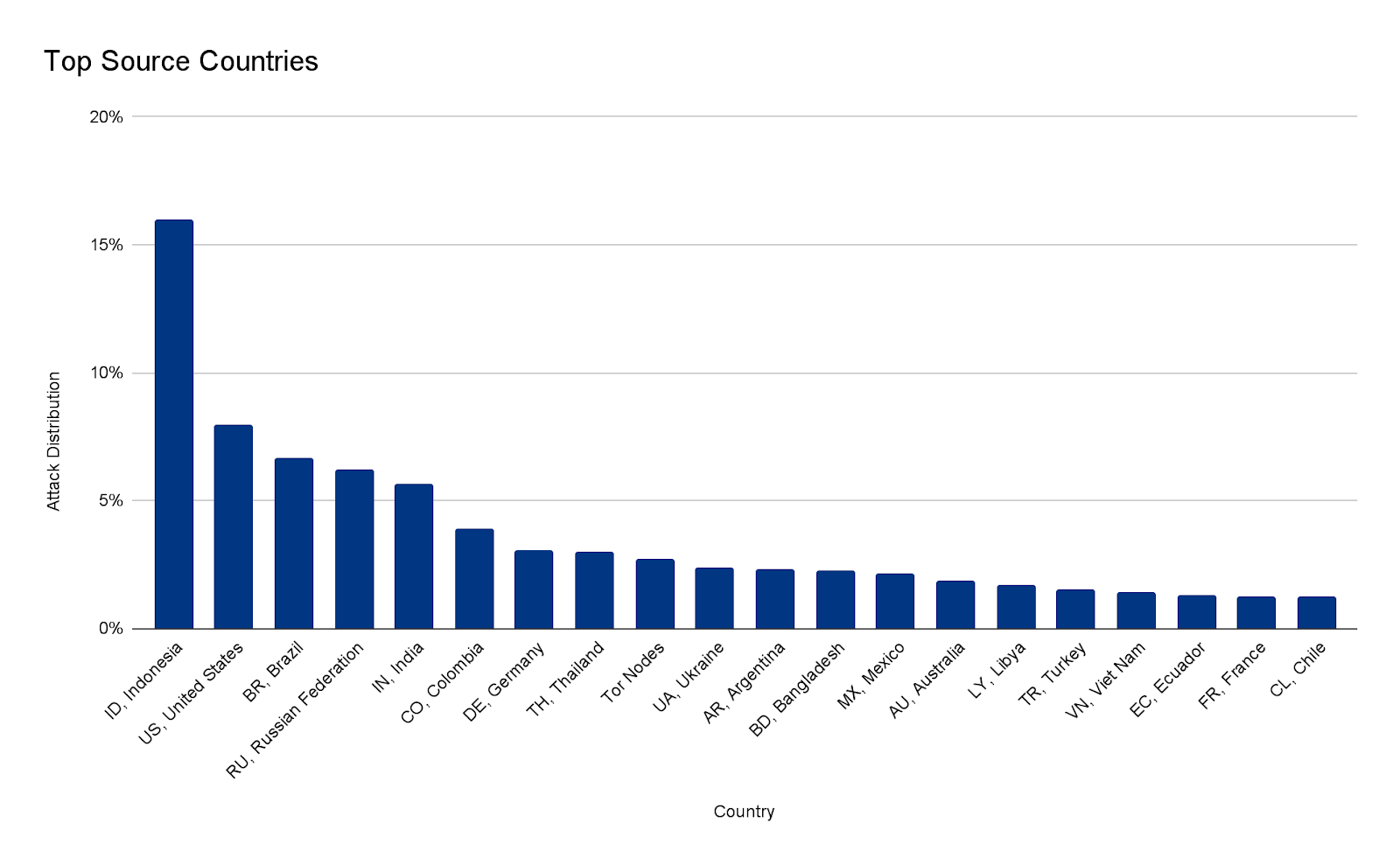

Distributed Denial of Service (DDoS) attacks are the bread and butter of state-based attacks, and we’ve been automatically detecting and mitigating them. Regardless of which country initiates them, bots are all around the world, and in this blog post you can see a specific example of how big those attacks can be (in this case the attack targeted a customer website using Cloudflare’s Free plan). We’ve named this botnet, the most powerful to date, Mantis.

That said, we also explain that although most of the attacks are small, e.g. cyber vandalism, even small attacks can severely impact unprotected Internet properties.

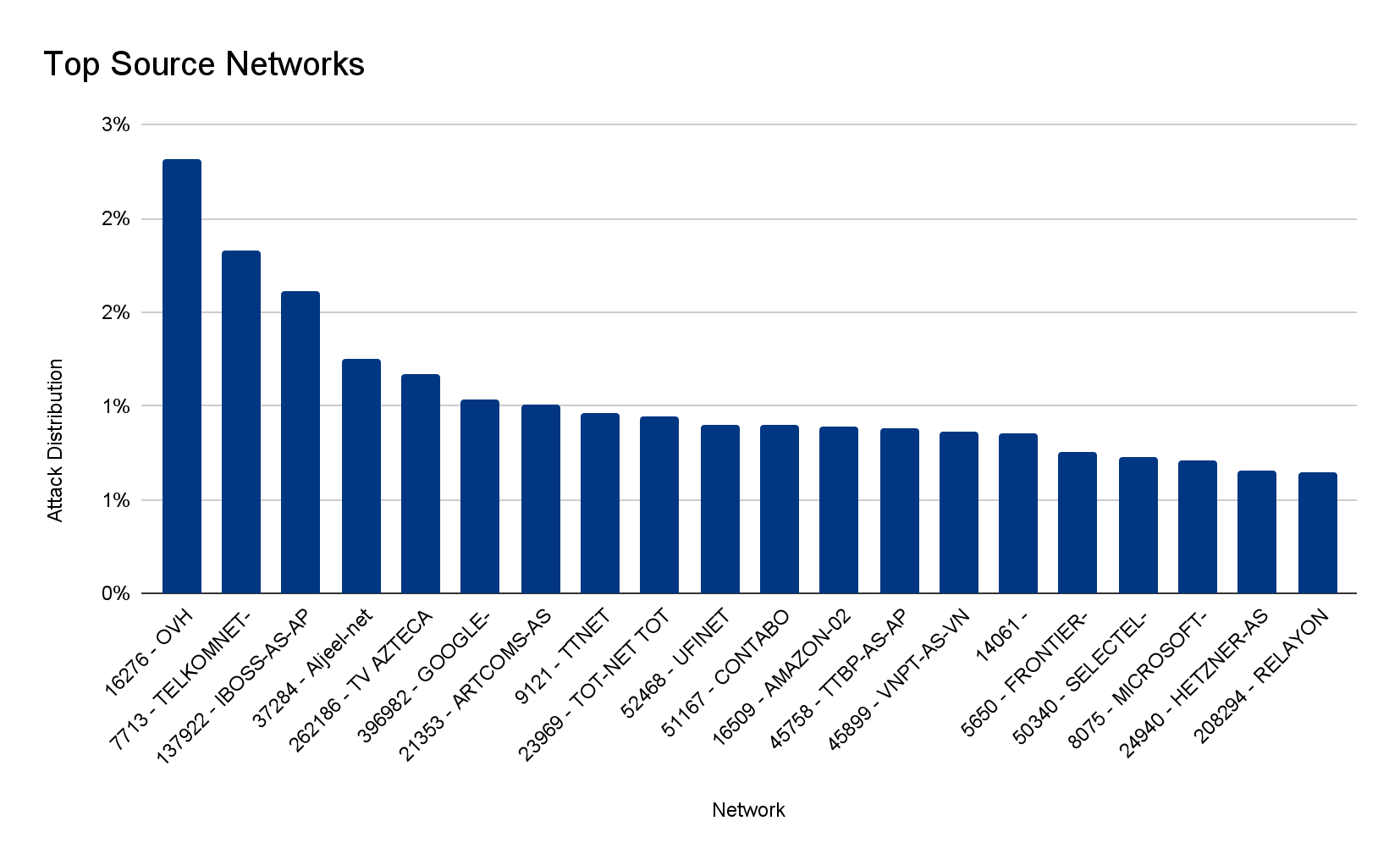

DDoS attack trends for 2022 Q2 (✍️)

We already mentioned how application-layer (72%) and network-layer (109%) attacks have been growing year over year. In the latter, attacks of 100 Gbps and larger increased by 8% QoQ, and attacks lasting more than 3 hours increased by 12% QoQ. Here you can also find interesting trends, like how Broadcast Media companies in Ukraine were the most targeted by DDoS attacks in Q2 2022. In fact, the top five most attacked industries are all in online/Internet media, publishing, and broadcasting.

Cloudflare customers on Free plans can now also get real-time DDoS alerts (✍️)

A DDoS is a cyberattack that attempts to disrupt your online business and can be used against any type of Internet property, server, or network (whether it relies on VoIP servers, UDP-based gaming servers, or HTTP servers). That said, customers on our Free plan can now get real-time alerts about HTTP DDoS attacks that were automatically detected and mitigated by us.

One of the benefits of Cloudflare is that all of our services and features can work together to protect your website and also improve its performance. Here are the top 3 tips from our specialist, Omer Yoachimik, to leverage a Cloudflare free account (and make your settings more effective against DDoS attacks):

- Put Cloudflare in front of your website:

  - Onboard your website to Cloudflare and ensure all of your HTTP traffic routes through Cloudflare. Lock down your origin server, so it only accepts traffic from Cloudflare IPs.

- Leverage Cloudflare’s free security features:

  - DDoS Protection: it’s enabled by default, and if needed you can also override the action to Block for rules that have a different default value.

  - Security Level: this feature will automatically issue challenges to requests that originate from IP addresses with low IP reputation. Ensure it’s set to Medium at least.

  - Block bad bots: Cloudflare’s free tier of bot protection can help ward off simple bots (from cloud ASNs) and headless browsers by issuing a computationally expensive challenge.

  - Firewall rules: you can create up to five free custom firewall rules to block or challenge traffic that you never want to receive.

  - Managed Ruleset: in addition to your custom rules, enable Cloudflare’s Free Managed Ruleset to protect against high-impact, wide-reaching vulnerabilities.

- Move your content to the cloud:

  - Cache as much of your content as possible on the Cloudflare network. The fewer requests that hit your origin, the better, and that includes unwanted traffic.
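To make the “lock down your origin” tip more concrete, here is a minimal Python sketch of how an origin-side check could decide whether a connection really comes from Cloudflare. The `CLOUDFLARE_RANGES` list is only an illustrative subset chosen for this example and may be out of date; the authoritative list is the one published at https://www.cloudflare.com/ips/. The helper name `is_from_cloudflare` is hypothetical, and in practice this kind of allowlist usually lives in a firewall or web server configuration rather than in application code.

```python
import ipaddress

# Illustrative subset of Cloudflare's published IPv4 ranges (assumption:
# replace with the full, current list from https://www.cloudflare.com/ips/).
CLOUDFLARE_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "173.245.48.0/20",
        "103.21.244.0/22",
        "104.16.0.0/13",
        "172.64.0.0/13",
    )
]


def is_from_cloudflare(remote_addr: str) -> bool:
    """Return True if remote_addr falls inside one of the allowed ranges."""
    try:
        addr = ipaddress.ip_address(remote_addr)
    except ValueError:
        # Anything that is not a valid IP address is rejected.
        return False
    # Membership tests across IP versions simply evaluate to False.
    return any(addr in network for network in CLOUDFLARE_RANGES)


if __name__ == "__main__":
    for candidate in ("104.16.1.1", "8.8.8.8"):
        verdict = "allow" if is_from_cloudflare(candidate) else "reject"
        print(f"{candidate}: {verdict}")
```

An origin that rejects everything failing this check can only be reached through Cloudflare, so the free protections listed above cannot be bypassed by hitting the server’s IP address directly.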

2. Application level attacks & WAF

Application security: Cloudflare’s view (✍️)

Did you know that around 8% of all Cloudflare HTTP traffic is mitigated? That is something we explain in this March 2022 blog post on general application security trends. That means that overall, ~2.5 million requests per second are mitigated by our global network and never reach our caches or the origin servers, ensuring our customers’ bandwidth and compute power is only used for clean traffic.

You can also get a sense here of what the top mitigated traffic sources are (Layer 7 DDoS and Custom WAF, or Web Application Firewall, rules are at the top) and what the most common attacks are. Other highlights include that, at that time, 38% of the HTTP traffic we saw was automated (right now the number is actually lower, 31%; current trends can be seen on Radar), and that SQLi, already mentioned in the Galileo post, is the most common attack vector on API endpoints.
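As a quick back-of-the-envelope check, the two figures above imply a total request rate of roughly 31 million requests per second. The short Python sketch below does that arithmetic; the function name `estimate_traffic` is hypothetical, and the result is only an estimate derived from the rounded numbers quoted in this post, not an official Cloudflare statistic.

```python
from typing import Tuple


def estimate_traffic(mitigated_rps: float, mitigated_share: float) -> Tuple[float, float]:
    """Return (total_rps, clean_rps) implied by a mitigated rate and its share."""
    if not 0 < mitigated_share <= 1:
        raise ValueError("mitigated_share must be in (0, 1]")
    total_rps = mitigated_rps / mitigated_share
    return total_rps, total_rps - mitigated_rps


# Figures quoted above: ~2.5 million mitigated requests/second, ~8% of traffic.
total, clean = estimate_traffic(2.5e6, 0.08)
print(f"total ~ {total / 1e6:.0f}M rps, clean ~ {clean / 1e6:.0f}M rps")
```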

WAF for everyone: protecting the web from high severity vulnerabilities (✍️)

This blog post shares a relevant announcement that goes hand in hand with Cloudflare’s mission to “help build a better Internet”, which also includes giving some level of protection at no cost (something that also helps us get better at preventing and mitigating attacks). So, since March, we have been providing a Cloudflare WAF Managed Ruleset that runs by default on all FREE zones, free of charge.

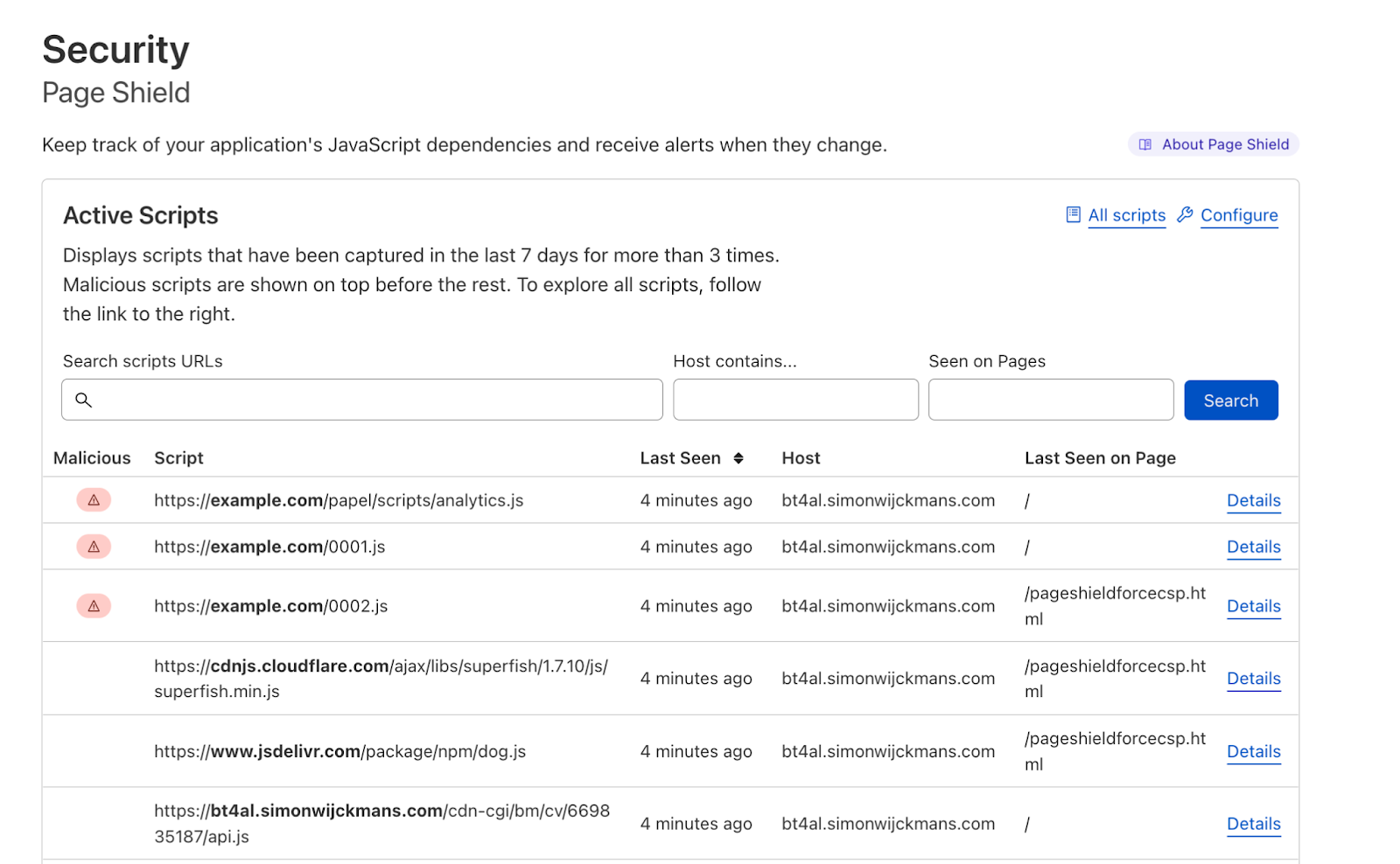

On this topic, there has also been a growing number of client-side security threats that concern CIOs and security professionals, which we mentioned when we gave, in December, all paid plans access to Page Shield features (last month we made Page Shield malicious code alerts more actionable). Another example is how we detect Magecart-style attacks, which have impacted large organizations like British Airways and Ticketmaster, resulting in substantial GDPR fines in both cases.

3. Phishing (Area 1)

Why we are acquiring Area 1 (✍️)

Phishing remains the primary way to breach organizations. According to CISA, 90% of cyber attacks begin with it. And, in a recent report, the FBI referred to Business Email Compromise as the $43 Billion problem facing organizations.

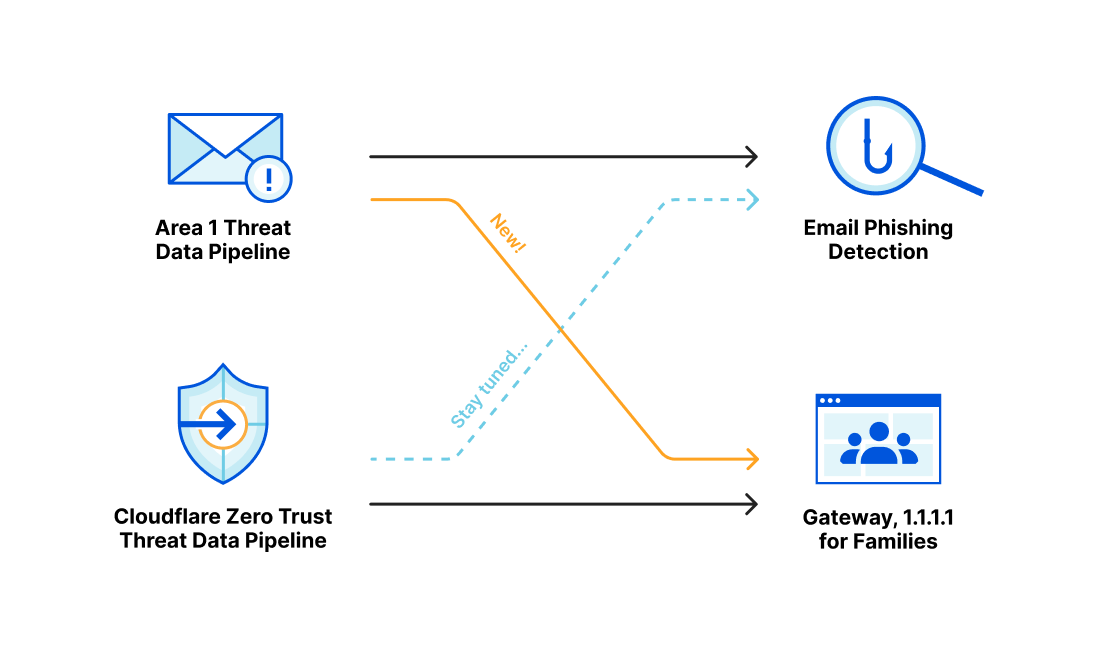

In late February, Cloudflare announced it had agreed to acquire Area 1 Security to help organizations combat advanced email attacks and phishing campaigns. Our blog post explains that “Area 1’s team has built exceptional cloud-native technology to protect businesses from email-based security threats”. So, all that technology and expertise has been integrated since then with our global network to give customers the most complete Zero Trust security platform available.

The mechanics of a sophisticated phishing scam and how we stopped it (✍️)

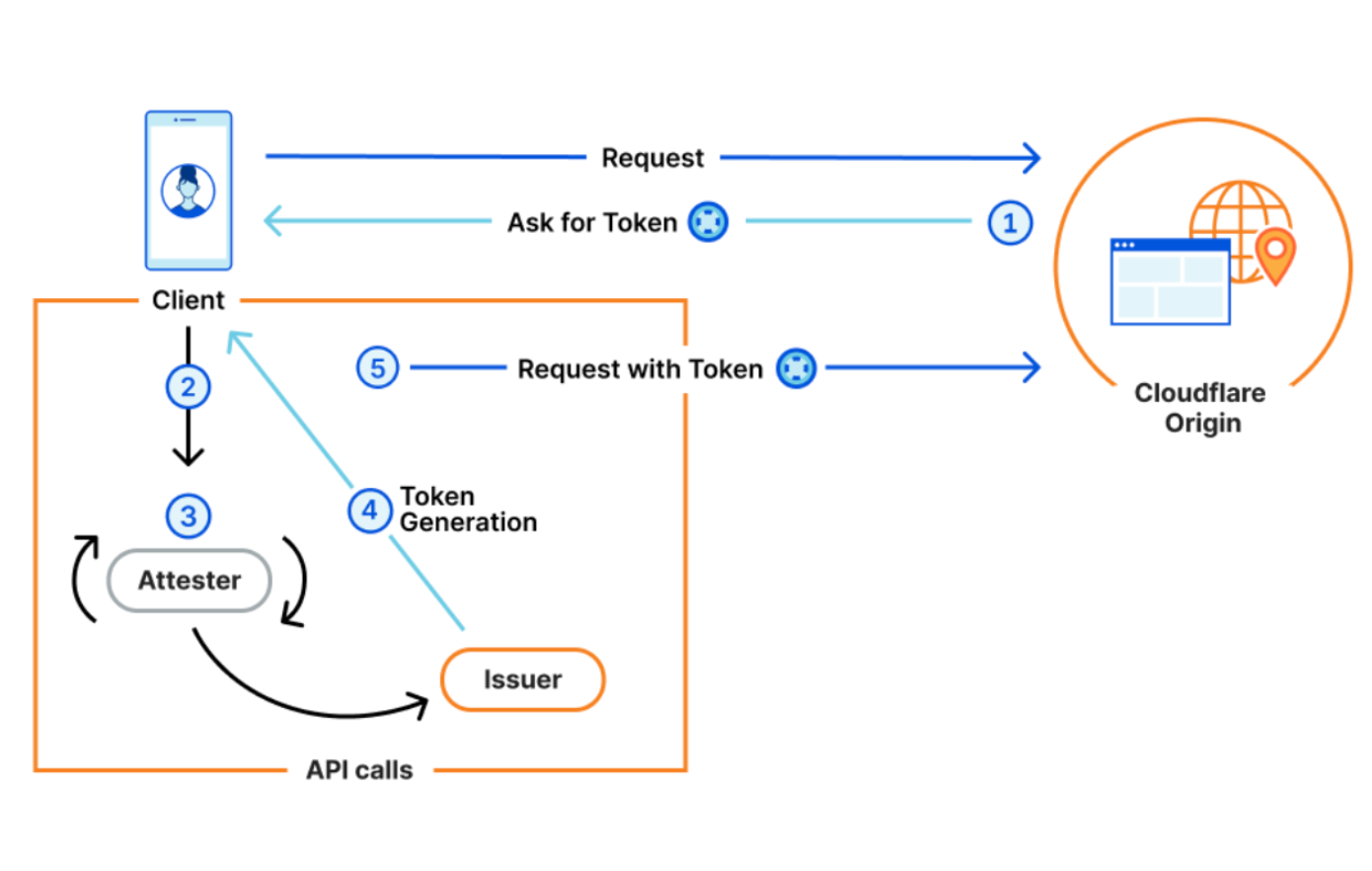

What’s in a message? Possibly a sophisticated attack targeting employees and systems. On August 8, 2022, Twilio shared that they’d been compromised by a targeted SMS phishing attack. We saw an attack with very similar characteristics also targeting Cloudflare’s employees. Here, we do a rundown of how we were able to thwart an attack that could have breached most organizations by using our Cloudflare One products and physical security keys, and of how others can do the same. No Cloudflare systems were compromised.

Our Cloudforce One threat intelligence team dissected the attack and assisted in tracking down the attacker.

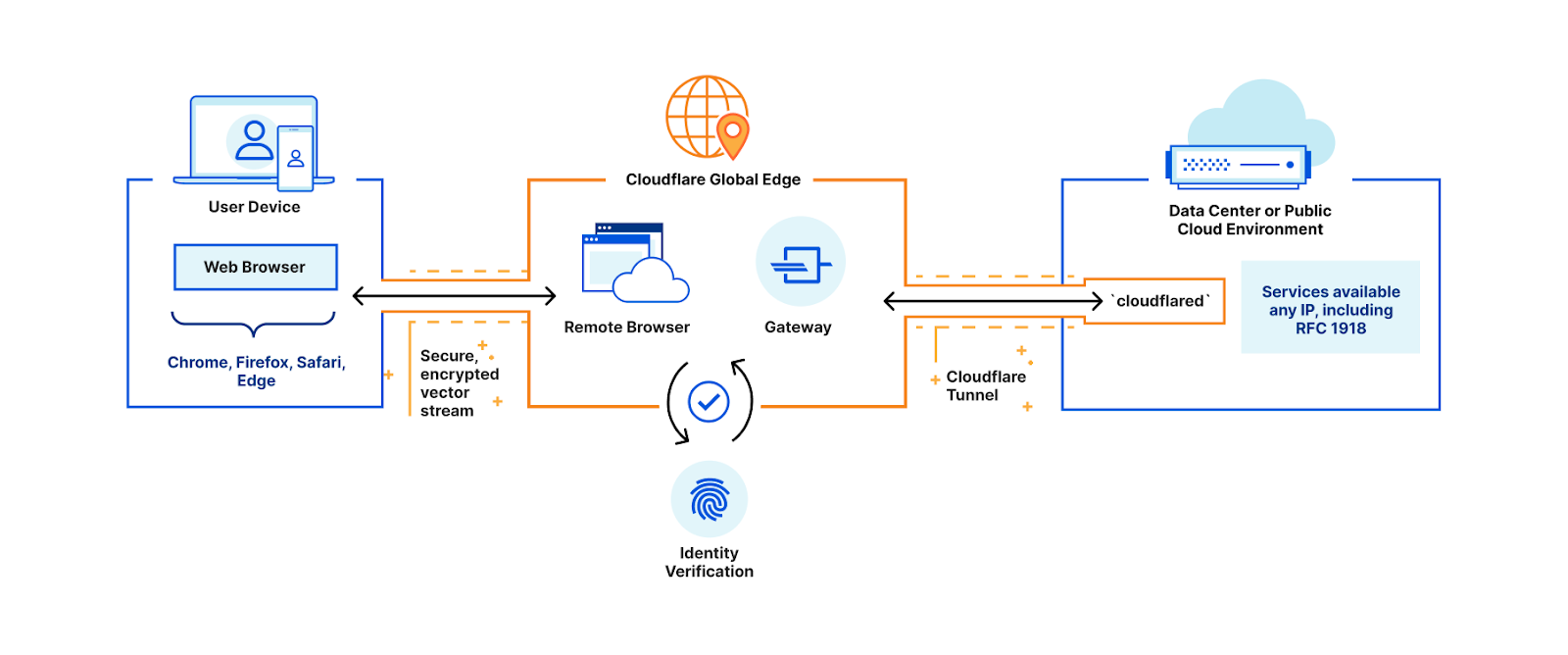

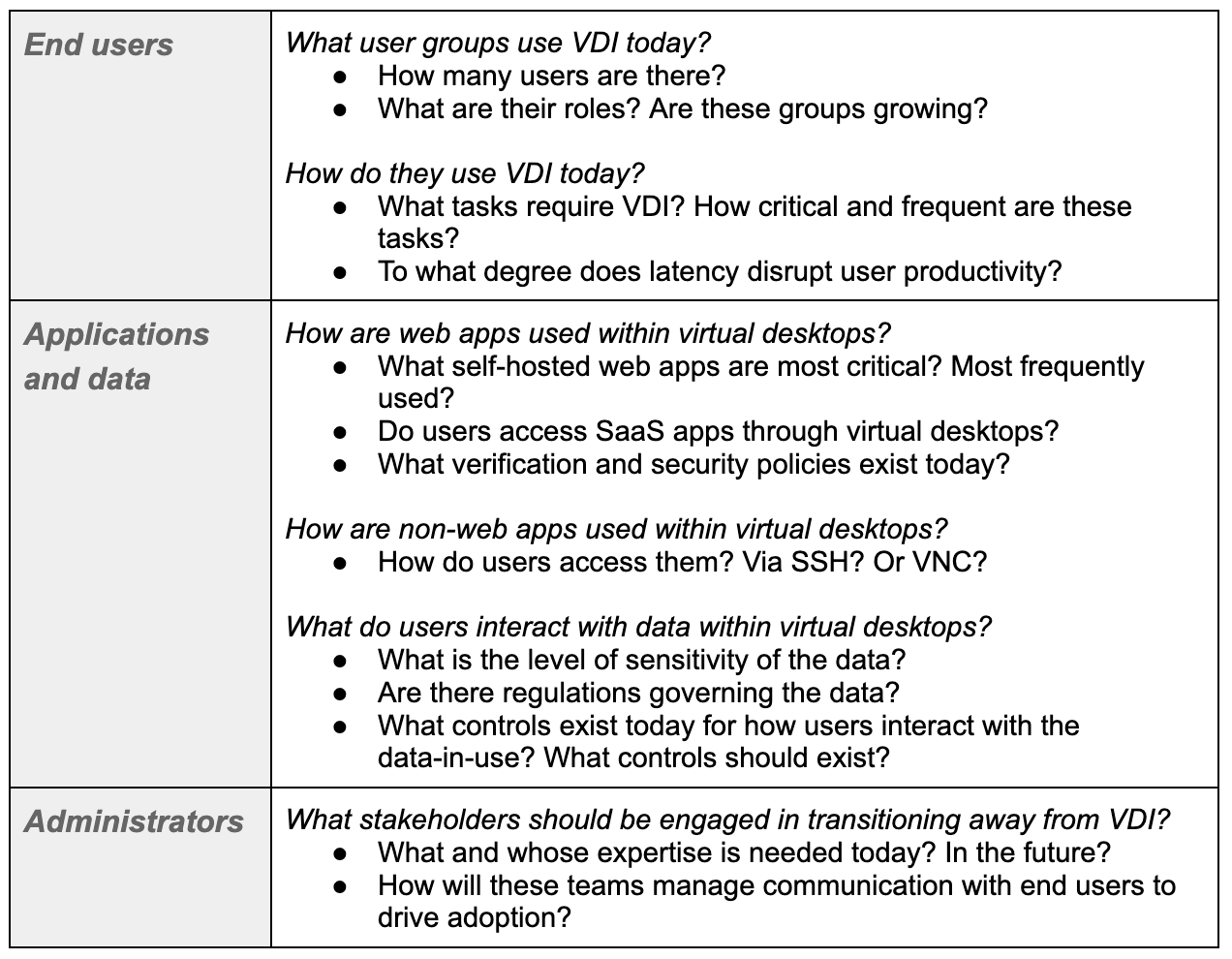

Introducing browser isolation for email links to stop modern phishing threats (✍️)

Why do humans still click on malicious links? It seems that it’s easier to do it than most people think (“human error is human”). Here we explain how an organization nowadays can’t truly have a Zero Trust security posture without securing email, an application that end users implicitly trust, with threat actors taking advantage of that inherent trust.

As part of our journey to integrate Area 1 into our broader Zero Trust suite, Cloudflare Gateway customers can enable Remote Browser Isolation for email links. With that, we now give an unmatched level of protection from modern multi-channel email-based attacks. While we’re at it, you can also learn how to replace your email gateway with Cloudflare Area 1.

On account takeovers, we explained back in March 2021 how we prevent them on our own applications (on the phishing side, we were already using Area 1 as a customer at the time).

Also from last year, here’s our research on password security (and the problem of password reuse), and it gets technical. There’s a new password-related protocol called OPAQUE (we added a new demo about it in January 2022) that could help store secrets better, and our research team is excited about it.

4. Malware/Ransomware & other risks

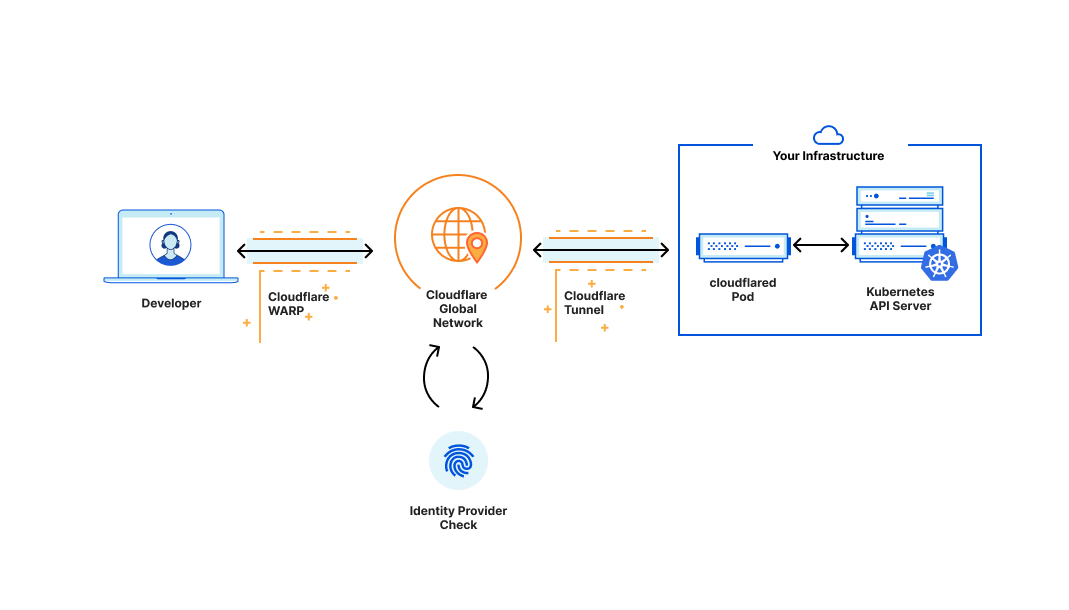

How Cloudflare Security does Zero Trust (✍️)

More than ever, security is part of an ecosystem: the more robust that ecosystem is, the more efficient it is at avoiding or mitigating attacks. In this blog post written for our Cloudflare One week, we explain how that ecosystem, in this case inside our Zero Trust services, can give protection from malware, ransomware, phishing, command & control, shadow IT, and other Internet risks over all ports and protocols.

In 2020, we launched Cloudflare Gateway, focused on malware detection and prevention directly from the Cloudflare edge. Recently, we also added our new CASB product (to secure workplace tools, personalize access, and secure sensitive data).

Anatomy of a Targeted Ransomware Attack (✍️)

What a ransomware attack looks like for the victim:

“Imagine your most critical systems suddenly stop operating. And then someone demands a ransom to get your systems working again. Or someone launches a DDoS against you and demands a ransom to make it stop. That’s the world of ransomware and ransom DDoS.”

Ransomware attacks continue to be on the rise and there’s no sign of them slowing down in the near future. That was true more than a year ago, when this blog post was written, and it still is: attacks were up 105% YoY according to a March 2022 Senate Committee report. And the nature of ransomware attacks is changing. Here, we highlight how Ransom DDoS (RDDoS) attacks work, how Cloudflare onboarded and protected a Fortune 500 customer from a targeted one, and how the Gateway with antivirus we mentioned before helps with just that.

We also show that with ransomware as a service (RaaS) models, it’s even easier for inexperienced threat actors to get their hands on ransomware today (“RaaS is essentially a franchise that allows criminals to rent ransomware from malware authors”). We also include some general recommendations to help you and your organization stay secure. Don’t want to click the link? Here they are:

- Use 2FA everywhere, especially on your remote access entry points. This is where Cloudflare Access really helps.

- Maintain multiple redundant backups of critical systems and data, both onsite and offsite

- Monitor and block malicious domains using Cloudflare Gateway + AV

- Sandbox web browsing activity using Cloudflare RBI to isolate threats at the browser

Investigating threats using the Cloudflare Security Center (✍️)

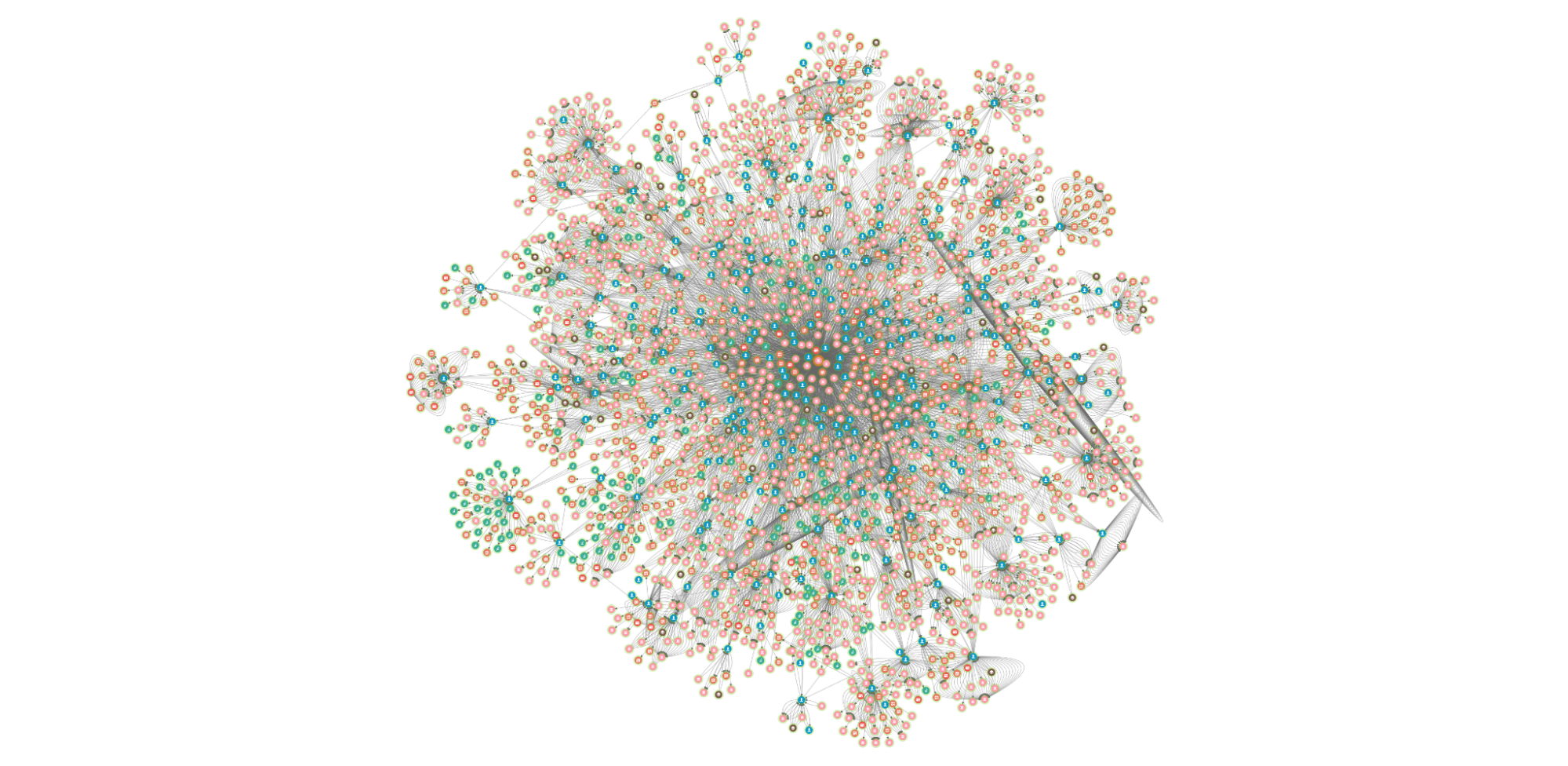

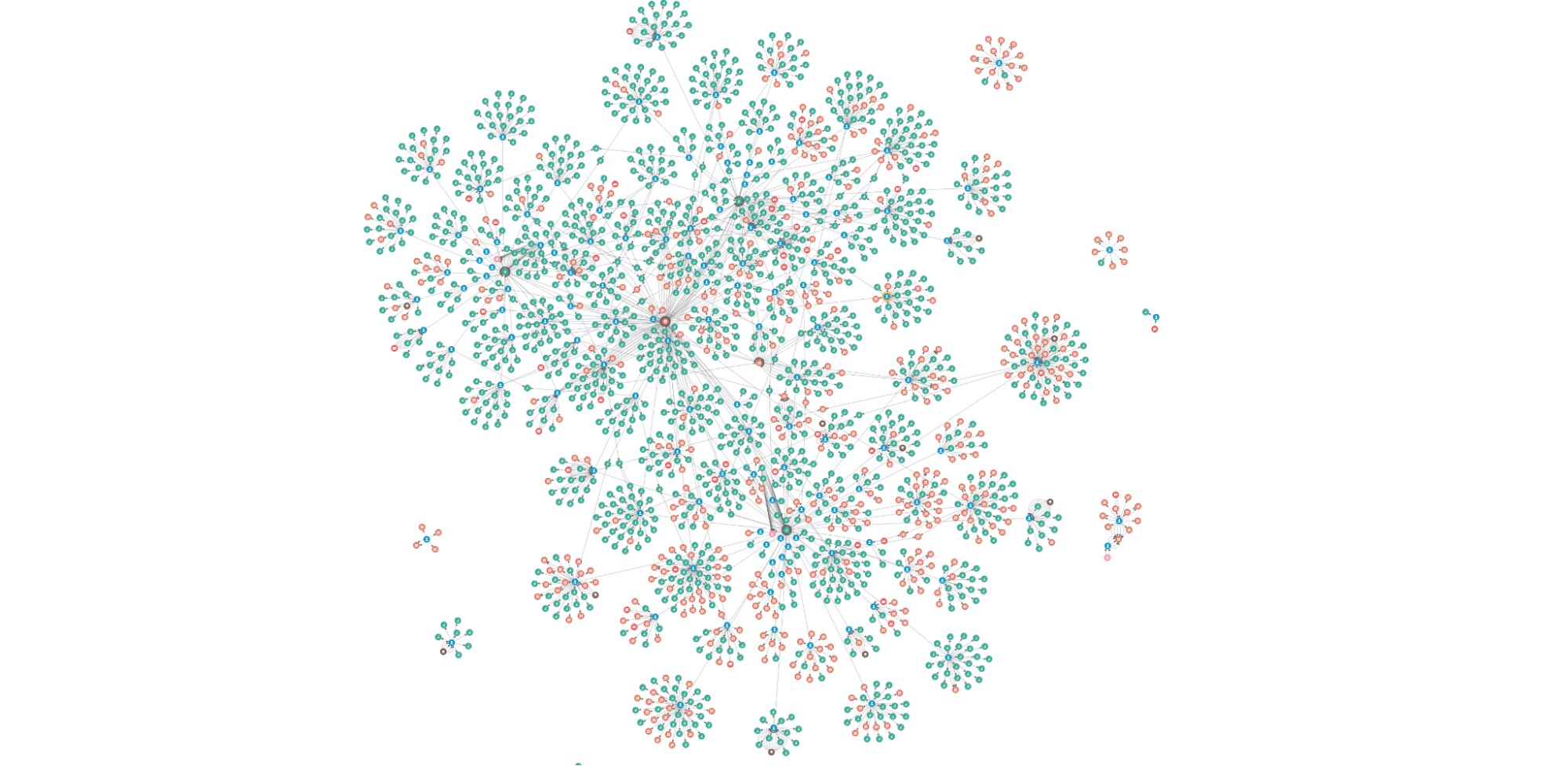

Here, we first announce our new threat investigations portal, Investigate, right in the Cloudflare Security Center, which allows all customers to query our intelligence directly to streamline security workflows and tighten feedback loops.

That’s only possible because we have a global and in-depth view, given that we protect millions of Internet properties from attacks (the free plans help us to have that insight). And the data we glean from these attacks trains our machine learning models and improves the efficacy of our network and application security products.

Steps we’ve taken around Cloudflare’s services in Ukraine, Belarus, and Russia (✍️)

So-called wiper malware attacks (intended to erase the computer they infect) have been emerging, and in this blog post, among other things, we explain how, when a wiper malware was identified in Ukraine (it took government agencies and a major bank offline), we successfully adapted our Zero Trust products to make sure our customers were protected. Those protections cover many Ukrainian organizations under our Project Galileo, which is having a busy year, and they were automatically made available to all our customers. More recently, the satellite provider Viasat was affected.

Zaraz use Workers to make third-party tools secure and fast (✍️)

Cloudflare announced it acquired Zaraz in December 2021 to help us enable cloud loading of third-party tools. Seems unrelated to attacks? Think again (this takes us back to the secure ecosystem I already mentioned). Among other things, here you can learn how Zaraz can make your website more secure (and faster) by offloading third-party scripts.

That helps avoid problems and attacks. Which ones? From code tampering to losing control over the data sent to third parties. My colleague Yo’av Moshe elaborates on what this solution prevents: “the third-party script can intentionally or unintentionally (due to being hacked) collect information it shouldn’t collect, like credit card numbers, Personal Identifiers Information (PIIs), etc.”. You should definitely avoid those.

Introducing Cloudforce One: our new threat operations and research team (✍️)

Meet our new threat operations and research team: Cloudforce One. While this team will publish research, that’s not its reason for being. Its primary objective: track and disrupt threat actors. It’s all about being protected against a great flow of threats with minimal to no involvement.

Wrap up

The expression “if it ain’t broke, don’t fix it” doesn’t seem to apply to the fast-paced Internet industry, where attacks are also on the fast track. If you or your company and services aren’t properly protected, attackers (human or bots) will probably find you sooner rather than later (maybe they already did).

To end on a popular quote used in books, movies and in life: “You keep knocking on the devil’s door long enough and sooner or later someone’s going to answer you”. Although we have been onboarding many organizations while attacks are happening, that’s not the least painful approach. The least painful one is to prevent and mitigate effectively, and to forget the protection is even there.

If you want to try some of the security features mentioned, the Cloudflare Security Center is a good place to start (free plans included). The same goes for our Zero Trust ecosystem (or Cloudflare One, our SASE, Secure Access Service Edge), which is available as self-serve and also includes a free plan (this vendor-agnostic roadmap shows the general advantages of the Zero Trust architecture).

If trends are more your thing, Cloudflare Radar has a near real-time dedicated area about attacks, and you can browse and interact with our DDoS attack trends for 2022 Q2 report.