Post Syndicated from Aigars Kadiķis original https://blog.zabbix.com/agentless-oracle-database-monitoring-with-odbc/15589/

Did you know that Zabbix has an out-of-the-box template for collecting Oracle database metrics? With this template, we can collect data like database, tablespace, ASM, and many other metrics agentlessly, by using ODBC. This blog post will guide you on how to set up ODBC monitoring for Oracle 11.2, 12.1, 18.5, or 19.2 database servers. This post can serve as the perfect set of guidelines for deploying Oracle database monitoring in your environment.

Download Instant client and SQLPlus

The provided commands apply for the following operating systems: CentOS 8, Oracle Linux 8, or Rocky Linux.

First we have to download the following packages:

oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64.rpm

oracle-instantclient19.12-sqlplus-19.12.0.0.0-1.x86_64.rpm

oracle-instantclient19.12-odbc-19.12.0.0.0-1.x86_64.rpm

Here we are downloading

Oracle instant client – required, to establish connectivity to an Oracle database

SQLPlus – A tool that we can use to test the connectivity to an Oracle database

Oracle ODBC package – contains the required ODBC drivers and configuration scripts to enable ODBC connectivity to an Oracle database

Upload the packages to the Zabbix server (or proxy, if you wish to monitor your Oracle DB on a proxy) and place it in:

/tmp/oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64.rpm /tmp/oracle-instantclient19.12-sqlplus-19.12.0.0.0-1.x86_64.rpm /tmp/oracle-instantclient19.12-odbc-19.12.0.0.0-1.x86_64.rpm

Solve OS dependencies

Install ‘libaio’ and ‘libnsl’ library:

dnf -y install libaio-devel libnsl

Otherwise, we will receive errors:

# rpm -ivh /tmp/oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64.rpm

error: Failed dependencies:

libaio is needed by oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64

libnsl.so.1()(64bit) is needed by oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64

# rpm -ivh /tmp/oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64.rpm

error: Failed dependencies:

libnsl.so.1()(64bit) is needed by oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64

Check if Oracle components have been previously deployed on the system. The commands below should provide an empty output:

rpm -qa | grep oracle ldconfig -p | grep oracle

Install Oracle Instant Client

rpm -ivh /tmp/oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64.rpm

Make sure that the package ‘oracle-instantclient19.12-basic-19.12.0.0.0-1.x86_64’ is installed:

rpm -qa | grep oracle

LD config

The official Oracle template page at git.zabbix.com talks about the method to configure Oracle ENV Usage for the service. For this version 19.12 of instant client, it is NOT REQUIRED to create a ‘/etc/sysconfig/zabbix-server’ file with content:

export ORACLE_HOME=/usr/lib/oracle/19.12/client64 export PATH=$PATH:$ORACLE_HOME/bin export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/usr/lib64:/usr/lib:$ORACLE_HOME/bin export TNS_ADMIN=$ORACLE_HOME/network/admin

While we did install the rpm package, the Oracle 19.12 client package did auto-configure LD path at the global level – it means every user on the system can use the Oracle instant client. We can see the LD path have been configured under:

cat /etc/ld.so.conf.d/oracle-instantclient.conf

This will print:

/usr/lib/oracle/19.12/client64/lib

To ensure that the required Oracle libraries are recognized by the OS, we can run:

ldconfig -p | grep oracle

It should print:

liboramysql19.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/liboramysql19.so libocijdbc19.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libocijdbc19.so libociei.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libociei.so libocci.so.19.1 (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libocci.so.19.1 libnnz19.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libnnz19.so libmql1.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libmql1.so libipc1.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libipc1.so libclntshcore.so.19.1 (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libclntshcore.so.19.1 libclntshcore.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libclntshcore.so libclntsh.so.19.1 (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libclntsh.so.19.1 libclntsh.so (libc6,x86-64) => /usr/lib/oracle/19.12/client64/lib/libclntsh.so

Note: If for some reason the ldconfig command shows links to other dynamic libraries – that’s when we might have to create a separate ENV file for Zabbix server/Proxy, which would link the Zabbix application to the correct dynamic libraries, as per the example at the start of this section.

Check if the Oracle service port is reachable

To save us some headache down the line, let’s first check the network connectivity to our Oracle database host. Let’s check if we can reach the default Oracle port at the network level. In this example, we will try to connect to the default Oracle database port, 1521. Depending on which port your Oracle database is listening for connections, adjust accordingly,. Make sure the output says ‘Connected to 10.1.10.15:1521’:

nc -zv 10.1.10.15 1521

Test connection with SQLPlus

We can simulate the connection to the Oracle database before moving on with the ODBC configuration. Make sure that the Oracle username and password used in the command are correct. For this task, we will first need to install the SQLPlus package.:

rpm -ivh /tmp/oracle-instantclient19.12-sqlplus-19.12.0.0.0-1.x86_64.rpm

To simulate the connection, we can use a one-liner command. In the example command I’m using the username ‘system’ together with the password ‘oracle’ to reach out to the Oracle database server ‘10.1.10.15’ via port ‘1521’ and connect to the service name ‘xe’:

sqlplus64 'system/oracle@(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=10.1.10.15)(PORT=1521)))(CONNECT_DATA=(SERVER=DEDICATED)(SERVICE_NAME=xe)))'

In the output we can see: we are using the 19.12 client to connect to 11.2 server:

SQL*Plus: Release 19.0.0.0.0 - Production on Mon Sep 6 13:47:36 2021 Version 19.12.0.0.0 Copyright (c) 1982, 2021, Oracle. All rights reserved. Connected to: Oracle Database 11g Express Edition Release 11.2.0.2.0 - 64bit Production

Note: This gives us an extra hint regarding the Oracle instant client – newer versions of the client are backwards compatible with the older versions of the Oracle database server. Though this doesn’t apply to every version of Oracle client/server, please check the Oracle instant client documentation first.

ODBC connector

When it comes to configuring ODBC, let’s first install the ODBC driver manager

dnf -y install unixODBC

Now we can see that we have two new files – ‘/etc/odbc.ini’ (possibly empty) and ‘/etc/odbcinst.ini’.

The file ‘/etc/odbcinst.ini’ describes driver relation. Currently, when we ‘grep’ the keyword ‘oracle’ there is no oracle relation installed, the output is empty when we run:

grep -i oracle /etc/odbcinst.ini

Our next step is to Install Oracle ODBC driver package:

rpm -ivh /tmp/oracle-instantclient19.12-odbc-19.12.0.0.0-1.x86_64.rpm

The ‘oracle-instantclient*-odbc’ package contains a script that will update the ‘/etc/odbcinst.ini’ configuration automatically:

cd /usr/lib/oracle/19.12/client64/bin ./odbc_update_ini.sh / /usr/lib/oracle/19.12/client64/lib

It will print:

*** ODBCINI environment variable not set,defaulting it to HOME directory!

Now when we print the file on the screen:

cat /etc/odbcinst.ini

We will see that there is the Oracle 19 ODBC driver section added at the end of the file::

[Oracle 19 ODBC driver] Description = Oracle ODBC driver for Oracle 19 Driver = /usr/lib/oracle/19.12/client64/lib/libsqora.so.19.1 Setup = FileUsage = CPTimeout = CPReuse =

It’s important to check if there are no errors produced in the output when executing the ‘ldd’ command. This ensures that the dependencies are satisfied and accessible and there are no conflicts with the library versioning:

ldd /usr/lib/oracle/19.12/client64/lib/libsqora.so.19.1

It will print something similar like:

linux-vdso.so.1 (0x00007fff121b5000) libdl.so.2 => /lib64/libdl.so.2 (0x00007fb18601c000) libm.so.6 => /lib64/libm.so.6 (0x00007fb185c9a000) libpthread.so.0 => /lib64/libpthread.so.0 (0x00007fb185a7a000) libnsl.so.1 => /lib64/libnsl.so.1 (0x00007fb185861000) librt.so.1 => /lib64/librt.so.1 (0x00007fb185659000) libaio.so.1 => /lib64/libaio.so.1 (0x00007fb185456000) libresolv.so.2 => /lib64/libresolv.so.2 (0x00007fb18523f000) libclntsh.so.19.1 => /usr/lib/oracle/19.12/client64/lib/libclntsh.so.19.1 (0x00007fb1810e6000) libclntshcore.so.19.1 => /usr/lib/oracle/19.12/client64/lib/libclntshcore.so.19.1 (0x00007fb180b42000) libodbcinst.so.2 => /lib64/libodbcinst.so.2 (0x00007fb18092c000) libc.so.6 => /lib64/libc.so.6 (0x00007fb180567000) /lib64/ld-linux-x86-64.so.2 (0x00007fb1864da000) libnnz19.so => /usr/lib/oracle/19.12/client64/lib/libnnz19.so (0x00007fb17fdba000) libltdl.so.7 => /lib64/libltdl.so.7 (0x00007fb17fbb0000)

When we executed the ‘odbc_update_ini.sh’ script, a new DSN (data source name) file was made in ‘/root/.odbc.ini’. This is a sample configuration ODBC configuration file which describes what settings this version of ODBC driver supports.

Let’s move this configuration file from the user directories to a location accessible system-wide:

cat /root/.odbc.ini | sudo tee -a /etc/odbc.ini

And remove the file from the user directory completely:

rm /root/.odbc.ini

This way, every user in the system will use only this one ODBC configuration file.

We can now alter the existing configuration – /etc/odbc.ini. I’m highlighting things that have been changed from the defaults:

[Oracle11g] AggregateSQLType = FLOAT Application Attributes = T Attributes = W BatchAutocommitMode = IfAllSuccessful BindAsFLOAT = F CacheBufferSize = 20 CloseCursor = F DisableDPM = F DisableMTS = T DisableRULEHint = T Driver = Oracle 19 ODBC driver DSN = Oracle11g EXECSchemaOpt = EXECSyntax = T Failover = T FailoverDelay = 10 FailoverRetryCount = 10 FetchBufferSize = 64000 ForceWCHAR = F LobPrefetchSize = 8192 Lobs = T Longs = T MaxLargeData = 0 MaxTokenSize = 8192 MetadataIdDefault = F QueryTimeout = T ResultSets = T ServerName = //10.1.10.15:1521/xe SQLGetData extensions = F SQLTranslateErrors = F StatementCache = F Translation DLL = Translation Option = 0 UseOCIDescribeAny = F UserID = system Password = oracle

DNS – Data source name. Should match the section name in brackets, e.g.:[Oracle11g]

ServerName – Oracle server address

UserID – Oracle user name

Password – Oracle user password

To test the connection from the command line, let’s use the isql command-line tool which should simulate the ODBC connection akin to what the Zabbix is doing when gathering metrics:

isql -v Oracle11g

The isql command in this example picks up the ODBC settings (Username, Password, Server address) from the odbc.ini file. All we have to do is reference the particular DSN – Oracle11g

On the other hand, if we do not prefer to keep the password on the filesystem (/etc/odbc.ini), we can erase the lines ‘UserID’ and ‘Password’. Then we can test the ODBC connection with:

isql -v Oracle11g 'system' 'oracle'

In case of a successful connection it should print:

+---------------------------------------+ | Connected! | | | | sql-statement | | help [tablename] | | quit | | | +---------------------------------------+ SQL>

And that’s it for the ODBC configuration! Now we should be able to apply the Oracle by ODBC template in Zabbix

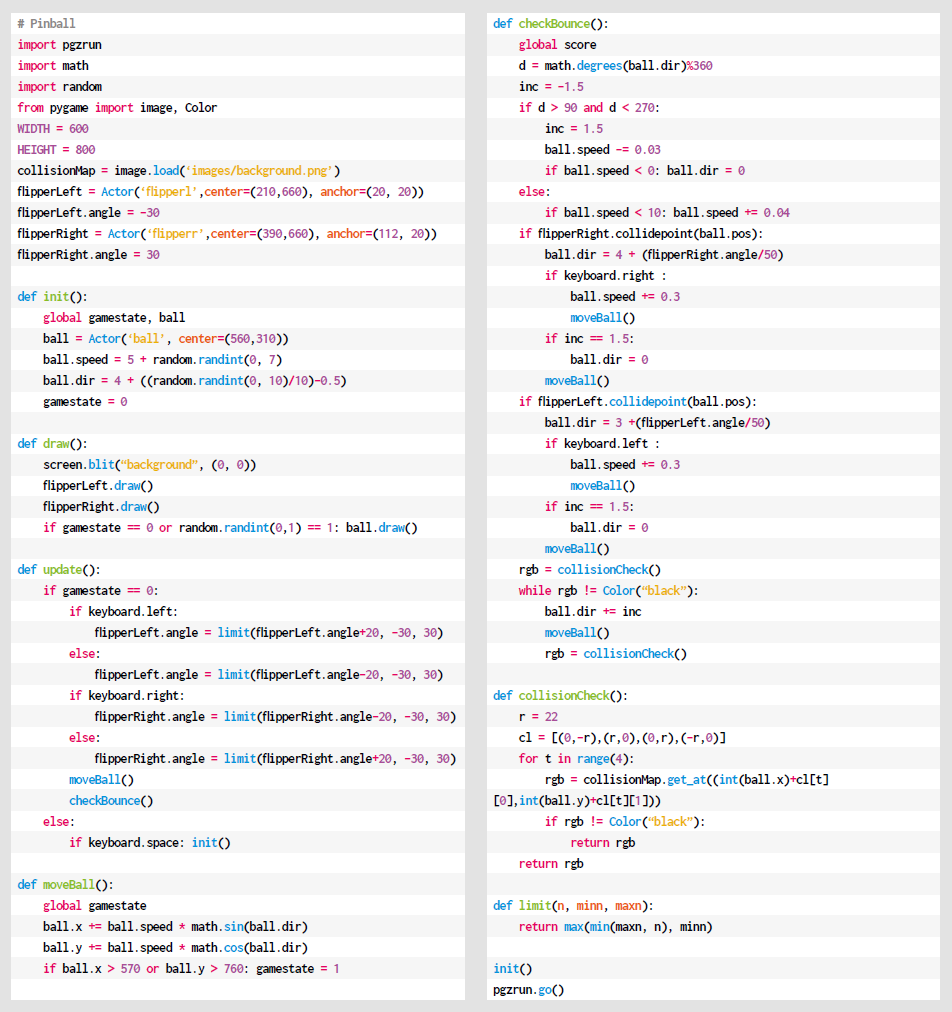

Don’t forget that we also need to provide the necessary Oracle credentials to start collecting Oracle database metrics:

The lessons learned in this blog post can be easily applied to ODBC monitoring and troubleshooting in general, not just Oracle. If you’re having any issues or wish to share your experience with ODBC or Oracle database monitoring – feel free to leave us a comment!

Rakesh Singh

Rakesh Singh